dBFT 2.0 #547

* Add commit phase to consensus algorithm
* fix tests
* Prevent repeated sending of `Commit` messages
* RPC call gettransactionheight (#541)
* getrawtransactionheight: currently two calls are needed to get a transaction height, `getrawtransaction` with `verbose` and then a lookup using the `blockhash`. The other option is to use `confirmations`, but it can be misleading.
* Minor fix
* Shargon's tip
* modified
* Allow to use the wallet inside a RPC plugin (#536)
* Clean code
* Clean code

From #534, the only open discussion was related to the Regeneration phase.

@erikzhang, do you have an idea for the template for the Regeneration?

I think regeneration should have two levels. The first is to achieve state recovery by reading the log recorded by the node, and the second is to achieve state synchronization through the replay of network messages.
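To make the two levels concrete, here is a minimal Python sketch (not the C# code from this PR). Everything in it is a hypothetical stand-in: `ConsensusState`, the log entry layout, and the message dictionaries are assumptions made for illustration only. Level 1 rebuilds state from the node's own log; level 2 fills in what the log is missing by replaying messages from peers.

```python
from dataclasses import dataclass, field


@dataclass
class ConsensusState:
    # Hypothetical, minimal stand-in for a node's consensus context.
    height: int = 0
    view: int = 0
    commits: dict = field(default_factory=dict)  # validator index -> signature


def recover_from_log(log_entries):
    """Level 1: rebuild state from the log recorded by the node itself."""
    state = ConsensusState()
    for entry in log_entries:
        # Later entries overwrite earlier ones, so the last entry wins.
        state.height = entry["height"]
        state.view = entry["view"]
        state.commits = dict(entry.get("commits", {}))
    return state


def replay_network_messages(state, messages):
    """Level 2: synchronize by replaying messages received from peers."""
    for msg in messages:
        # Assumption: only commits for the current height and view are useful.
        if msg["type"] != "Commit":
            continue
        if msg["height"] != state.height or msg["view"] != state.view:
            continue
        state.commits[msg["validator"]] = msg["signature"]
    return state


log = [{"height": 10, "view": 1, "commits": {0: "sigA"}}]
peers = [
    {"type": "Commit", "height": 10, "view": 1, "validator": 2, "signature": "sigC"},
    {"type": "Commit", "height": 9, "view": 0, "validator": 1, "signature": "old"},
]
state = replay_network_messages(recover_from_log(log), peers)
```

In this sketch the stale commit for height 9 is ignored, and the recovered state ends up with the commit from the log plus the one replayed from the network.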

Sounds good, Erik.

* Pass store to `ConsensusService`
* Implement `ISerializable` in `ConsensusContext`
* Start from recovery log
* Fix unit tests due to constructor taking the store.
* Add unit tests for serializing and deserializing the consensus context.

@erikzhang I also added saving of the consensus context on view change; see #575.

Nice to see this as NEO 3.0, @erikzhang; well deserved. On reflection, the set of changes in this PR summarizes the knowledge, background, scientific studies, insights, and discussions of the many people involved.

Perfect, @erikzhang, it seems to be working as expected. I have not yet tested the Regeneration intensively.

After #579 is merged, this PR might be ready to be integrated into master. Given the recent discussions in that thread, we feel that the Regeneration/Recovery mechanism is reaching another level of quality. Combined with a redundancy mechanism for replacing Speakers (in case of delays), we might reach another level of Consensus in the next phase of improvements that will follow this PR. Thanks to everyone who has contributed to this PR.

It all looks correct. In my tests everything is running almost 100%.

In some tests I saw delays in recovering change views; however, that might be due to my iptables configuration and the way I disable the network.

I will check again as soon as possible.

    {
        var eligibleResponders = context.Validators.Length - 1;
        var chosenIndex = (payload.ValidatorIndex + i + message.NewViewNumber) % eligibleResponders;
        if (chosenIndex >= payload.ValidatorIndex) chosenIndex++;

I did not get this point. Why skip one index here? The next iteration of the loop would play this role.

Because the ValidatorIndex is the requester’s index. The requester does not respond to his own ChangeView message with a recovery.

If it instead moved on to the next iteration of the loop, there would be one fewer potential responder. It is preferable to increment the chosenIndex so that the correct number of nodes respond with recovery.
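The difference between incrementing the index and skipping the iteration can be seen in a small Python sketch (not the C# code under review). The arithmetic mirrors the three lines quoted from the diff; the loop bound `max_responders` and the skipping variant are assumptions introduced only for comparison.

```python
def choose_responders(n_validators, requester_index, new_view_number, max_responders):
    """Pick responders by indexing into the n - 1 validators other than the requester."""
    eligible = n_validators - 1
    chosen = []
    for i in range(max_responders):  # max_responders is a hypothetical loop bound
        idx = (requester_index + i + new_view_number) % eligible
        # Shift past the requester's slot so the requester is never selected.
        if idx >= requester_index:
            idx += 1
        chosen.append(idx)
    return chosen


def choose_responders_skipping(n_validators, requester_index, new_view_number, max_responders):
    """Hypothetical alternative: index over all n validators and skip the requester."""
    chosen = []
    for i in range(max_responders):
        idx = (requester_index + i + new_view_number) % n_validators
        if idx == requester_index:
            continue  # this iteration is lost, so fewer nodes respond
        chosen.append(idx)
    return chosen


with_increment = choose_responders(7, 3, 0, 3)
with_skip = choose_responders_skipping(7, 3, 0, 3)
```

With 7 validators, requester index 3, and view 0, the incrementing version yields `[4, 5, 6]`, while the skipping variant yields only `[4, 5]` because its first iteration lands on the requester.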

I am afraid that even if the node has previously sent a

Hi @erikzhang, I agree with you. But maybe, as Jeff said, we could make these additional improvements in other PRs in the near future and try to merge this one when it is ready.

I am not suggesting that it change the view directly if it had sent one in the past; I was just suggesting that it start its expectedView where it left off after it was restarted. It's an optimization that can wait for later if we decide to implement it.

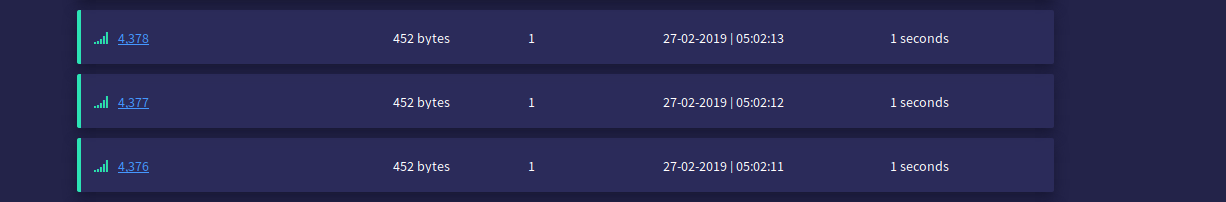

The tests NGD is running are expected to be completed on March 1.

Great! I will do some additional testing between now and then as well.

Testing with #608 looks good so far.

These changes introduce a networking incompatibility with the existing old clients. See my included comment.

[19:12:54.896] chain sync: expected=379 current=354 nodes=7

[19:12:55.175] timeout: height=355 view=0 state=Backup, RequestReceived, ResponseSent, CommitSent

[19:12:55.176] send recovery to resend commit

When a CN is lagging behind and times out, is this message expected?

The current version is online at: https://neocompiler.io/#/ecolab Click on @erikzhang, do you think that we can update the variable

Yes, it is expected. From its perspective it hasn't received newer blocks yet and it hasn't received enough commits for the block it is on, so it will periodically send recovery until it either receives enough commits to persist the block it is on, or receives the next block that others have already persisted.
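The behaviour described above can be sketched as a small decision function in Python (not the node's actual C# code). The function names, the returned action strings, and the `request_change_view` fallback are hypothetical; the commit threshold of `n - f` with `f = (n - 1) // 3` is stated here as an assumption about the usual BFT quorum, not something quoted from this PR.

```python
def commits_required(n_validators):
    # Assumption: tolerate f = (n - 1) // 3 faulty nodes and require n - f commits.
    f = (n_validators - 1) // 3
    return n_validators - f


def on_timeout(commit_sent, commits_received, n_validators, next_block_received):
    """Decide what a lagging node does when its consensus timer fires."""
    if next_block_received:
        # Others already persisted this block; accept it and move on.
        return "advance_to_next_height"
    if commits_received >= commits_required(n_validators):
        # Enough commits are known locally to persist the current block.
        return "persist_block"
    if commit_sent:
        # Committed but still short of commits: keep sending recovery periodically.
        return "send_recovery"
    # Hypothetical fallback for a node that has not committed yet.
    return "request_change_view"


action = on_timeout(commit_sent=True, commits_received=3,
                    n_validators=7, next_block_received=False)
```

Under these assumptions, a node in the `CommitSent` state with 3 of the 5 commits needed among 7 validators gets `send_recovery`, which matches the log line in question.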

Can we reach consensus within 1 second?

I think so, Erik, aehuaheauah... even less nowadays. We are talking about local blockchain networks, for example a bank, supply chain enterprises, or a partially centralized database running CNs. There are some useful applications for low-latency systems, for example:

Such systems might, for example, need only a few tx's per second in a private blockchain, while requiring them to be published as soon as possible. It would be great if we could change it now. We need to be sure that the exponential

neo/neo/Network/P2P/Payloads/BlockBase.cs Line 19 in 91e006c

One difficulty is that

Don't worry, Erik. Let's do it later, in a couple more weeks.

The changes look very nice, and everything is working well in my testing. I'm looking forward to seeing the results of the NGD testing, which should be complete soon.

A day to celebrate! Congratulations to everyone who worked hard to solve this issue, especially @vncoelho, @jsolman, @shargon and others... not to forget @erikzhang, as always :)

PR with the key changes for achieving dBFT 2.0 (#426, #422, #534, among others...), an improved and optimized version of the pioneering dBFT 1.0.

Key features will be merged here before the final merge into master.

Main contributors: @erikzhang, @shargon, @igormcoelho, @vncoelho, @belane, @wanglongfei88, @edwardz246003, @jsolman