
This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

feat(dag-protobuf): cache dag pb directory structure and block indexes (

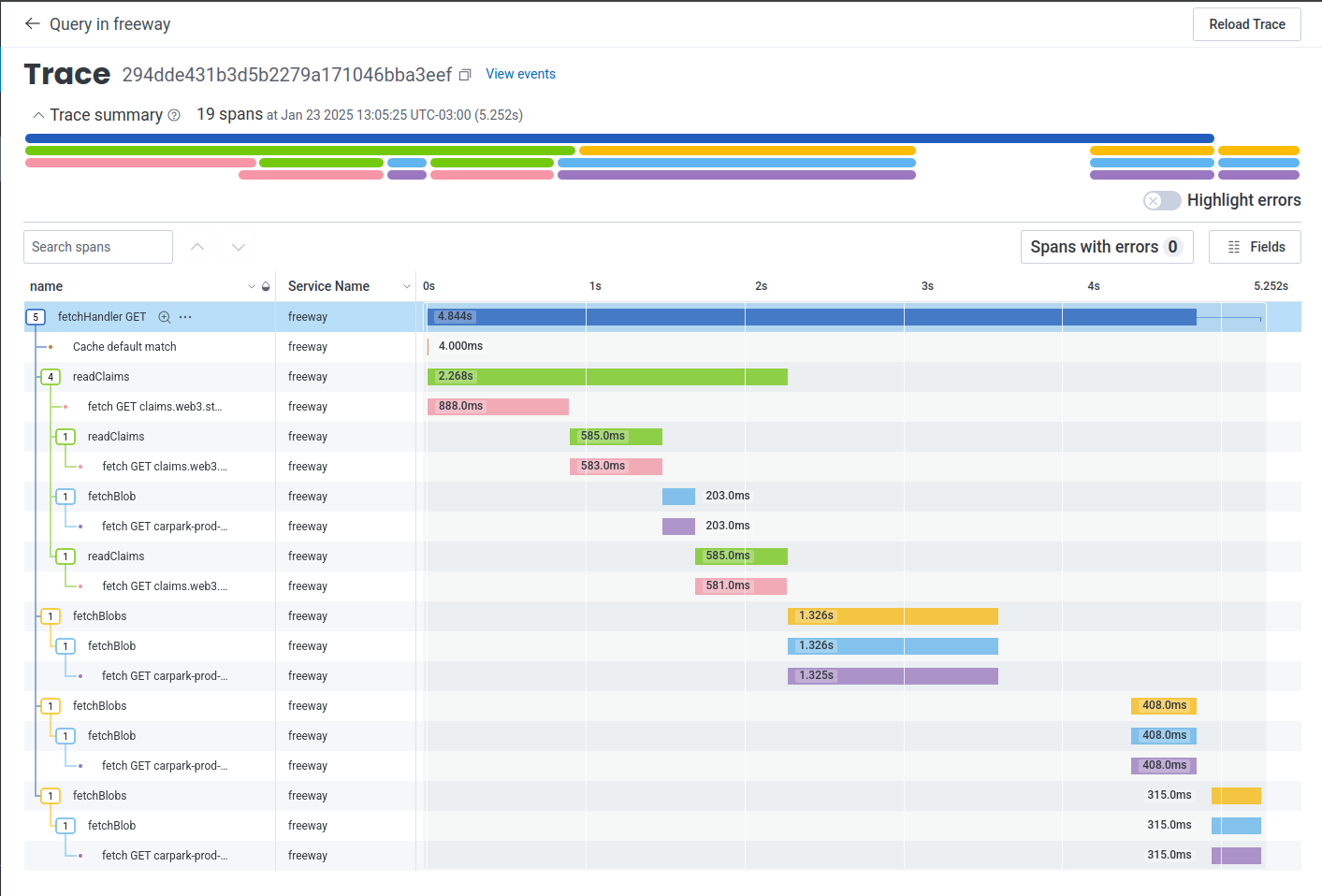

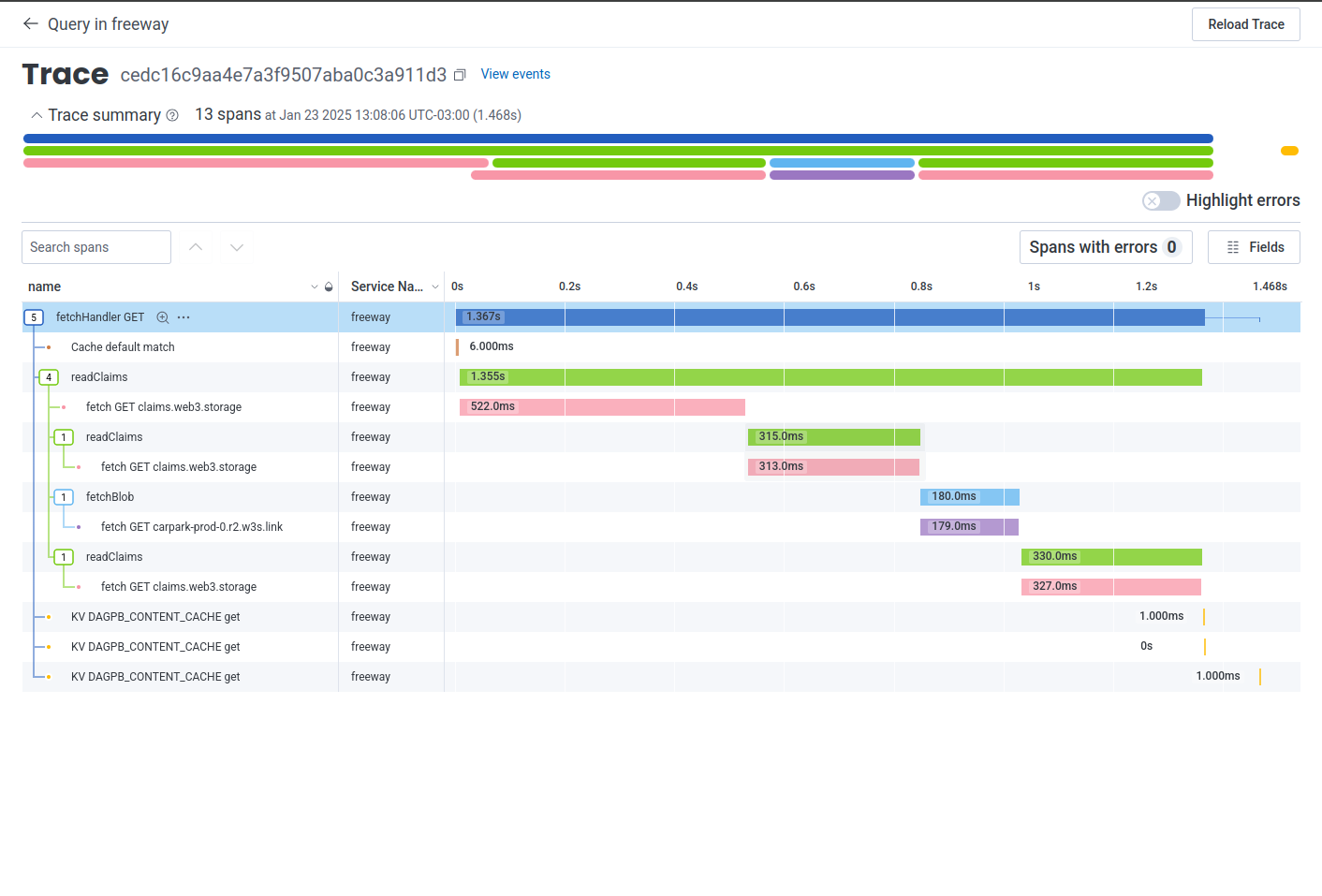

#147) ### Context The requests to fetch a DAG Protobuf directory structure using a CID execute the following steps: 1. Get all content claims lookups to identify where we are pulling data from 2. Fetch cid `bafy...cid` - which represents the folder containing the target file, so that we can determine the verifiable cid for the file (let's call that `bafy...file`) 3. Fetch cid `bafy...file` to get the root block of the file, which in UnixFS contains NO raw data, but rather is a list of sub-blocks that contain the file (let's call those `bafy...bytes1` and `bafy...bytes2`) 4. Fetch the first raw data blocks to send the first byte This PR enables the caching strategy for steps 2 to 4 where instead of fetching the directory structure from the locator and navigating the DAG for every request, it caches the DAGs if they have a Protobuf structure and content size <= 2MB. ### Changes - Updated `withContentClaimsDagula` middleware to cache DAG PB content requests - New KV Store - `DAGPB_CONTENT_CACHE` - Caching rules - `FF_DAGPB_CONTENT_CACHE_TTL_SECONDS`: The number that represents when to expire the key-value pair in seconds from now. The minimum value is 60 seconds. Any value less than 60MB will not be used. We will use **30 days TTL** by default for Production environment. - `FF_DAGPB_CONTENT_CACHE_MAX_SIZE_MB`: The maximum size of the key-value pair in MB. The minimum value is 1 MB. Any value less than 1MB will not be used. We will use **2MB max file size** by default. - `FF_DAGPB_CONTENT_CACHE_ENABLED`: The flag that enables the DAGPB content cache. The cache is **disabled in prod** by default. ### Samples **2MB file - no cache**  - 5.2 seconds **2MB file - cached**  - 1.4 seconds ### KV Limits - Reads: unlimited - Writes (different keys): unlimited - Writes (same key): 1w / sec (rate limiting) - Storage/account & Storage/namespace: unlimited - key size: <= 512 bytes - value size: <= 25MiB - Minimum cache ttl: 60 seconds - Higher limit? 
-> https://forms.gle/ukpeZVLWLnKeixDu7 resolves storacha/project-tracking#301
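The caching rule above is a cache-aside read: serve from KV on a hit, otherwise load the DAG and store it only if it qualifies. The sketch below illustrates that rule under stated assumptions and is not the code in `withContentClaimsDagula`: `ByteKV`, `MemoryKV`, `Loader`, and `getDagPbContent` are hypothetical names, the CID string is used directly as the KV key, and "has a Protobuf structure" is approximated as "the codec is DAG-PB (multicodec `0x70`)".

```typescript
// Hypothetical minimal key-value interface with only the two calls this sketch
// needs. It is modelled loosely on Cloudflare KV get/put with `expirationTtl`,
// but it is NOT the real KVNamespace type.
interface ByteKV {
  get (key: string): Promise<Uint8Array | null>
  put (key: string, value: Uint8Array, options: { expirationTtl: number }): Promise<void>
}

// Multicodec code for DAG-PB; raw leaf blocks use 0x55 instead.
const DAG_PB_CODEC = 0x70

interface DagPbCacheOptions {
  ttlSeconds: number // KV does not accept TTLs below 60 seconds
  maxSizeBytes: number // content larger than this is never cached
}

// Hypothetical loader standing in for "fetch from the locator and walk the DAG".
type Loader = (cid: string) => Promise<{ codec: number, bytes: Uint8Array }>

// Cache-aside read: return cached bytes when present; otherwise load them and
// write them to KV only if they are DAG-PB and within the size limit.
async function getDagPbContent (
  kv: ByteKV,
  cid: string,
  load: Loader,
  opts: DagPbCacheOptions
): Promise<Uint8Array> {
  const cached = await kv.get(cid)
  if (cached !== null) return cached

  const { codec, bytes } = await load(cid)
  if (codec === DAG_PB_CODEC && bytes.byteLength <= opts.maxSizeBytes) {
    await kv.put(cid, bytes, { expirationTtl: opts.ttlSeconds })
  }
  return bytes
}

// In-memory ByteKV for local experiments. It records the TTL of each write
// but does not actually expire entries.
class MemoryKV implements ByteKV {
  readonly store = new Map<string, { value: Uint8Array, ttl: number }>()

  async get (key: string): Promise<Uint8Array | null> {
    return this.store.get(key)?.value ?? null
  }

  async put (key: string, value: Uint8Array, options: { expirationTtl: number }): Promise<void> {
    this.store.set(key, { value, ttl: options.expirationTtl })
  }
}
```

In a Worker, an adapter over the `DAGPB_CONTENT_CACHE` binding would take the place of `MemoryKV`. Keying by CID suits a long TTL because the bytes a CID addresses never change, so an old entry cannot be stale, and CID strings stay far below the 512-byte key limit.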


Showing 5 changed files with 266 additions and 23 deletions.


`@@ -1,5 +1,24 @@`

```typescript
import { KVNamespace } from '@cloudflare/workers-types'
import { Environment as MiddlewareEnvironment } from '@web3-storage/gateway-lib'

export interface Environment extends MiddlewareEnvironment {
  CONTENT_CLAIMS_SERVICE_URL?: string
  /**
   * The KV namespace that stores the DAGPB content cache.
   */
  DAGPB_CONTENT_CACHE: KVNamespace
  /**
   * The number of seconds from now after which the key-value pair expires.
   * The minimum value is 60 seconds. Any value less than 60 seconds will not be used.
   */
  FF_DAGPB_CONTENT_CACHE_TTL_SECONDS?: string
  /**
   * The maximum size of the key-value pair in MB.
   * The minimum value is 1 MB. Any value less than 1MB will not be used.
   */
  FF_DAGPB_CONTENT_CACHE_MAX_SIZE_MB?: string
  /**
   * The flag that enables the DAGPB content cache.
   */
  FF_DAGPB_CONTENT_CACHE_ENABLED: string
}
```
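The `FF_DAGPB_CONTENT_CACHE_*` bindings are typed as strings, so they must be parsed and checked against their minimums before use. This is a minimal sketch, not the gateway's actual parsing code. It assumes that a missing, malformed, or too-small value falls back to the defaults quoted in the PR description (30 days, 2MB), that only the string `'true'` enables the cache, and that 1MB means 1024 * 1024 bytes. `DagPbCacheFlags`, `DagPbCacheConfig`, `parseIntFlag`, and `readDagPbCacheConfig` are hypothetical names.

```typescript
// Hypothetical subset of the Environment bindings above. Worker environment
// variables arrive as strings, so every flag is a string here.
interface DagPbCacheFlags {
  FF_DAGPB_CONTENT_CACHE_ENABLED?: string
  FF_DAGPB_CONTENT_CACHE_TTL_SECONDS?: string
  FF_DAGPB_CONTENT_CACHE_MAX_SIZE_MB?: string
}

interface DagPbCacheConfig {
  enabled: boolean
  ttlSeconds: number
  maxSizeBytes: number
}

// Assumed defaults, taken from the PR description: 30-day TTL, 2MB max size.
const DEFAULT_TTL_SECONDS = 30 * 24 * 60 * 60 // 2,592,000 seconds
const DEFAULT_MAX_SIZE_MB = 2
const MIN_TTL_SECONDS = 60 // KV's minimum TTL
const MIN_MAX_SIZE_MB = 1
const BYTES_PER_MB = 1024 * 1024 // assumption: "MB" is treated as MiB

// Parse an integer flag, falling back when it is missing, malformed, or below `min`.
function parseIntFlag (value: string | undefined, min: number, fallback: number): number {
  const n = Number.parseInt(value ?? '', 10)
  return Number.isFinite(n) && n >= min ? n : fallback
}

function readDagPbCacheConfig (env: DagPbCacheFlags): DagPbCacheConfig {
  return {
    // Assumption: only the literal string 'true' turns the cache on.
    enabled: env.FF_DAGPB_CONTENT_CACHE_ENABLED === 'true',
    ttlSeconds: parseIntFlag(env.FF_DAGPB_CONTENT_CACHE_TTL_SECONDS, MIN_TTL_SECONDS, DEFAULT_TTL_SECONDS),
    maxSizeBytes: parseIntFlag(env.FF_DAGPB_CONTENT_CACHE_MAX_SIZE_MB, MIN_MAX_SIZE_MB, DEFAULT_MAX_SIZE_MB) * BYTES_PER_MB
  }
}
```

With this reading, `{ FF_DAGPB_CONTENT_CACHE_TTL_SECONDS: '30' }` keeps the 30-day default rather than passing a sub-minimum TTL to KV. Whether "will not be used" means "fall back to the default" or "skip caching" is not stated in the PR, so the fallback here is a design choice of the sketch.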
