-

Notifications

You must be signed in to change notification settings - Fork 2

Commit

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

Merge pull request #1 from kaancolak/add-package

(perception_benchmark_tool): add package

- Loading branch information

Showing

23 changed files

with

2,248 additions

and

1 deletion.

There are no files selected for viewing

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -1 +1,96 @@ | ||

| # perception_benchmark_tool | ||

| # perception_benchmark_tool | ||

|

|

||

| This package contains a benchmark tool for testing the perception stack of Autoware.Universe with the Waymo dataset. | ||

|

|

||

| ## Installation | ||

|

|

||

|

|

||

| For testing 3D Object Tracking with Waymo Dataset, follow the given steps. | ||

|

|

||

| 1- Download the Waymo dataset validation segment(.tfrecord) files from the given link. Unpack it to the desired directory. | ||

|

|

||

| <https://waymo.com/open/download/> | ||

|

|

||

|

|

||

| ```bash | ||

| cd ~/Downloads/ | ||

| tar -xvf validation_validation_0000.tar | ||

| ``` | ||

|

|

||

| Change the dataset folder from "benchmark_runner.launch.py" | ||

|

|

||

| 2- Install the Waymo Open Dataset Toolkit. | ||

|

|

||

| ```bash | ||

| mkdir -p ~/perception_benchmark_ws/src | ||

| cd ~/perception_benchmark_ws/src | ||

| git clone https://github.com/autowarefoundation/perception_benchmark_tool.git | ||

| pip3 install protobuf==3.9.2 numpy==1.19.2 | ||

| pip3 install waymo-open-dataset-tf-2-6-0 | ||

| ``` | ||

|

|

||

| 3- For running Autoware.Universe with the Waymo evaluation node, | ||

|

|

||

| Lidar point clouds and camera images are encoded in the .tfrecord file. It may take about ~60-90 seconds to decode | ||

| the data back. | ||

|

|

||

| Go to the Autoware folder and source it, | ||

| ```bash | ||

| source install/setup.bash | ||

| ``` | ||

|

|

||

| Build perception benchmark tool, | ||

| ```bash | ||

| cd ~/perception_benchmark_ws/ | ||

| rosdep install -y --from-paths src --ignore-src --rosdistro $ROS_DISTRO | ||

| colcon build | ||

| source install/setup.bash | ||

| ``` | ||

|

|

||

| Run perception benchmark tool, | ||

| ```bash | ||

| ros2 launch perception_benchmark_tool benchmark_runner.launch.py | ||

| ``` | ||

|

|

||

| This command will run the perception stack with the Waymo Dataset. We will get the ground truth and prediction files in the file | ||

| paths we give as arguments to the launch file. | ||

|

|

||

| Lidar point clouds and camera images are encoded in the .tfrecord file. It may take about ~60-90 seconds to decode | ||

| the data back for each segment file. | ||

|

|

||

| 4- Install Waymo Open Dataset toolkit for metric computation: | ||

|

|

||

| Follow the given command or instruction provided by the Waymo: <https://github.com/waymo-research/waymo-open-dataset/blob/master/docs/quick_start.md> | ||

|

|

||

| ```bash | ||

| git clone https://github.com/waymo-research/waymo-open-dataset.git waymo-od | ||

| cd waymo-od | ||

| git checkout remotes/origin/master | ||

| sudo apt-get install --assume-yes pkg-config zip g++ zlib1g-dev unzip python3 python3-pip | ||

| BAZEL_VERSION=3.1.0 | ||

| wget https://github.com/bazelbuild/bazel/releases/download/${BAZEL_VERSION}/bazel-${BAZEL_VERSION}-installer-linux-x86_64.sh | ||

| sudo bash bazel-${BAZEL_VERSION}-installer-linux-x86_64.sh | ||

| sudo apt install build-essential | ||

| ./configure.sh | ||

| bazel clean | ||

| bazel build waymo_open_dataset/metrics/tools/compute_tracking_metrics_main | ||

| ``` | ||

|

|

||

| 5- Evaluate tracking result | ||

|

|

||

| ```bash | ||

| bazel-bin/waymo_open_dataset/metrics/tools/compute_tracking_metrics_main \ | ||

| ~/benchmark_result/predictions.bin ~/benchmark_result/gt.bin | ||

| ``` | ||

|

|

||

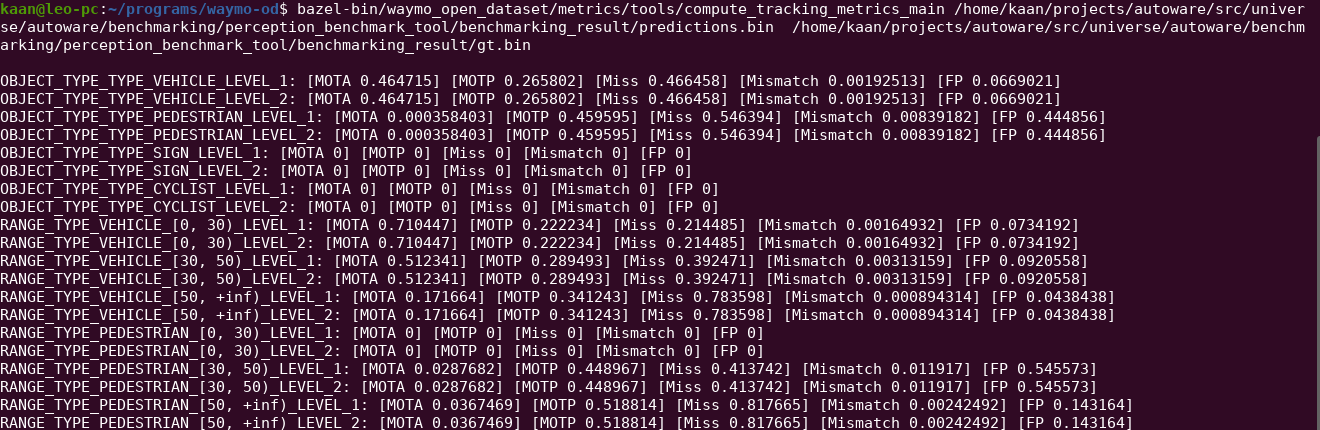

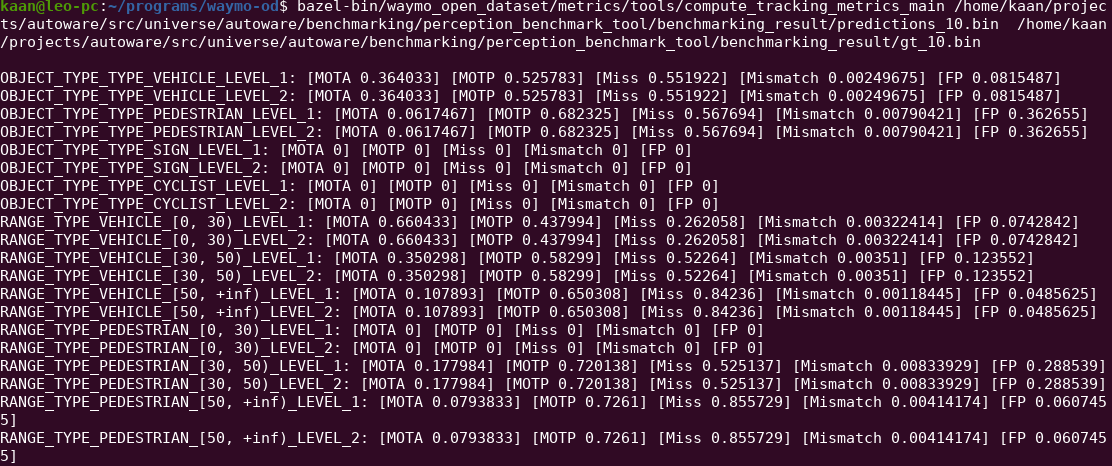

| ## Result | ||

|

|

||

| The evaluation result of the Perception pipeline on the Waymo Dataset is presented below. | ||

|

|

||

| ### lidar_centerpoint | ||

|

|

||

|  | ||

|

|

||

| ### lidar_apollo_instance_segmentation | ||

|

|

||

|  |

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,103 @@ | ||

| import os | ||

| from os.path import join as joinPath | ||

|

|

||

| from launch import LaunchDescription | ||

| from launch.actions import DeclareLaunchArgument | ||

| from launch.substitutions import LaunchConfiguration | ||

| from launch_ros.actions import Node | ||

|

|

||

|

|

||

| def generate_launch_description(): | ||

|

|

||

| dataset_path_launch_arg = DeclareLaunchArgument( | ||

| "dataset_path", | ||

| default_value=joinPath(os.environ["HOME"], "validation_validation_0000"), | ||

| description="", | ||

| ) | ||

|

|

||

| use_camera_launch_arg = DeclareLaunchArgument( | ||

| "use_camera", | ||

| default_value="False", | ||

| description="", | ||

| ) | ||

|

|

||

| result_path_launch_arg = DeclareLaunchArgument( | ||

| "result_path", | ||

| default_value=joinPath(os.environ["HOME"], "benchmark_result"), | ||

| description="", | ||

| ) | ||

|

|

||

| benchmark_frame_launch_arg = DeclareLaunchArgument("benchmark_frame", default_value="base_link") | ||

|

|

||

| launch_file_launch_arg = DeclareLaunchArgument( | ||

| "launch_file", | ||

| default_value="waymo_perception.launch.xml", | ||

| description="Launch file for testing perception stack", | ||

| ) | ||

|

|

||

| vehicle_model_launch_arg = DeclareLaunchArgument( | ||

| "vehicle_model", | ||

| default_value="sample_vehicle", | ||

| description="", | ||

| ) | ||

|

|

||

| sensor_model_launch_arg = DeclareLaunchArgument( | ||

| "sensor_model", | ||

| default_value="sample_sensor_kit", | ||

| description="", | ||

| ) | ||

|

|

||

| benchmark_node = Node( | ||

| package="perception_benchmark_tool", | ||

| name="benchmark_node", | ||

| executable="benchmark_node", | ||

| output="screen", | ||

| parameters=[ | ||

| { | ||

| "result_path": LaunchConfiguration("result_path"), | ||

| "benchmark_frame": LaunchConfiguration("benchmark_frame"), | ||

| } | ||

| ], | ||

| ) | ||

|

|

||

| waymo_player_node = Node( | ||

| package="perception_benchmark_tool", | ||

| name="waymo_player_node", | ||

| executable="waymo_player_node", | ||

| output="screen", | ||

| parameters=[ | ||

| { | ||

| "dataset_path": LaunchConfiguration("dataset_path"), | ||

| "use_camera": LaunchConfiguration("use_camera"), | ||

| } | ||

| ], | ||

| ) | ||

|

|

||

| autoware_workflow_runner_node = Node( | ||

| package="perception_benchmark_tool", | ||

| name="autoware_workflow_runner_node", | ||

| executable="autoware_workflow_runner_node", | ||

| output="screen", | ||

| parameters=[ | ||

| { | ||

| "launch_file": LaunchConfiguration("launch_file"), | ||

| "vehicle_model": LaunchConfiguration("vehicle_model"), | ||

| "sensor_model": LaunchConfiguration("sensor_model"), | ||

| } | ||

| ], | ||

| ) | ||

|

|

||

| return LaunchDescription( | ||

| [ | ||

| dataset_path_launch_arg, | ||

| use_camera_launch_arg, | ||

| benchmark_frame_launch_arg, | ||

| result_path_launch_arg, | ||

| launch_file_launch_arg, | ||

| vehicle_model_launch_arg, | ||

| sensor_model_launch_arg, | ||

| benchmark_node, | ||

| waymo_player_node, | ||

| autoware_workflow_runner_node, | ||

| ] | ||

| ) |

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,107 @@ | ||

| # Copyright 2020 Tier IV, Inc. All rights reserved. | ||

| # | ||

| # Licensed under the Apache License, Version 2.0 (the "License"); | ||

| # you may not use this file except in compliance with the License. | ||

| # You may obtain a copy of the License at | ||

| # | ||

| # http://www.apache.org/licenses/LICENSE-2.0 | ||

| # | ||

| # Unless required by applicable law or agreed to in writing, software | ||

| # distributed under the License is distributed on an "AS IS" BASIS, | ||

| # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. | ||

| # See the License for the specific language governing permissions and | ||

| # limitations under the License. | ||

|

|

||

|

|

||

| import launch | ||

| from launch.actions import DeclareLaunchArgument | ||

| from launch.actions import OpaqueFunction | ||

| from launch.actions import SetLaunchConfiguration | ||

| from launch.conditions import IfCondition | ||

| from launch.conditions import UnlessCondition | ||

| from launch.substitutions import LaunchConfiguration | ||

| from launch_ros.actions import ComposableNodeContainer | ||

| from launch_ros.actions import LoadComposableNodes | ||

| from launch_ros.descriptions import ComposableNode | ||

|

|

||

|

|

||

| def launch_setup(context, *args, **kwargs): | ||

|

|

||

| # set concat filter as a component | ||

| concat_component = ComposableNode( | ||

| package="pointcloud_preprocessor", | ||

| plugin="pointcloud_preprocessor::PointCloudConcatenateDataSynchronizerComponent", | ||

| name="concatenate_data", | ||

| remappings=[("output", "/sensing/lidar/concatenated/pointcloud")], | ||

| parameters=[ | ||

| { | ||

| "input_topics": [ | ||

| "/point_cloud/front_lidar", | ||

| "/point_cloud/rear_lidar", | ||

| "/point_cloud/side_left_lidar", | ||

| "/point_cloud/side_right_lidar", | ||

| "/point_cloud/top_lidar", | ||

| ], | ||

| "output_frame": LaunchConfiguration("base_frame"), | ||

| } | ||

| ], | ||

| extra_arguments=[{"use_intra_process_comms": LaunchConfiguration("use_intra_process")}], | ||

| ) | ||

|

|

||

| # set container to run all required components in the same process | ||

| container = ComposableNodeContainer( | ||

| name=LaunchConfiguration("container_name"), | ||

| namespace="", | ||

| package="rclcpp_components", | ||

| executable=LaunchConfiguration("container_executable"), | ||

| composable_node_descriptions=[], | ||

| condition=UnlessCondition(LaunchConfiguration("use_pointcloud_container")), | ||

| output="screen", | ||

| ) | ||

|

|

||

| target_container = ( | ||

| container | ||

| if UnlessCondition(LaunchConfiguration("use_pointcloud_container")).evaluate(context) | ||

| else LaunchConfiguration("container_name") | ||

| ) | ||

|

|

||

| # load concat or passthrough filter | ||

| concat_loader = LoadComposableNodes( | ||

| composable_node_descriptions=[concat_component], | ||

| target_container=target_container, | ||

| condition=IfCondition(LaunchConfiguration("use_concat_filter")), | ||

| ) | ||

|

|

||

| return [container, concat_loader] | ||

|

|

||

|

|

||

| def generate_launch_description(): | ||

|

|

||

| launch_arguments = [] | ||

|

|

||

| def add_launch_arg(name: str, default_value=None): | ||

| launch_arguments.append(DeclareLaunchArgument(name, default_value=default_value)) | ||

|

|

||

| add_launch_arg("base_frame", "base_link") | ||

| add_launch_arg("use_multithread", "False") | ||

| add_launch_arg("use_intra_process", "False") | ||

| add_launch_arg("use_pointcloud_container", "False") | ||

| add_launch_arg("container_name", "pointcloud_preprocessor_container") | ||

|

|

||

| set_container_executable = SetLaunchConfiguration( | ||

| "container_executable", | ||

| "component_container", | ||

| condition=UnlessCondition(LaunchConfiguration("use_multithread")), | ||

| ) | ||

|

|

||

| set_container_mt_executable = SetLaunchConfiguration( | ||

| "container_executable", | ||

| "component_container_mt", | ||

| condition=IfCondition(LaunchConfiguration("use_multithread")), | ||

| ) | ||

|

|

||

| return launch.LaunchDescription( | ||

| launch_arguments | ||

| + [set_container_executable, set_container_mt_executable] | ||

| + [OpaqueFunction(function=launch_setup)] | ||

| ) |

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,48 @@ | ||

| <?xml version="1.0" encoding="UTF-8"?> | ||

| <launch> | ||

| <!-- Essential parameters --> | ||

| <arg name="vehicle_model" description="vehicle model name"/> | ||

| <arg name="use_pointcloud_container" default="true" description="launch pointcloud container"/> | ||

| <arg name="pointcloud_container_name" default="pointcloud_container"/> | ||

|

|

||

| <!-- Optional parameters --> | ||

| <arg name="launch_perception" default="true" description="launch perception"/> | ||

| <arg name="use_sim_time" default="false" description="use_sim_time"/> | ||

| <arg name="system_run_mode" default="online" description="run mode in system"/> | ||

| <arg name="rviz" default="true" description="launch rviz"/> | ||

| <arg name="rviz_config" default="$(find-pkg-share perception_benchmark_tool)/rviz/waymo.rviz" description="rviz config"/> | ||

|

|

||

| <!-- Global parameters --> | ||

| <group scoped="false"> | ||

| <include file="$(find-pkg-share global_parameter_loader)/launch/global_params.launch.py"> | ||

| <arg name="use_sim_time" value="$(var use_sim_time)"/> | ||

| <arg name="vehicle_model" value="$(var vehicle_model)"/> | ||

| </include> | ||

| </group> | ||

|

|

||

| <include file="$(find-pkg-share perception_benchmark_tool)/launch/pointcloud_preprocessor.launch.py"> | ||

| <arg name="base_frame" value="base_link"/> | ||

| <arg name="use_intra_process" value="true"/> | ||

| <arg name="use_multithread" value="true"/> | ||

| <arg name="use_pointcloud_container" value="false"/> | ||

| <arg name="container_name" value="$(var pointcloud_container_name)"/> | ||

| <arg name="use_concat_filter" value="true"/> | ||

| </include> | ||

|

|

||

| <!-- Perception --> | ||

| <group if="$(var launch_perception)"> | ||

| <include file="$(find-pkg-share tier4_perception_launch)/launch/perception.launch.xml"> | ||

| <arg name="mode" value="lidar"/> | ||

| <arg name="vehicle_param_file" value="$(find-pkg-share $(var vehicle_model)_description)/config/vehicle_info.param.yaml"/> | ||

| <arg name="use_pointcloud_container" value="$(var use_pointcloud_container)"/> | ||

| <arg name="pointcloud_container_name" value="$(var pointcloud_container_name)"/> | ||

| <arg name="use_vector_map" value="false"/> | ||

| <arg name="use_pointcloud_map" value="false"/> | ||

| <arg name="use_object_filter" value="false"/> | ||

| <arg name="image_number" value="0"/> | ||

| </include> | ||

| </group> | ||

|

|

||

| <!-- Tools --> | ||

| <node pkg="rviz2" exec="rviz2" name="rviz2" output="screen" args="-d $(find-pkg-share perception_benchmark_tool)/rviz/waymo.rviz"/> | ||

| </launch> |

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,31 @@ | ||

| <?xml version="1.0"?> | ||

| <?xml-model href="http://download.ros.org/schema/package_format3.xsd" schematypens="http://www.w3.org/2001/XMLSchema"?> | ||

| <package format="3"> | ||

| <name>perception_benchmark_tool</name> | ||

| <version>0.0.0</version> | ||

| <description>This package benchmark Autoware perception stack on Waymo dataset</description> | ||

| <maintainer email="kcolak@leodrive.ai">Kaan Colak</maintainer> | ||

| <license>Apache License 2.0</license> | ||

|

|

||

| <test_depend>ament_copyright</test_depend> | ||

| <test_depend>ament_flake8</test_depend> | ||

| <test_depend>ament_pep257</test_depend> | ||

| <test_depend>python3-pytest</test_depend> | ||

|

|

||

| <exec_depend>autoware_auto_perception_msgs</exec_depend> | ||

| <exec_depend>global_parameter_loader</exec_depend> | ||

| <exec_depend>rviz2</exec_depend> | ||

| <exec_depend>tier4_perception_msgs</exec_depend> | ||

|

|

||

| <depend>cv_bridge</depend> | ||

| <depend>geometry_msgs</depend> | ||

| <depend>std_srvs</depend> | ||

| <depend>tf2</depend> | ||

| <depend>tf2_geometry_msgs</depend> | ||

| <depend>tf2_ros</depend> | ||

| <depend>tf_transformations</depend> | ||

|

|

||

| <export> | ||

| <build_type>ament_python</build_type> | ||

| </export> | ||

| </package> |

Empty file.

Oops, something went wrong.