T265 pose tracking drift for slow movements #3970

Comments

|

Hi @rghl3 , |

|

Hi @SlavikLiman ,

To improve the confidence level, do you have any suggestions, such as adding features to the scene or tuning specific parameters? Which parameters does the confidence level depend on?

By "enough motion", do you mean faster movements (thereby exciting the IMU) or noticeable changes in image features?

For our application, we prefer to use a stand-alone camera. But if that is the only option, then we might consider adding a wheel encoder. |

|

@rghl3 ,

The confidence level depends on various parameters, such as the lighting of the scene, the number of features, etc. From the video, it seems that your environment is rich enough in features. The T265 should reach a high confidence level in order to deliver high-quality tracking.

This can be achieved by moving the T265 (probably at a slightly higher speed than your rover moves). Once high confidence is reached, you should be able to move at your original speed.

As I previously stated, we highly recommend providing wheel odometry in order to get the best tracking quality. |
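The advice above (reach high confidence first, then drop back to rover speed) could be gated in software. The following is a minimal sketch, not an official procedure. It assumes the confidence values are the integer levels exposed in the librealsense pose data (0 = failed, 1 = low, 2 = medium, 3 = high). The helper name `first_stable_high` and the `required_consecutive` threshold are hypothetical and would need tuning for a real rover.

```python
from typing import Iterable, Optional

# Confidence levels as exposed in the librealsense pose data
# (tracker_confidence: 0 = failed, 1 = low, 2 = medium, 3 = high).
CONFIDENCE_LABELS = {0: "failed", 1: "low", 2: "medium", 3: "high"}
HIGH_CONFIDENCE = 3


def first_stable_high(
    confidences: Iterable[int],
    required_consecutive: int = 30,  # hypothetical threshold, tune per setup
) -> Optional[int]:
    """Return the index of the sample at which confidence has been high for
    `required_consecutive` samples in a row, or None if that never happens."""
    streak = 0
    for index, level in enumerate(confidences):
        # Any sample below "high" resets the streak.
        streak = streak + 1 if level == HIGH_CONFIDENCE else 0
        if streak >= required_consecutive:
            return index
    return None


if __name__ == "__main__":
    # Simulated confidence samples; on hardware these would come from the
    # pose stream (for example via pyrealsense2 pose data).
    samples = [1, 1, 2, 2, 3, 3, 3, 3]
    print(first_stable_high(samples, required_consecutive=3))
```

Requiring a run of consecutive high-confidence samples, rather than a single one, avoids switching to the slow speed on a momentary spike.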

|

Hi @SlavikLiman ,

What is the ideal frequency for wheel encoder input to the T265? Also, from the ROS wrapper for librealsense, it seems that only the linear z velocity is used. |

|

Hi @rghl3 , |

|

Hi @SlavikLiman , |

|

Hi @rghl3 , |

|

Hi @SlavikLiman , Since I am using the ROS convention, I have considered the wheel odometry and the pose input to be in the standard convention, as a result the axis-angle representation. |

|

Hi @rghl3 ,

|

|

Hi @SlavikLiman ,

Are any log files needed to reproduce the results on your end? Also, I observed more undershoots (i.e. -0.5 m drift) than overshoots (+0.5 m drift). |

|

Hi @rghl3 ,

Please make sure that you have a high confidence (green trace) and repeat your experiment. |

|

Hi @SlavikLiman , |

|

Hi @rghl3 ,

|

|

Hi @SlavikLiman , |

|

Hi @rghl3 , one more question before we point you to a return/exchange to check whether it is a HW issue. Thanks |

|

Hi @RealSenseCustomerSupport , |

|

Hi @SlavikLiman and @RealSenseCustomerSupport, |

|

@rghl3 , |

|

Hi @SlavikLiman,

We tested the camera under the conditions described above. We obtained the claimed accuracy (1% drift) in 60% of the runs. However, the drift rose to around 5% in the remaining runs, so the results are inconsistent.

What are the suggested requirements (such as lighting and features) for achieving the above accuracy consistently? |
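For readers who want to reproduce this kind of comparison, here is a minimal sketch of how per-run drift and run-to-run consistency could be computed. It assumes that "drift" means the end-point position error expressed as a percentage of the distance traveled. The thread does not define the metric, so this definition and the function names `drift_percent` and `fraction_within` are assumptions, and the sample values are illustrative only.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def drift_percent(reported_end: Point, true_end: Point, path_length_m: float) -> float:
    """End-point error as a percentage of the distance traveled (assumed metric)."""
    if path_length_m <= 0:
        raise ValueError("path_length_m must be positive")
    error_m = math.dist(reported_end, true_end)  # Euclidean distance, Python 3.8+
    return 100.0 * error_m / path_length_m


def fraction_within(drifts_percent: Sequence[float], target_percent: float = 1.0) -> float:
    """Fraction of runs whose drift is at or below the target."""
    if not drifts_percent:
        raise ValueError("at least one run is required")
    hits = sum(1 for d in drifts_percent if d <= target_percent)
    return hits / len(drifts_percent)


if __name__ == "__main__":
    # Illustrative numbers only, not measurements from the thread.
    runs = [0.8, 0.9, 1.0, 5.0, 4.8]
    print(f"{fraction_within(runs):.0%} of runs within 1% drift")
    # A 6 m forward move reported as 0.5 m, as in the issue description.
    print(f"{drift_percent((0.0, 0.0, 0.5), (0.0, 0.0, 6.0), 6.0):.1f}% drift")
```

Logging the per-run drift together with the lighting and scene conditions of each run would make it easier to see which conditions correlate with the poorer results.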

|

Hi @rghl3 , |

|

Hi @SlavikLiman, |

|

Hi @SlavikLiman,

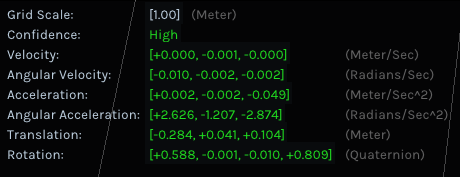

In continuation to the above, I get the following errors for the untracked movement.

I am running the T265 and D415 simultaneously. The SLAM error speed occurs when I rotate the camera, followed by an out of frame resources error and then untracked movements. Any ideas on what might be going wrong? |

|

Thank you for highlighting the drift issues occurring with the T265 tracking system. We have moved our focus to our next generation of products and consequently, we will not be addressing this issue in the T265. |

Issue Description

Hi,

I have connected the camera to a laptop. When I move the camera slowly in the forward direction, the pose tracking doesn't follow the movement (it stays almost stationary). After moving about 6 meters, the 3D tracking shows about 0.5 meters. Here is the link for the video. Please have a look at it. (Grid size is 1 m.)

As I am using it on a rover platform moving at a slow speed, these untracked pose measurements result in accumulated drifts over long runs.
