

feat(dashboard): zero line when no data found (#1480)

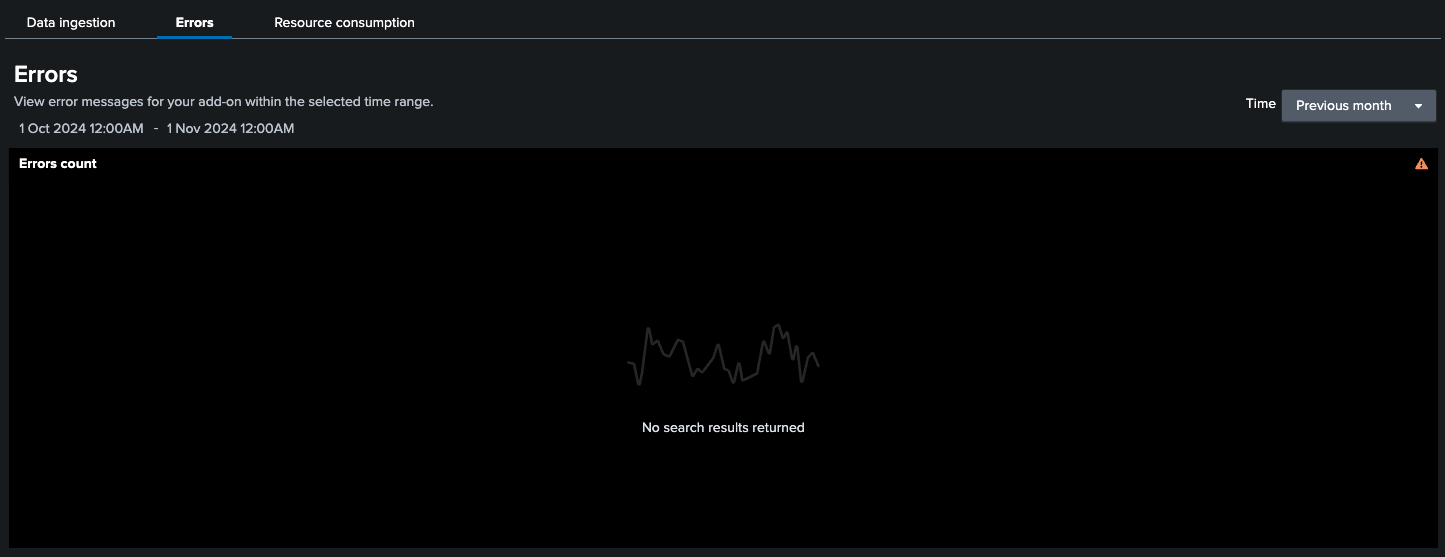

**Issue number:** https://splunk.atlassian.net/browse/ADDON-76195

### PR Type

**What kind of change does this PR introduce?**

* [x] Feature
* [ ] Bug Fix
* [ ] Refactoring (no functional or API changes)
* [ ] Documentation Update
* [ ] Maintenance (dependency updates, CI, etc.)

## Summary

A zero line is displayed when no data is found, for all dashboard charts except those in the Resource consumption tab.

Pros:

- A chart with a zero line is displayed instead of the "No data found" message.

Cons:

- Generating the default zero data requires extra computation, so it adds some cost. Compared to regular search operations this cost should be negligible: even for the whole previous year, one event is generated every 5 minutes, which takes about 0.12 s locally. The query is also cached for 10 minutes, so unless real-time queries are used, page refreshes should not trigger additional computation.

In short: compared to the data searches themselves, this operation should not be a problem.

Some information about Smart Sources (https://splunkui.splunk.com/Packages/dashboard-docs/Smartsources):

```
enableSmartSourceDS: true,
```

This feature lets later searches reference already finished search jobs and their results. Here it is used to check whether the table data search returned any results: if it did not, default values are applied; if it did, the subsequent code is ignored.

### Changes

Data charts that previously displayed the "No data found" message now display a chart with a zero line, representing the absence of events.

### User experience

Please describe the user experience before and after this change. Screenshots are welcome for additional context.
#### Overview before changes:

<img width="1453" alt="Screenshot 2024-11-24 at 22 23 02" src="https://github.com/user-attachments/assets/def2bd45-a0a1-4697-a03a-80c20d13a6b4">

#### Overview after changes:

<img width="1466" alt="Screenshot 2024-11-24 at 22 19 24" src="https://github.com/user-attachments/assets/6c7a408f-fdb5-428e-b844-19fed408a630">

#### Data Ingestion tab before changes:

#### Data Ingestion tab after changes:

<img width="1470" alt="Screenshot 2024-11-24 at 22 19 53" src="https://github.com/user-attachments/assets/882c2bcc-5625-4388-934b-b70bc250951f">

#### Error tab before changes:

#### Error tab after changes:

<img width="1471" alt="Screenshot 2024-11-24 at 22 21 08" src="https://github.com/user-attachments/assets/9c940c09-8aea-4217-acb6-b6c5e5fab8b6">

#### Error tables are filled with zeros when data volume is found but no data is found for the number of events:

<img width="1468" alt="Screenshot 2025-01-20 at 11 05 49" src="https://github.com/user-attachments/assets/d1bc0462-4039-46f7-8ce2-52ea605f79a4" />

#### Resource consumption tab after changes remains the same:

<img width="1472" alt="Screenshot 2024-11-24 at 22 21 44" src="https://github.com/user-attachments/assets/def8af22-4241-49e9-afeb-1ad1ace5c511">

## Checklist

If an item doesn't apply to your changes, leave it unchecked.

* [ ] I have performed a self-review of this change according to the [development guidelines](https://splunk.github.io/addonfactory-ucc-generator/contributing/#development-guidelines)
* [ ] Tests have been added/modified to cover the changes [(testing doc)](https://splunk.github.io/addonfactory-ucc-generator/contributing/#build-and-test)
* [ ] Changes are documented
* [x] PR title and description follow the [contributing principles](https://splunk.github.io/addonfactory-ucc-generator/contributing/#pull-requests)
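The zero-line fallback and the cost estimate from the Summary can be modeled in a few lines. This is a hypothetical TypeScript sketch, not the PR's implementation: the actual change lives in Splunk dashboard searches using Smart Sources, which are not shown here. The names `Point`, `zeroSeries`, `withZeroFallback`, and the `FIVE_MINUTES` bucket span are assumptions chosen to match the description. The sketch builds a zero-valued series and returns it only when the primary results are empty, mirroring the check described for `enableSmartSourceDS`.

```typescript
// Hypothetical model only: the PR implements this with Splunk dashboard
// searches and Smart Sources, not with TypeScript.
interface Point {
  time: number; // bucket start, in epoch seconds
  value: number; // event count for the bucket
}

// Assumed bucket span, matching the "one event every 5 minutes" estimate.
const FIVE_MINUTES = 5 * 60;

// Build one zero-valued point per bucket in [start, end): a flat "zero line".
function zeroSeries(start: number, end: number, span = FIVE_MINUTES): Point[] {
  const points: Point[] = [];
  for (let t = start; t < end; t += span) {
    points.push({ time: t, value: 0 });
  }
  return points;
}

// Keep the real results if the primary search returned any rows;
// otherwise fall back to the zero line (the "No data found" case).
function withZeroFallback(results: Point[], start: number, end: number): Point[] {
  return results.length > 0 ? results : zeroSeries(start, end);
}

// Usage: an empty result over one year becomes a year of zero points.
const YEAR = 365 * 24 * 60 * 60;
console.log(withZeroFallback([], 0, YEAR).length); // 105120
```

For a full year of 5-minute buckets this yields 365 × 24 × 12 = 105,120 zero points, which is the scale of data behind the "negligible cost" argument in the Cons list.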


1 parent 1d55507

commit d1cad41

13 changed files changed, with 253 additions and 32 deletions.
