
Compiler spends all available memory when large array is initialized with lazy_static #93215

Comments

In fairness to rustc, that array is 14.9 GiB. If you only have 8 GB of memory, you'll never be able to run this program even if you do manage to compile it. And if you do have enough memory to get through whatever's going on here, it looks to me like the incremental compilation system isn't prepared to handle this: (this is a local build because I want debug symbols) All that said, I feel like this shouldn't be so memory-intensive. It looks like the memory consumption appears to be from the MIR interpreter's
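A quick check of the 14.9 figure, assuming the OP's array has the dimensions of the minimized example later in this thread (`[[u128; 10_000]; 100_000]`) and a 64-bit target. `size_of` is computed from the type's layout alone, so this compiles without allocating anything of that size:

```rust
use std::mem::size_of;

// Dimensions taken from the minimized example in this thread (assumed to match the OP's).
const ROWS: usize = 100_000;
const COLS: usize = 10_000;

/// Size in bytes of `[[u128; COLS]; ROWS]`, derived from the type's layout only.
/// Assumes a 64-bit target, where this value fits in `usize`.
const ARRAY_BYTES: usize = size_of::<[[u128; COLS]; ROWS]>();

/// The same size expressed in GiB (2^30 bytes).
fn array_gib() -> f64 {
    ARRAY_BYTES as f64 / (1u64 << 30) as f64
}
```

16 × 10^9 bytes is 16 GB in decimal units, or about 14.9 GiB, which is why both "16GB" and "14.9" appear in the thread.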

So I believe what you have here is a very large We could maybe reduce the cost of some of that tracking, but it's still not great overall. Your executable would likely also need to be 14.9GiB in size, because the What you may want to do instead is That results in successful compilation and this runtime message (on the playground):
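The specific alternative suggested in that comment was lost in extraction. As one common option (an assumption, not necessarily what was suggested), the data can be allocated on the heap at runtime so nothing of that size goes through const evaluation. `Grid` is a hypothetical illustration:

```rust
/// A hypothetical heap-allocated, row-major 2D grid of `u128`.
/// Nothing here is evaluated at compile time; memory is requested at runtime.
pub struct Grid {
    rows: usize,
    cols: usize,
    data: Vec<u128>,
}

impl Grid {
    /// Allocates `rows * cols` zeroed cells on the heap.
    pub fn zeroed(rows: usize, cols: usize) -> Self {
        let len = rows.checked_mul(cols).expect("grid size overflows usize");
        Grid { rows, cols, data: vec![0; len] }
    }

    /// Row-major offset of `(r, c)`; panics if out of bounds.
    fn index(&self, r: usize, c: usize) -> usize {
        assert!(r < self.rows && c < self.cols, "index out of bounds");
        r * self.cols + c
    }

    pub fn get(&self, r: usize, c: usize) -> u128 {
        self.data[self.index(r, c)]
    }

    pub fn set(&mut self, r: usize, c: usize, value: u128) {
        let i = self.index(r, c);
        self.data[i] = value;
    }
}
```

With the thread's dimensions, `Grid::zeroed(100_000, 10_000)` requests about 14.9 GiB when the program runs, so a machine without that much memory fails at runtime instead of rustc exhausting memory at compile time.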

Ah, the allocations are all happening along a code path that calls Peak memory of the build is 44.8 GB, btw.

Yea, there are a few extra copies of values happening that we could probably avoid, but we always need at least two at some point during CTFE. It may help to implement a "deinit source on move" scheme. Basically CTFE treats
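To illustrate what a "deinit source on move" scheme could buy, here is a toy model (hypothetical `Frame`; not how rustc's CTFE represents locals) in which a copy leaves two live buffers, while a move transfers the buffer and marks the source uninitialized:

```rust
/// A toy interpreter frame whose locals own their bytes.
/// `None` stands for "uninitialized".
pub struct Frame {
    locals: Vec<Option<Vec<u8>>>,
}

impl Frame {
    pub fn new(n: usize) -> Self {
        Frame { locals: vec![None; n] }
    }

    pub fn write(&mut self, i: usize, bytes: Vec<u8>) {
        self.locals[i] = Some(bytes);
    }

    pub fn is_init(&self, i: usize) -> bool {
        self.locals[i].is_some()
    }

    /// Copy semantics: the source stays initialized, so two full
    /// copies of the value are live afterwards.
    pub fn copy_local(&mut self, src: usize, dst: usize) {
        let bytes = self.locals[src].clone();
        self.locals[dst] = bytes;
    }

    /// "Deinit source on move": take the buffer out of `src`, leaving it
    /// uninitialized, so the value is not duplicated.
    pub fn move_local(&mut self, src: usize, dst: usize) {
        let bytes = self.locals[src].take();
        self.locals[dst] = bytes;
    }

    /// Total bytes held by all initialized locals.
    pub fn live_bytes(&self) -> usize {
        self.locals.iter().flatten().map(|v| v.len()).sum()
    }
}
```

In this toy the move is free because Rust transfers `Vec` ownership; in real CTFE the bytes still travel between allocations, so the gain would come from being able to release the source's storage early.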

It's likely something like The

OP's example is insufficiently minimized. A build of this program peaks at 33.6 GB:

```rust
const ROWS: usize = 100000;
const COLS: usize = 10000;

static TWODARRAY: [[u128; COLS]; ROWS] = [[0; COLS]; ROWS];

fn main() {}
```

Perhaps that makes sense, if we're doing a copy at all of this huge array, because that's twice the size of the array. Right?

I do have more than 8 GiB of memory, but rustc was using 100+ GiB, which exceeds the array size by far. And even if it didn't, I would hope to be able to allocate large arrays without the compiler having to reproduce the allocation. I often compile programs locally and then deploy them on servers with much more RAM, more CPU cores, etc.

I neglected to note in the issue that I am aware of the possibility of heap-allocating the array. In this case, however, I was explicitly experimenting with allocating the large array statically, akin to a C declaration of

It seems like replacing the InitMask (which is basically a BitSet, not sure why we have a distinct impl of it for MIR allocations?) with something like the recently-added IntervalSet may make sense -- presumably it's pretty rare for a truly random pattern of initialization to occur.
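A minimal sketch of the interval idea, assuming a hypothetical `IntervalInitMask` that stores initialized byte ranges as sorted, non-overlapping half-open ranges (this is not the API of rustc's `IntervalSet`). A fully initialized allocation becomes a single range, whereas a plain bitset for a 16 × 10^9-byte allocation needs 2 × 10^9 bytes of mask:

```rust
use std::ops::Range;

/// Initialized bytes as sorted, non-overlapping, non-adjacent half-open
/// ranges; every byte not covered by a range is uninitialized.
#[derive(Default)]
pub struct IntervalInitMask {
    ranges: Vec<Range<u64>>,
}

impl IntervalInitMask {
    /// Marks `r` as initialized, merging with overlapping or adjacent ranges.
    pub fn set_init(&mut self, r: Range<u64>) {
        if r.start >= r.end {
            return;
        }
        // First stored range that overlaps or touches `r` on the left.
        let lo = self.ranges.partition_point(|x| x.end < r.start);
        // First stored range that starts strictly after `r` ends.
        let hi = self.ranges.partition_point(|x| x.start <= r.end);
        let mut merged = r;
        if lo < hi {
            merged.start = merged.start.min(self.ranges[lo].start);
            merged.end = merged.end.max(self.ranges[hi - 1].end);
        }
        // Replace all touched ranges (possibly none) with the merged one.
        self.ranges.splice(lo..hi, std::iter::once(merged));
    }

    /// Whether byte `i` is initialized, via binary search in O(log n).
    pub fn is_init(&self, i: u64) -> bool {
        let idx = self.ranges.partition_point(|x| x.end <= i);
        self.ranges.get(idx).map_or(false, |x| x.start <= i)
    }

    pub fn range_count(&self) -> usize {
        self.ranges.len()
    }
}
```

The cost scales with the number of init/uninit transitions rather than the allocation size, which matches the observation that truly random initialization patterns should be rare.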


@Mark-Simulacrum I believe something like

To what end? Accessing it won't be more efficient than a pointer to a heap area - passing a Putting it in a Out of curiosity, I tried something similar (tho probably not sound on non-trivially-copyable types, heh) in C++ (using

That's worse than the OP example (in terms of initialization state); I'd say this is closer:

```rust
pub static BIG: Option<[u128; 1 << 30]> = None;
```

For Surprisingly, this is the best I can get to compile on godbolt even fully uninitialized:

```rust
use std::mem::MaybeUninit;

pub static BIG_UNINIT: MaybeUninit<[u128; 1 << 27]> = MaybeUninit::uninit();
```

(though you'll want to replace

@Mark-Simulacrum I think I was mistaken to point to

It looks to me like there is currently

On godbolt I see "Compiler returned: 137", which means the compiler process was killed with SIGKILL (137 = 128 + 9). The last build that works peaks at 2.1 GB, and of course the next power of 2 is 4.1 GB. I think this is supposed to be unsurprising, because const eval has to construct the const. I suspect there's a (quite generous) 4 GB memory limit in Godbolt. So perhaps this is "as expected, but unfortunate"?

Wowee, that's significantly worse. Can you share the

That should actually be the original code in the report. The line from

Yea, we could lazily grow it and treat anything beyond the size as uninitialized.
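A minimal sketch of lazily growing the mask, using a hypothetical `LazyBits` bitset that only allocates words up to the highest bit ever set and reports every index past its storage as uninitialized:

```rust
/// A bitset that only materializes words up to the highest bit ever set.
/// Any index beyond the stored words reads as unset (uninitialized).
#[derive(Default)]
pub struct LazyBits {
    words: Vec<u64>,
}

impl LazyBits {
    pub fn set(&mut self, i: usize) {
        let w = i / 64;
        if w >= self.words.len() {
            // Grow only now, and only up to the word containing `i`.
            self.words.resize(w + 1, 0);
        }
        self.words[w] |= 1u64 << (i % 64);
    }

    pub fn get(&self, i: usize) -> bool {
        // Missing words mean the bit was never set.
        self.words
            .get(i / 64)
            .map_or(false, |word| word & (1u64 << (i % 64)) != 0)
    }

    pub fn materialized_words(&self) -> usize {
        self.words.len()
    }
}
```

A fully uninitialized allocation then costs no mask storage at all, regardless of its size.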

Basically, we have If it is possible, without perf loss for typical CTFE, to use the same data structure for both of these cases, that would be nice. :D But I think the problem is that testing if bit

If interval lengths were replaced with prefix sums of their lengths, testing whether the i-th bit is set could be done in logarithmic time.
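A sketch of that suggestion, assuming a hypothetical `RleMask` whose runs alternate between initialized and uninitialized. Storing each run's cumulative end offset (a prefix sum of run lengths) lets a binary search find the run containing byte `i`, and the run's parity gives its state:

```rust
/// Run-length-encoded init mask. Runs alternate between initialized and
/// uninitialized, starting with `first_init`. `ends[k]` is the exclusive
/// end offset of run k, i.e. the prefix sum of the first k + 1 run lengths.
pub struct RleMask {
    first_init: bool,
    ends: Vec<u64>, // non-decreasing
}

impl RleMask {
    /// Converts plain run lengths into their prefix-sum form.
    pub fn from_runs(first_init: bool, lengths: &[u64]) -> Self {
        let mut total = 0;
        let ends = lengths
            .iter()
            .map(|&len| {
                total += len;
                total
            })
            .collect();
        RleMask { first_init, ends }
    }

    /// Whether byte `i` is initialized, in O(log runs).
    pub fn is_init(&self, i: u64) -> bool {
        // Index of the first run that ends after `i`, i.e. the run containing it.
        let k = self.ends.partition_point(|&end| end <= i);
        assert!(k < self.ends.len(), "index out of bounds");
        // Even-numbered runs share the first run's state; odd ones are flipped.
        self.first_init ^ (k % 2 == 1)
    }
}
```

With plain run lengths, the same query needs a linear scan summing lengths until reaching `i`.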

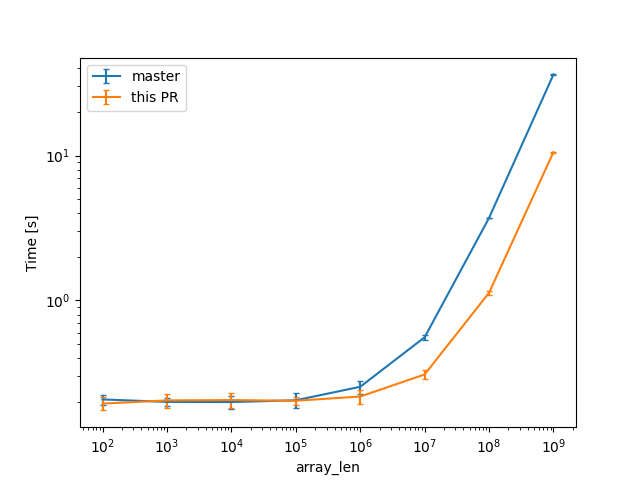

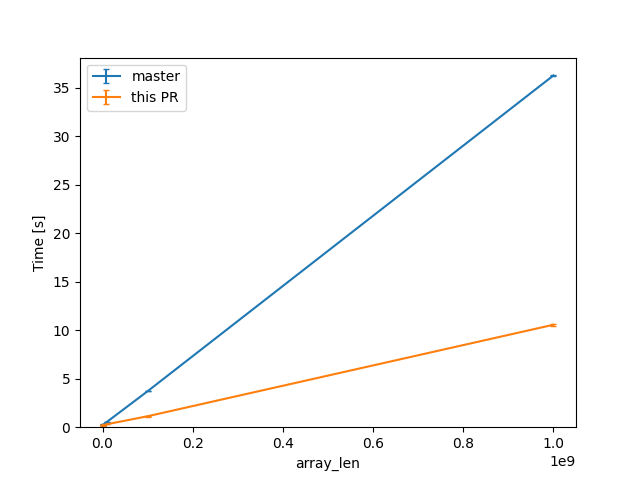

CTFE interning: don't walk allocations that don't need it

The interning of const allocations visits the mplace looking for references to intern. Walking big aggregates like big static arrays can be costly, so we only do it if the allocation we're interning contains references or interior mutability.

Walking ZSTs was avoided before, and this optimization is now applied to cases where there are no references/relocations either.

---

While initially looking at this in the context of rust-lang#93215, I've been testing with smaller allocations than the 16GB one in that issue, and with different init/uninit patterns (esp. via padding).

In that example, by default, `eval_to_allocation_raw` is the heaviest query followed by `incr_comp_serialize_result_cache`. So I'll show numbers when incremental compilation is disabled, to focus on the const allocations themselves at 95% of the compilation time, at bigger array sizes on these minimal examples like `static ARRAY: [u64; LEN] = [0; LEN];`.

That is a close construction to parts of the `ctfe-stress-test-5` benchmark, which has const allocations in the megabytes, while most crates usually have way smaller ones. This PR will have the most impact in these situations, as the walk during the interning starts to dominate the runtime. Unicode crates (some of which are present in our benchmarks) like `ucd`, `encoding_rs`, etc. come to mind as having bigger than usual allocations as well, because of big tables of code points (in the hundreds of KB, so still an order of magnitude or 2 less than the stress test).

In a check build, for a single static array shown above, from 100 to 10^9 u64s (for lengths in powers of ten), the constant factors are lowered:

(log scales for easier comparisons)

(linear scale for absolute diff at higher Ns)

For one of the alternatives of that issue

```rust
const ROWS: usize = 100_000;
const COLS: usize = 10_000;

static TWODARRAY: [[u128; COLS]; ROWS] = [[0; COLS]; ROWS];
```

we can see a similar reduction of around 3x (from 38s to 12s or so).

For the same size, the slowest case IIRC is when there are uninitialized bytes, e.g. via padding

```rust
const ROWS: usize = 100_000;
const COLS: usize = 10_000;

static TWODARRAY: [[(u64, u8); COLS]; ROWS] = [[(0, 0); COLS]; ROWS];
```

then interning/walking does not dominate anymore (but means there is likely still some interesting work left to do here). Compile times in this case rise up quite a bit, and avoiding interning walks has less impact: around 23%, from 730s on master to 568s with this PR.
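The PR's fast path can be illustrated with a toy model (hypothetical `Alloc` and `intern`; rustc's interner walks the value guided by its type, not a relocation list). Allocations without relocations or interior mutability are marked interned without visiting their bytes, so a huge zero-filled array costs nothing here:

```rust
/// A toy const allocation (not rustc's `Allocation`).
pub struct Alloc {
    pub bytes: Vec<u8>,
    /// (offset, target allocation index) for each pointer stored in `bytes`.
    pub relocations: Vec<(usize, usize)>,
    pub has_interior_mut: bool,
}

/// Interns `root` and everything reachable from it, returning how many
/// bytes had to be walked along the slow path.
pub fn intern(allocs: &[Alloc], root: usize, interned: &mut [bool]) -> usize {
    let mut walked_bytes = 0;
    let mut stack = vec![root];
    while let Some(id) = stack.pop() {
        if interned[id] {
            continue;
        }
        interned[id] = true;
        let alloc = &allocs[id];
        if alloc.relocations.is_empty() && !alloc.has_interior_mut {
            // Fast path: nothing inside can point at something needing interning.
            continue;
        }
        // Slow path: stands in for a walk whose cost grows with the value's size.
        walked_bytes += alloc.bytes.len();
        for &(_offset, target) in &alloc.relocations {
            stack.push(target);
        }
    }
    walked_bytes
}
```

The padding case in the PR is not modeled here; it shows that once the walk is skipped, other costs (such as tracking uninitialized bytes) dominate.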

Make init mask lazy for fully initialized/uninitialized const allocations

There are a few optimization opportunities in the `InitMask` and related const `Allocation`s (e.g. by taking advantage of the fact that it's a bitset that represents initialization, which is often entirely initialized or uninitialized in a single call, or gradually built up, etc.). There are a few overwrites to the same state, multiple writes in a row to the same indices, the RLE scheme for `memcpy` doesn't always compress, etc.

Here, we start with:

- avoiding materializing the bitset's blocks if the allocation is fully initialized/uninitialized
- deallocating blocks when fully overwriting, including when participating in `memcpy`s
- taking care of the FIXME about allocating blocks of 0s before overwriting them to the expected value
- expanding unit test coverage of the init mask

This should be most visible on benchmarks and crates where const allocations dominate the runtime (like `ctfe-stress-5` of course), but I was especially looking at the worst cases from rust-lang#93215.

This first change allows the majority of `set_range` calls to stay with a lazy init mask when bootstrapping rustc (not that the init mask is a big part of the process in CPU time or memory usage).

r? `@oli-obk`

I have another in-progress branch where I'll switch the singular initialized/uninitialized value to a watermark, recording the point after which everything is uninitialized. That will take care of cases where full initialization is monotonic and done in multiple steps (e.g. an array of a type without padding), which should then allow the vast majority of const allocations' init masks to stay lazy during bootstrapping (though interestingly I've seen such gradual initialization in both left-to-right and right-to-left directions, and I don't think a single watermark can handle both).
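A minimal sketch of the lazy init mask from the first two bullets, with simplifications: the hypothetical `InitMask` enum keeps one `bool` for the whole allocation until a partial write forces it to materialize, a full overwrite drops the storage again, and per-byte `bool`s stand in for rustc's bit-packed blocks:

```rust
use std::ops::Range;

pub enum InitMask {
    /// The whole allocation shares one state; no per-byte storage.
    Lazy { len: usize, init: bool },
    /// Per-byte state, created only when a partial write needs it.
    Materialized(Vec<bool>),
}

impl InitMask {
    pub fn new(len: usize, init: bool) -> Self {
        InitMask::Lazy { len, init }
    }

    pub fn len(&self) -> usize {
        match self {
            InitMask::Lazy { len, .. } => *len,
            InitMask::Materialized(bits) => bits.len(),
        }
    }

    pub fn set_range(&mut self, range: Range<usize>, init: bool) {
        let len = self.len();
        assert!(range.start <= range.end && range.end <= len, "range out of bounds");
        if range.is_empty() {
            return;
        }
        if range.start == 0 && range.end == len {
            // Full overwrite: drop any materialized storage and go lazy again.
            *self = InitMask::Lazy { len, init };
            return;
        }
        match self {
            // Writing the state the whole mask already has changes nothing.
            InitMask::Lazy { init: current, .. } if *current == init => {}
            InitMask::Lazy { init: current, .. } => {
                let current = *current;
                let mut bits = vec![current; len];
                bits[range].fill(init);
                *self = InitMask::Materialized(bits);
            }
            InitMask::Materialized(bits) => bits[range].fill(init),
        }
    }

    pub fn is_init(&self, i: usize) -> bool {
        assert!(i < self.len(), "index out of bounds");
        match self {
            InitMask::Lazy { init, .. } => *init,
            InitMask::Materialized(bits) => bits[i],
        }
    }

    pub fn is_lazy(&self) -> bool {
        matches!(self, InitMask::Lazy { .. })
    }
}
```

The watermark described in the last paragraph would generalize `Lazy` to "initialized up to offset N", covering monotonic left-to-right initialization without materializing.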

I tried this code:

I expected it to compile successfully.

Instead, the compiler spent all available RAM and swap, and I had to kill it. From

top:

Meta

rustc --version --verbose: