
convenient self-profile option? #50780

Excluding the reuse part, isn't this what -Ztime-passes is for? I've been told that profiling queries are meant to replace -Ztime-passes, but when I followed the instructions at https://forge.rust-lang.org/profile-queries.html I couldn't get it to work -- it produced empty files. Here are some ways that I think -Ztime-passes could be improved:

A lot of improvements could be made by recording data and then having a post-processing step, which could aggregate insignificant buckets, for example. I don't know whether that post-processing should happen within rustc itself or be a separate tool.
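A minimal sketch of such a post-processing step, assuming the timings have already been recorded as flat `(name, milliseconds)` pairs. The function name and the "other" label are hypothetical, not part of rustc. Buckets whose share of the total falls below a relative threshold are folded into one catch-all bucket:

```rust
/// Fold every bucket whose share of the total is below `threshold`
/// (a fraction, e.g. 0.01 for 1%) into a single "other" bucket.
/// Remaining buckets are sorted by descending time; "other" comes last.
fn aggregate_small_buckets(buckets: &[(&str, u64)], threshold: f64) -> Vec<(String, u64)> {
    let total: u64 = buckets.iter().map(|&(_, ms)| ms).sum();
    let mut kept: Vec<(String, u64)> = Vec::new();
    let mut other = 0u64;
    for &(name, ms) in buckets {
        if total > 0 && (ms as f64) / (total as f64) < threshold {
            other += ms; // insignificant: aggregate it
        } else {
            kept.push((name.to_string(), ms));
        }
    }
    kept.sort_by(|a, b| b.1.cmp(&a.1));
    if other > 0 {
        kept.push(("other".to_string(), other));
    }
    kept
}
```

Doing this after recording (rather than while measuring) means the raw data stays fully detailed and the threshold can be changed without re-running the compiler.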

@nnethercote I think that "time-passes" and the profile-queries concepts probably ought to be merged, and renamed to something like I think my preference would be that when we declare queries, we also declare a "phase" or something that is supposed to be used for a coarse-grained dump (and then you could use
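One way to picture "declare a phase alongside each query", sketched under the assumption of a simplified, hypothetical declaration macro (this is not rustc's real query macro, and the query and phase names are illustrative only). Because the phase is written next to the query, the coarse-grained dump can sum per-query timings by phase without a separate lookup table:

```rust
use std::collections::BTreeMap;

/// Coarse phases used for the summary dump (illustrative list).
#[derive(Clone, Copy, Debug, PartialEq, Eq, PartialOrd, Ord)]
enum Phase {
    Parsing,
    TypeChecking,
    BorrowChecking,
    Codegen,
}

/// Hypothetical query-declaration macro: each query names its phase
/// where it is declared, so the categorization cannot drift out of sync.
macro_rules! declare_queries {
    ($($name:ident => $phase:ident,)*) => {
        #[allow(non_camel_case_types)]
        #[derive(Clone, Copy, Debug)]
        enum Query { $($name,)* }

        impl Query {
            fn phase(self) -> Phase {
                match self { $(Query::$name => Phase::$phase,)* }
            }
        }
    };
}

declare_queries! {
    parse_crate => Parsing,
    type_of => TypeChecking,
    typeck_body => TypeChecking,
    borrowck_body => BorrowChecking,
    codegen_unit => Codegen,
}

/// Coarse-grained dump: sum per-query timings (ms) into per-phase totals.
fn totals_by_phase(samples: &[(Query, u64)]) -> BTreeMap<Phase, u64> {
    let mut totals = BTreeMap::new();
    for &(query, ms) in samples {
        *totals.entry(query.phase()).or_insert(0) += ms;
    }
    totals
}
```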

I'd like to work on this.

It would be great if we had a solution that can deal well with parallelism, potentially also showing points of contention. We have the (possibly bitrotted) That being said, I don't think we should try to fix any of the existing solutions; instead we should build a new one. It should support at least a pure text summary format that users can easily collect and paste into bug reports. An additional, more detailed output format that can be easily processed by other tools would be great too.

@nnethercote, are there any lessons to be learned from Firefox's profiling support? Or from the Valgrind-based tools? Also cc the whole @rust-lang/wg-compiler-performance

Apologies for the slow reply.

Text-based output is indeed extremely convenient for pasting into bugs, etc., so I recommend it whenever it's appropriate. The simplest thing to do is just dump out the final text directly from rustc, as -Ztime-passes currently does, but that's inflexible. The alternative is to write data to a file and then have a separate viewing tool.

If the data has a tree-like structure (as the -Ztime-passes data already does), then the ability to expand/collapse subtrees is very useful. Firefox's about:memory is a good example of this. Here's some sample output:

It's all text, so it can be copied and pasted easily. (The lines are Unicode light box-drawing characters U+2500 ─, U+2502 │, U+2514 └ and U+251C ├, which usually render nicely in terminals and text boxes.) But it's also interactive: when viewing it within Firefox you can click on any of the lines that have "++" or "--" to expand or collapse a sub-tree. Also, by default it only expands the sub-trees that exceed a certain threshold; this is good because you can collect arbitrarily detailed data (i.e. as many buckets, sub-buckets, sub-sub-buckets, etc., as you like) without it being overwhelming.

I would recommend using a web browser as your display tool. One possibility is to generate one or more HTML pages directly from rustc, as is done by profile-queries. Another possibility is to generate some other intermediate format (JSON?) and then write a webpage/website that reads it in, as is done by rustc-perf's site. The latter approach might be more flexible.
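To make the about:memory idea concrete, here is a rough sketch, assuming a hypothetical `Node` tree of timings, that renders the tree as plain text with the same box-drawing characters and only expands sub-trees at or above a threshold. The "--" (expanded) and "++" (collapsed) markers loosely imitate about:memory; the interactive click-to-toggle part is out of scope here:

```rust
/// A node in a (hypothetical) tree of timings: a label, its total time in
/// milliseconds, and its sub-buckets.
struct Node {
    name: String,
    ms: u64,
    children: Vec<Node>,
}

impl Node {
    fn new(name: &str, ms: u64, children: Vec<Node>) -> Self {
        Node { name: name.to_string(), ms, children }
    }
}

/// Render the tree as plain text. Sub-trees at or above `threshold_ms` are
/// expanded (marked "--"); smaller ones stay collapsed (marked "++").
fn render(root: &Node, threshold_ms: u64) -> String {
    let mut out = format!("{} ms {}\n", root.ms, root.name);
    render_children(root, threshold_ms, "", &mut out);
    out
}

fn render_children(node: &Node, threshold_ms: u64, prefix: &str, out: &mut String) {
    let count = node.children.len();
    for (i, child) in node.children.iter().enumerate() {
        let is_last = i + 1 == count;
        // U+2514 or U+251C, followed by two U+2500.
        let branch = if is_last { "└──" } else { "├──" };
        let expanded = child.ms >= threshold_ms;
        let marker = match (child.children.is_empty(), expanded) {
            (true, _) => "", // leaf: nothing to expand or collapse
            (false, true) => "-- ",
            (false, false) => "++ ",
        };
        out.push_str(&format!("{prefix}{branch}{} ms {marker}{}\n", child.ms, child.name));
        if expanded {
            // Continue the vertical line (U+2502) only if more siblings follow.
            let next_prefix = format!("{prefix}{}", if is_last { "   " } else { "│  " });
            render_children(child, threshold_ms, &next_prefix, out);
        }
    }
}
```

Since the full tree is kept in memory, a viewer could re-render with a different threshold, which is the property that lets the data be arbitrarily detailed without the default view becoming overwhelming.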

I've got a very basic version of this here. Here's a sample of the output: It might be nice to have a category for "incremental overhead" such as hashing & serializing/deserializing the graph? Perhaps

That looks very cool! Some feedback:

This is awesome work @wesleywiser! 💯 I agree with @michaelwoerister's suggestion that we include I've gone ahead and made it valid Markdown, too, so that it can easily be pasted for nice display =)

Finally, I think we should display the time for each phase as a percentage of the total. Along the same lines, I think it'd be great if, to determine the total time, we did a single measurement from the "outside". This will help us ensure we don't have "leakage", that is, time that is not accounted for. Or at least it will give us an idea of how much leakage there is.
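A sketch of both suggestions together, assuming per-phase timings in milliseconds plus one total measured from the "outside" (the function name and column layout are hypothetical). Each phase is shown as a percentage of the outside total, and the difference is reported as an explicit "unaccounted" row, emitted as a Markdown table so it pastes cleanly into an issue:

```rust
use std::fmt::Write;

/// Render per-phase timings (ms) as a Markdown table. `outside_total_ms` is a
/// single measurement of the whole compilation; whatever the phases do not
/// cover is reported as an explicit "unaccounted" row.
fn markdown_summary(phases: &[(&str, u64)], outside_total_ms: u64) -> String {
    let accounted: u64 = phases.iter().map(|&(_, ms)| ms).sum();
    // If phases overlap or over-count, `accounted` can exceed the outside
    // total; clamp the leakage at zero instead of underflowing.
    let leakage = outside_total_ms.saturating_sub(accounted);
    let pct = |ms: u64| {
        if outside_total_ms == 0 { 0.0 } else { 100.0 * ms as f64 / outside_total_ms as f64 }
    };

    let mut out = String::from("| Phase | Time (ms) | % of total |\n|---|---:|---:|\n");
    for &(name, ms) in phases {
        writeln!(out, "| {} | {} | {:.1} |", name, ms, pct(ms)).unwrap();
    }
    writeln!(out, "| (unaccounted) | {} | {:.1} |", leakage, pct(leakage)).unwrap();
    writeln!(out, "| **Total** | {} | {:.1} |", outside_total_ms, pct(outside_total_ms)).unwrap();
    out
}
```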

Thanks for the feedback @nikomatsakis and @michaelwoerister! Those are all great ideas and I'll start working on them.

So it looks like the output is being produced directly by rustc, is that right?

@nnethercote Yes, that's correct.

Progress update: I've implemented much of the above feedback. The output now looks like this: In addition,

One suggestion: the JSON file should contain enough data to exactly recreate the

If it's possible to get the full command line that rustc was invoked with, I suggest including that in the JSON output too. That way, if you end up with a bunch of different JSON files, you have full data on how each one was created.

Finally, whenever you have coarse categories like this, the natural inclination is for users to want more detail. "Codegen takes 2065ms... can we break that down some more?" (I experienced this back in the early days of Firefox's about:memory.) This naturally leads back to the idea of a tree-like structure, with categories, sub-categories, sub-sub-categories, etc.
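A small std-only sketch of recording the command line next to the timings, assuming a flat category-to-milliseconds mapping and a hand-rolled JSON writer (a real implementation would more likely use a serialization library; the field names `command_line` and `categories` are hypothetical):

```rust
/// Quote and escape a string for use as a JSON string literal.
fn json_escape(s: &str) -> String {
    let mut out = String::with_capacity(s.len() + 2);
    out.push('"');
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            // Remaining control characters use the \u00XX form.
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out.push('"');
    out
}

/// Serialize the exact command line plus per-category timings (ms).
fn to_json(command_line: &[String], categories: &[(&str, u64)]) -> String {
    let args: Vec<String> = command_line.iter().map(|a| json_escape(a)).collect();
    let cats: Vec<String> = categories
        .iter()
        .map(|&(name, ms)| format!("{}:{}", json_escape(name), ms))
        .collect();
    format!("{{\"command_line\":[{}],\"categories\":{{{}}}}}", args.join(","), cats.join(","))
}
```

Inside rustc, the argument list could come from `std::env::args().collect::<Vec<String>>()`, so each JSON file records exactly how it was produced.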

A while ago I did some experiments with replacing time-passes with Chrome tracing events. I have now updated my branch of rustc and posted some results on this page. Unfortunately, the generated pages only work in Chrome. For some of the events I record extra data describing the event, so it is possible to see why some parts take more time. Maybe something similar could be used for the verbose mode.
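For reference, Chrome's trace viewer reads the Trace Event Format, in which a "complete" event carries `"ph":"X"` plus a start timestamp `ts` and a duration `dur`, both in microseconds. A rough sketch of a recorder that emits such events, assuming event names need no JSON escaping and the caller supplies a thread id (the `TraceRecorder` type is hypothetical, not the code from the branch above):

```rust
use std::time::Instant;

/// One finished activity, timed in microseconds relative to the recorder's epoch.
struct TraceEvent {
    name: &'static str,
    category: &'static str,
    start_us: u128,
    dur_us: u128,
    thread_id: u64,
}

/// Hypothetical recorder collecting "complete" events for chrome://tracing.
struct TraceRecorder {
    epoch: Instant,
    events: Vec<TraceEvent>,
}

impl TraceRecorder {
    fn new() -> Self {
        TraceRecorder { epoch: Instant::now(), events: Vec::new() }
    }

    /// Run `f`, time it, and record it as one complete event.
    fn record<R>(
        &mut self,
        name: &'static str,
        category: &'static str,
        thread_id: u64,
        f: impl FnOnce() -> R,
    ) -> R {
        let begin = Instant::now();
        let result = f();
        let elapsed = begin.elapsed();
        self.events.push(TraceEvent {
            name,
            category,
            start_us: begin.duration_since(self.epoch).as_micros(),
            dur_us: elapsed.as_micros(),
            thread_id,
        });
        result
    }

    /// Serialize as a JSON array of Trace Event Format objects.
    fn to_trace_json(&self) -> String {
        let items: Vec<String> = self
            .events
            .iter()
            .map(|e| {
                format!(
                    r#"{{"name":"{}","cat":"{}","ph":"X","ts":{},"dur":{},"pid":1,"tid":{}}}"#,
                    e.name, e.category, e.start_us, e.dur_us, e.thread_id
                )
            })
            .collect();
        format!("[{}]", items.join(","))
    }
}
```

The resulting string can be written to a file and opened via chrome://tracing; per-event extra data of the kind mentioned above would go in an additional `args` object on each event.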

@wesleywiser this looks really nice.

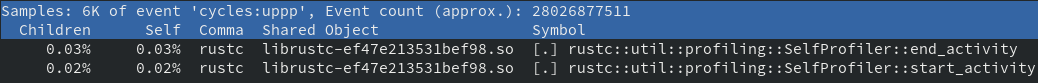

Implement a self profiler

This is a work-in-progress implementation of #50780. I'd love feedback on the overall structure and code, as well as on some specific things:

- [The query categorization mechanism](https://github.com/rust-lang/rust/compare/master...wesleywiser:wip_profiling?expand=1#diff-19e0a69c10eff31eb2d16805e79f3437R101). This works but looks kind of ugly to me. Perhaps there's a better way?
- [The profiler assumes only one activity can run at a time](https://github.com/rust-lang/rust/compare/master...wesleywiser:wip_profiling?expand=1#diff-f8a403b2d88d873e4b27c097c614a236R177). This is obviously incompatible with the ongoing parallel queries.
- [The output code is just a bunch of `format!()`s](https://github.com/rust-lang/rust/compare/master...wesleywiser:wip_profiling?expand=1#diff-f8a403b2d88d873e4b27c097c614a236R91). Is there a better way to generate Markdown or JSON in the compiler?
- [The query categorizations are likely wrong](https://github.com/rust-lang/rust/compare/master...wesleywiser:wip_profiling?expand=1#diff-19e0a69c10eff31eb2d16805e79f3437R101). I've marked what seemed obvious to me, but I'm sure I got a lot of them wrong.

The overhead currently seems very low. Running `perf` on a sample compilation with profiling enabled reveals:
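One possible way around the "only one activity at a time" limitation, sketched under assumptions rather than taken from the PR: each thread owns its own profiler holding a stack of open activities, a nested activity pauses its parent so every nanosecond on that thread is charged to exactly one category, and the per-thread results are merged at the end. Timestamps are passed in explicitly (in nanoseconds) to keep the sketch deterministic; real code would read a clock such as `std::time::Instant`. All names are hypothetical:

```rust
use std::collections::HashMap;

/// Per-thread profiler: a stack of open activities. Starting a nested
/// activity pauses the parent, so time is attributed as "self time".
/// Each thread owns its own instance, so activities on different
/// threads may overlap freely.
#[derive(Default)]
struct ThreadProfiler {
    stack: Vec<&'static str>,
    last_switch_ns: u64,
    self_time_ns: HashMap<&'static str, u64>,
}

impl ThreadProfiler {
    /// Charge the time since the last switch to the activity on top of the stack.
    fn charge(&mut self, now_ns: u64) {
        if let Some(&top) = self.stack.last() {
            *self.self_time_ns.entry(top).or_insert(0) += now_ns.saturating_sub(self.last_switch_ns);
        }
        self.last_switch_ns = now_ns;
    }

    fn start_activity(&mut self, category: &'static str, now_ns: u64) {
        self.charge(now_ns);
        self.stack.push(category);
    }

    fn end_activity(&mut self, now_ns: u64) {
        self.charge(now_ns);
        self.stack.pop().expect("end_activity without matching start_activity");
    }
}

/// Combine per-thread results once all threads have finished.
fn merge(profilers: &[ThreadProfiler]) -> HashMap<&'static str, u64> {
    let mut total = HashMap::new();
    for p in profilers {
        for (&cat, &ns) in &p.self_time_ns {
            *total.entry(cat).or_insert(0) += ns;
        }
    }
    total
}
```

Note that merged totals are summed CPU-side time across threads, so with parallel queries they can exceed wall-clock time; a report would need to say which of the two it shows.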

I believe this can be closed now. We have the

It'd be really nice if there were an easy way to break down compilation time into a few categories, and to get a summary of reuse:

Something that we could conveniently copy and paste into bug reports to get an overall sense of what is going on. This would replace and/or build on the existing profiling options. (Even better would be if we could later have an option to get per-query data.)
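A sketch of the kind of reuse summary described above, assuming hypothetical per-category counters of query invocations that were answered from a cache versus actually executed (how rustc would gather these counts is not shown):

```rust
/// Hypothetical per-category counters: query invocations answered from a
/// cache (reused) versus actually executed.
struct QueryStats {
    category: &'static str,
    hits: u64,
    executed: u64,
}

fn percent(part: u64, whole: u64) -> f64 {
    if whole == 0 { 0.0 } else { 100.0 * part as f64 / whole as f64 }
}

/// One line per category plus an overall line, short enough to paste into a bug report.
fn reuse_summary(stats: &[QueryStats]) -> String {
    let mut lines = Vec::new();
    let (mut hits, mut total) = (0u64, 0u64);
    for s in stats {
        let n = s.hits + s.executed;
        hits += s.hits;
        total += n;
        lines.push(format!("{}: {}/{} reused ({:.1}%)", s.category, s.hits, n, percent(s.hits, n)));
    }
    lines.push(format!("total: {}/{} reused ({:.1}%)", hits, total, percent(hits, total)));
    lines.join("\n")
}
```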

cc @nnethercote @michaelwoerister
