-

Notifications

You must be signed in to change notification settings - Fork 4.8k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

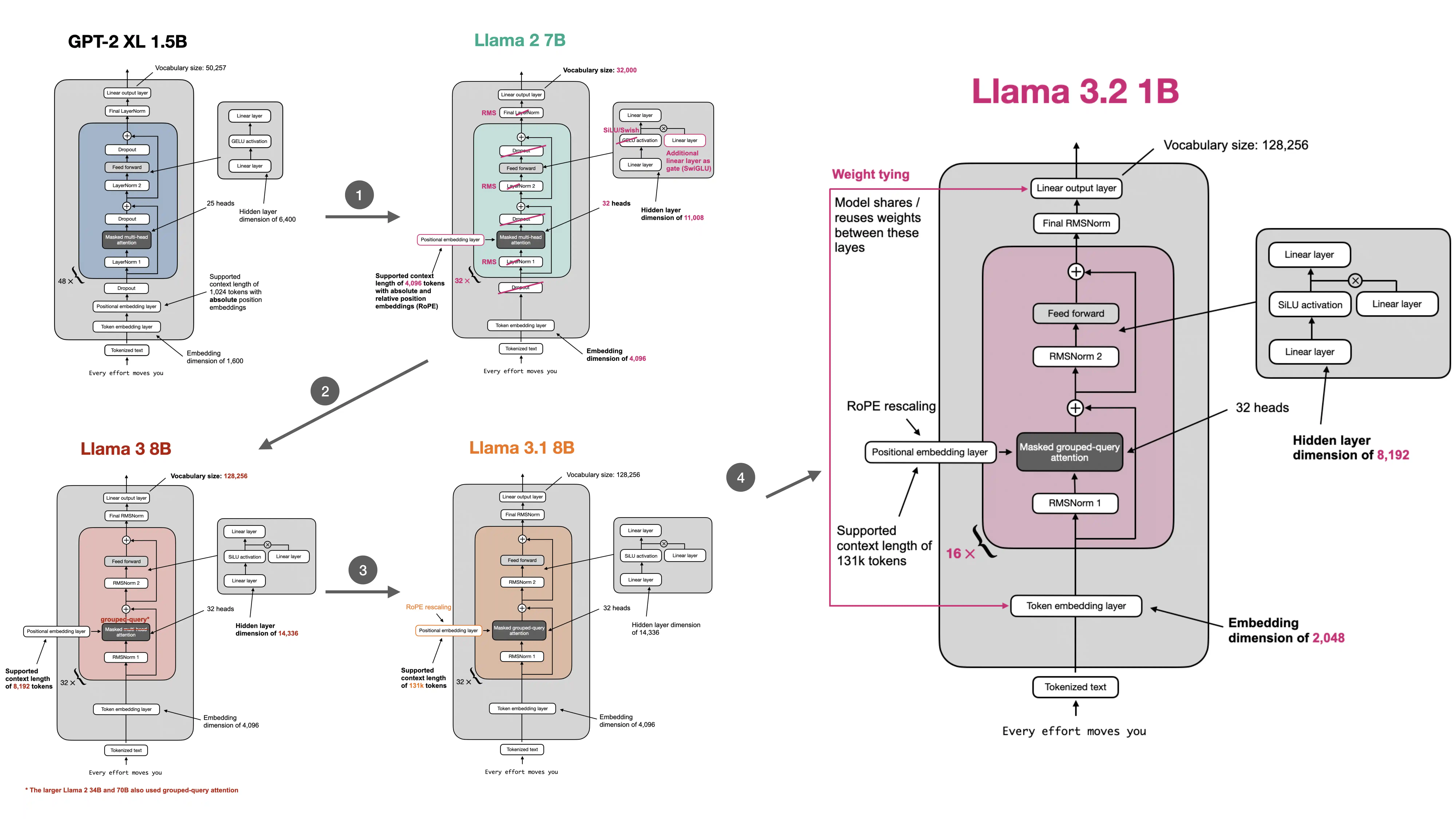

minor fixes: Llama 3.2 standalone #420

d-kleine commented on Oct 26, 2024

- minor fixes and improvements for Llama 3.2 standalone nb

Check out this pull request on ReviewNB. See visual diffs & provide feedback on Jupyter Notebooks. Powered by ReviewNB

Good catch regarding the 72. I also reformatted the RoPE base as float to make it consistent with the other RoPE float settings.

@rasbt Thanks! I just took a look at the updated figure; the "32 heads" of Llama 3 8B is still in bold print. Also, I noticed another piece of information in the figure that might need an update:

You could also add that Llama 2 already used GQA for the larger models (34B and 70B) for improved inference scalability. I think this is interesting information for the figure.

Thanks, I will try to update it in the next few days!

Looks like I had fixed the "heads" in the Llama figure but then forgot to apply it to some of the figures where it's used as a subfigure. Good call regarding the RoPE btw. Should be taken care of now!

Looks great, thanks! Superb comprehensive overview btw!