This repository has been archived by the owner on Sep 18, 2024. It is now read-only.

-

Notifications

You must be signed in to change notification settings - Fork 1.8k

[Model Compression] admm pruner #4116

Merged

Merged

Changes from all commits

Commits

Show all changes

7 commits

Select commit

Hold shift + click to select a range

cf05b6e

add admm pruner

J-shang 7db5fa0

update docstr

J-shang f312f7b

Merge branch 'master' into compression_v2_admm

J-shang 7229202

add validation

J-shang aca6cfa

rename row to rho

J-shang 1dbba22

Merge remote-tracking branch 'upstream/master' into compression_v2_admm

J-shang 89fa3f2

update

J-shang File filter

Filter by extension

Conversations

Failed to load comments.

Loading

Jump to

Jump to file

Failed to load files.

Loading

Diff view

Diff view

There are no files selected for viewing

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

Add this suggestion to a batch that can be applied as a single commit.

This suggestion is invalid because no changes were made to the code.

Suggestions cannot be applied while the pull request is closed.

Suggestions cannot be applied while viewing a subset of changes.

Only one suggestion per line can be applied in a batch.

Add this suggestion to a batch that can be applied as a single commit.

Applying suggestions on deleted lines is not supported.

You must change the existing code in this line in order to create a valid suggestion.

Outdated suggestions cannot be applied.

This suggestion has been applied or marked resolved.

Suggestions cannot be applied from pending reviews.

Suggestions cannot be applied on multi-line comments.

Suggestions cannot be applied while the pull request is queued to merge.

Suggestion cannot be applied right now. Please check back later.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

please use a clear name rather than

ZandU.There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

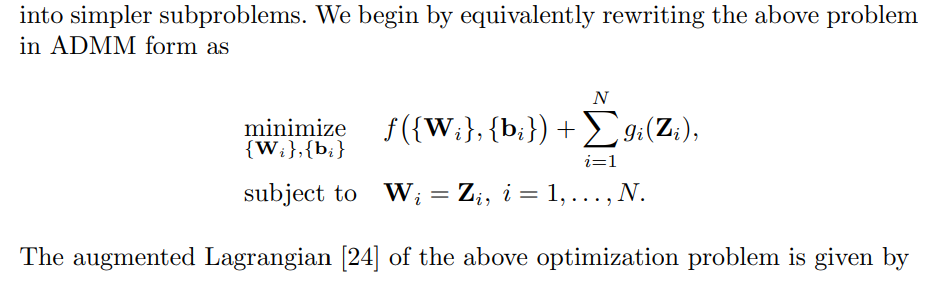

Ucan be named asscaled_dual_variable, but I have no idea to nameZ. The author rewrites the origin problem to 2-block optimization, andZcan be seen as another solution ofweight. I think usingZis because in ADMM, the second optimization goal is usually denoted asz.And in compression, the problem is

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Got it.