
Configuration of Azure

Configuration on the Azure side of things depends on the data input (Activity Logs, Diagnostic Logs, or Metrics). Configuration changes take effect immediately and require no restart of either Splunk or the modular input / add-on.

Activity Logs are exported to an Event Hub (or storage account) at subscription-and-region scope. In the Azure portal, scroll to the bottom of the left navigation pane and click the > More Services button. Type "activity" and select "Activity Log" from the list. In the blade that opens, select 'Export' from the menu.

In the next blade, select the subscription and regions that you want to export. Check the box to export to an event hub and set how long messages should be retained in the event hub. If you're ingesting the messages into Splunk, they are picked up within a minute of appearing in the Event Hub, so you can think of the event hub retention as a backup. Click the "Select a service bus namespace" button and configure the blade that opens as shown.

Diagnostic Logs are configured on a resource-by-resource basis. This can be done in the portal, with the Azure CLI or PowerShell, or in code. I'll use the portal as an example here. This page gives full details: How to enable collection of Diagnostic Logs

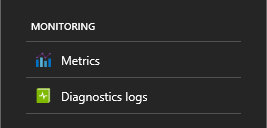

Find the Monitoring section of the resource's blade and click 'Diagnostic Logs':

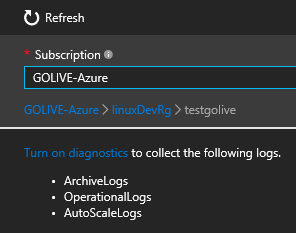

This will look different for different resources: not all have Metrics, and not all have Diagnostic Logs. Some have different options altogether, or no Monitoring section at all. The blade that opens is also resource-specific, but it will contain a link or button for configuring diagnostic logs, such as the following example:

Switch on diagnostics and configure the event hub as shown:

Most resources allow you to pick the hub name or leave it blank to use the default hub name. If you pick a name, you must also create that hub in the event hub namespace. Give it a name such as "insights-logs-diagnostics" and set the number of partitions to 4 (this is mandatory).

Full details on metrics are on this page: Overview of metrics in Microsoft Azure. As it states, metrics for resources that emit them are always available without any additional configuration. To reduce volume and avoid collecting data you don't want, the add-on looks at the Metrics tag on each resource.

If there is no Metrics tag, there will be no metrics collected for that resource.
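To make the tag rule concrete, here is a minimal Python sketch of how a collector could decide which resources to poll. It is not the add-on's actual code: the resource records, their `name` and `tags` fields, and the exact-match lookup of a tag named `Metrics` are assumptions made for illustration.

```python
from typing import Optional

# Hypothetical resource records; only the "tags" field matters for this rule.
resources = [
    {"name": "web-vm", "tags": {"Metrics": "*"}},
    {"name": "cache01", "tags": {"Metrics": "cachehits,cachemisses"}},
    {"name": "scratch-disk", "tags": {"owner": "ops"}},
    {"name": "untagged-db", "tags": None},
]


def metrics_tag(resource: dict) -> Optional[str]:
    """Return the Metrics tag value, or None if the resource has no such tag."""
    tags = resource.get("tags") or {}
    return tags.get("Metrics")


def resources_to_poll(resources: list) -> list:
    """Names of resources that carry a Metrics tag; all others are skipped."""
    return [r["name"] for r in resources if metrics_tag(r) is not None]


print(resources_to_poll(resources))  # ['web-vm', 'cache01']
```

Resources without the tag (or with no tags at all) simply drop out, so no metrics requests are ever made for them.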

This page gives a list of all metrics that are currently supported by Azure Monitor: Supported metrics with Azure Monitor. The Metrics tag should be set to a comma-separated list of the metrics that you want to track. If you want all available metrics, set Metrics = *.

For example:
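A minimal Python sketch of how a Metrics tag value could be resolved into metric names, assuming `*` expands to every metric the resource exposes and any other value is split on commas. The `available` list, the metric subset, and the function name are hypothetical; the add-on's own parsing may differ (for example, in how it treats whitespace or unknown names).

```python
def requested_metrics(tag_value: str, available: list) -> list:
    """Resolve a Metrics tag value against the metrics a resource exposes.

    "*" selects every available metric; any other value is treated as a
    comma-separated list, with surrounding whitespace and empty entries dropped.
    """
    value = tag_value.strip()
    if value == "*":
        return list(available)
    names = [name.strip() for name in value.split(",")]
    return [name for name in names if name]


# Hypothetical subset of what a Redis Cache resource might expose.
redis_subset = ["connectedclients", "cachehits", "cachemisses", "usedmemory"]

print(requested_metrics("*", redis_subset))
print(requested_metrics("cachehits, cachemisses", redis_subset))  # ['cachehits', 'cachemisses']
```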

It may seem expedient to simply set Metrics = * on every resource and let the add-on sort out the details. In a perfect world, this would work fine; the add-on doesn't care. However, there are limits and throttles on just about everything in computing. One of these is the length of the query string that can be sent to the ARM APIs. The Redis Cache resource, for example, has a large number of available metrics, and requesting all of them exceeds the query string limit (2048 bytes). In cases like this, the best practice is to limit the request to a top-level set of metrics for the typical case, then drill down as the situation demands.

For Redis, the 'typical case' metrics are:

`connectedclients,cachehits,totalkeys,serverLoad,percentProcessorTime,totalcommandsprocessed,usedmemoryRss,usedmemory,evictedkeys,expiredkeys,getcommands,cacheRead,cacheWrite,cachemisses,setcommands`
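The query-string arithmetic behind this advice can be checked with a short Python sketch. It assumes a request shaped like the Azure Monitor metrics REST call, with the metric list passed in a `metricnames` parameter alongside an `api-version`; both parameter names and the version string are assumptions here, and the 2048-byte limit is the one quoted above.

```python
from urllib.parse import urlencode

# Query-string limit quoted in the text.
MAX_QUERY_BYTES = 2048

# The Redis "typical case" metric list from above.
REDIS_TYPICAL = (
    "connectedclients,cachehits,totalkeys,serverLoad,percentProcessorTime,"
    "totalcommandsprocessed,usedmemoryRss,usedmemory,evictedkeys,expiredkeys,"
    "getcommands,cacheRead,cacheWrite,cachemisses,setcommands"
).split(",")


def metrics_query(metric_names: list, api_version: str = "2018-01-01") -> str:
    """Build the query string for one metrics request.

    Assumed parameter names and api-version; commas are left unescaped.
    """
    params = {"api-version": api_version, "metricnames": ",".join(metric_names)}
    return urlencode(params, safe=",")


def fits_query_limit(metric_names: list, limit: int = MAX_QUERY_BYTES) -> bool:
    """True if the encoded query string stays within the byte limit."""
    return len(metrics_query(metric_names).encode("utf-8")) <= limit


print(len(metrics_query(REDIS_TYPICAL)), fits_query_limit(REDIS_TYPICAL))

# Illustrative only: 150 hypothetical names of about 20 characters each.
too_many = [f"hypotheticalmetric{i:03d}" for i in range(150)]
print(fits_query_limit(too_many))
```

The typical-case Redis list fits comfortably, while the long hypothetical list does not. This sketch measures only the query string; a real request also carries the resource ID in the URL path.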

As additional cases surface, this document will be updated.