[SPARK-49034][CORE] Support server-side sparkProperties replacement in REST Submission API

### What changes were proposed in this pull request?

Like SPARK-49033, this PR aims to support server-side `sparkProperties` replacement in the REST Submission API.

- For example, ephemeral Spark clusters with server-side environment variables can provide backend resources and information without touching client-side applications and configurations.

- The placeholder pattern follows the `{{SERVER_ENVIRONMENT_VARIABLE_NAME}}` style, as in the following.

https://github.com/apache/spark/blob/163e512c53208301a8511310023d930d8b77db96/docs/configuration.md?plain=1#L694

https://github.com/apache/spark/blob/163e512c53208301a8511310023d930d8b77db96/core/src/main/scala/org/apache/spark/deploy/rest/StandaloneRestServer.scala#L233-L234
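
The replacement described above can be sketched roughly as follows. This is a minimal illustration, not the code in `StandaloneRestServer.scala`: the object `PlaceholderSketch`, the helper names, and the `env` parameter are hypothetical. It also assumes that a value is replaced only when it consists entirely of one `{{NAME}}` placeholder and that it is left untouched when the variable is not set on the server; this PR description does not spell out either rule.

```scala
object PlaceholderSketch {
  // Matches a value that consists entirely of one {{NAME}} placeholder (assumption).
  private val Placeholder = """\{\{(\w+)\}\}""".r

  // Hypothetical helper: `env` defaults to the environment of the server process.
  // Returns the variable's value, or the original string if it is not a
  // placeholder or the variable is not defined.
  def replacePlaceholder(value: String, env: Map[String, String] = sys.env): String =
    value match {
      case Placeholder(name) => env.getOrElse(name, value)
      case _ => value
    }

  // Applies the replacement to every value of a sparkProperties-like map.
  def replaceAll(
      props: Map[String, String],
      env: Map[String, String] = sys.env): Map[String, String] =
    props.map { case (key, value) => key -> replacePlaceholder(value, env) }
}
```

Passing the environment as a parameter keeps the sketch testable without changing the process environment; for example, `PlaceholderSketch.replacePlaceholder("{{AWS_ENDPOINT_URL}}", Map("AWS_ENDPOINT_URL" -> "ENDPOINT_FOR_THIS_CLUSTER"))` yields `ENDPOINT_FOR_THIS_CLUSTER`.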

### Why are the changes needed?

A user can submit an environment variable placeholder like `{{AWS_ENDPOINT_URL}}` in order to use server-wide environment variables of the Spark Master.

```
$ SPARK_MASTER_OPTS="-Dspark.master.rest.enabled=true" \
  AWS_ENDPOINT_URL=ENDPOINT_FOR_THIS_CLUSTER \
  sbin/start-master.sh
$ sbin/start-worker.sh spark://$(hostname):7077
```

```
curl -s -k -XPOST http://localhost:6066/v1/submissions/create \
  --header "Content-Type:application/json;charset=UTF-8" \
  --data '{
    "appResource": "",
    "sparkProperties": {
      "spark.master": "spark://localhost:7077",
      "spark.app.name": "",
      "spark.submit.deployMode": "cluster",
      "spark.hadoop.fs.s3a.endpoint": "{{AWS_ENDPOINT_URL}}",
      "spark.jars": "/Users/dongjoon/APACHE/spark-merge/examples/target/scala-2.13/jars/spark-examples_2.13-4.0.0-SNAPSHOT.jar"
    },
    "clientSparkVersion": "",
    "mainClass": "org.apache.spark.examples.SparkPi",
    "environmentVariables": {},
    "action": "CreateSubmissionRequest",
    "appArgs": [ "10000" ]
  }'
```

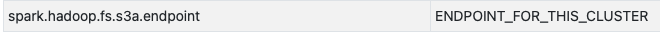

- http://localhost:4040/environment/

### Does this PR introduce _any_ user-facing change?

No. This is a new feature and is disabled by default via `spark.master.rest.enabled` (default: `false`).

### How was this patch tested?

Pass the CIs with a newly added test case.

### Was this patch authored or co-authored using generative AI tooling?

No.

Closes apache#47511 from dongjoon-hyun/SPARK-49034-2.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>