κυβερνήτης: means helmsman or ship pilot

K8s: the 8 stands for the eight characters between the K and the s

- Kubernetes is written in Go

- Inspired by Google's Borg

-

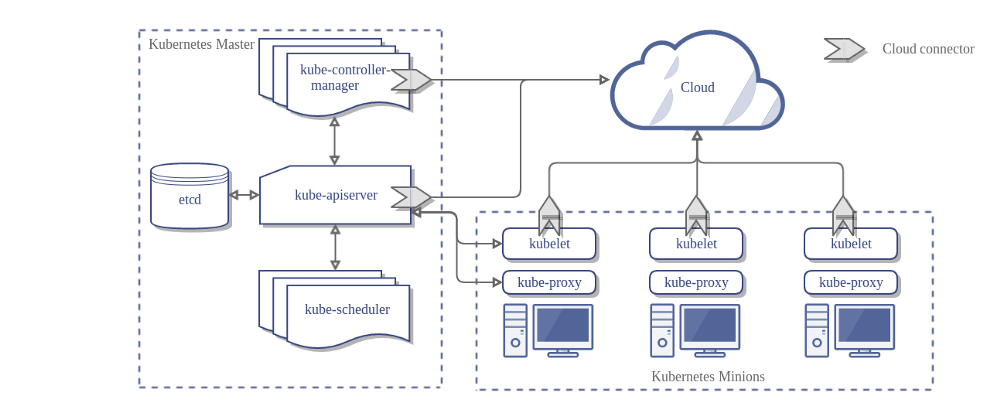

Each node in the cluster runs kubelet (

/var/lib/kubeletdocs), kube-proxy & container engine (Docker/cri-o/rkt) -

kubelet ensures access or creation of storage, Secrets or ConfigMaps

- Master Node contains kube-apiserver, kube-scheduler, Controllers and etcd db

- Controllers: (aka: operators) "A control loop that watches the shared state of the cluster through the kube-apiserver and makes changes attempting to move the current state towards the desired state." [docs]

- "A loop process receives an obj or object, which is an array of deltas from the DeltaFIFO queue. As long as the delta is not of the type Deleted, the logic of the controller is used to create or modify some object until it matches the specification." [2.19]

- Informer (e.g. SharedInformer): creates a local cache of object state, so that controllers do not have to query kube-apiserver repeatedly.

- Ingress Controller: a daemon, deployed as a Kubernetes Pod, that watches the apiserver's `/ingresses` endpoint. [gh doc]

- Ingress provides load balancing, SSL termination and name-based virtual hosting. [docs]
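Name-based virtual hosting is easier to picture with a manifest. The following is a minimal sketch, assuming a cluster recent enough to serve `networking.k8s.io/v1` and an Ingress Controller already running; the host names and the Services `blue-svc` and `green-svc` are hypothetical.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: virtual-host-demo        # hypothetical name
spec:
  rules:
  - host: blue.example.com       # requests for this host name ...
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: blue-svc       # ... are routed to this (hypothetical) Service
            port:
              number: 80
  - host: green.example.com      # a second host served through the same Ingress
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: green-svc      # hypothetical Service
            port:
              number: 80
```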

- Deployment: manages a ReplicaSet, a controller which in turn creates the Pods that run the containers [Create, scale]

- "The default, newest, and feature-filled controller for containers is a Deployment" LFD259 training material

- DaemonSet: Normally, the machine that a Pod runs on is selected by the Kubernetes scheduler. However, Pods created by the DaemonSet controller have the machine already selected

- ReplicaSet

- "It is strongly recommended to make sure that the bare Pods do not have labels which match the selector of one of your ReplicaSets… it can acquire other Pods" [docs]

- StatefulSet: manages the deployment and scaling of a set of Pods, and provides guarantees about their ordering and uniqueness.

- Job: creates one or more Pods and ensures that a specified number of them successfully terminate.

- CronJob: creates Jobs on a time-based schedule.
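To make the Job and CronJob descriptions concrete, here is a minimal sketch of a CronJob whose Job template runs one Pod to completion every five minutes. It assumes a cluster that serves `batch/v1` for CronJob (older clusters used `batch/v1beta1`); the name, image and command are placeholders.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: hello-cron                 # hypothetical name
spec:
  schedule: "*/5 * * * *"          # standard cron syntax: every five minutes
  jobTemplate:                     # each run creates a Job from this template
    spec:
      completions: 1               # the Job is done once one Pod terminates successfully
      template:
        spec:
          restartPolicy: OnFailure # Job Pods must use OnFailure or Never
          containers:
          - name: hello
            image: busybox:1.36    # placeholder image
            command: ["sh", "-c", "date; echo hello"]
```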

- etcd db is a B+ tree key-value store.

- There can be followers to the master db; these are managed through kubeadm

- kube-apiserver handles both internal and external traffic and is the only agent that connects to the etcd db.

- kube-scheduler decides which node each Pod is deployed to; it also does quota validation.

- uses the PodSpec to determine the best node for deployment.

- Resource requests and limits:

  - `spec.containers[].resources.limits.cpu`
  - `spec.containers[].resources.requests.cpu`
  - `spec.containers[].resources.limits.memory`
  - `spec.containers[].resources.requests.memory`
  - `spec.containers[].resources.limits.ephemeral-storage`
  - `spec.containers[].resources.requests.ephemeral-storage`
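The request and limit fields named above sit under each container of a PodSpec. A minimal sketch of where they go, with a hypothetical Pod name and illustrative values:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: resource-demo          # hypothetical name
spec:
  containers:
  - name: app                  # hypothetical container
    image: nginx:1.25          # placeholder image
    resources:
      requests:                # what kube-scheduler looks for on a candidate node
        cpu: 250m              # 250 millicores, a quarter of a core
        memory: 64Mi
        ephemeral-storage: 1Gi
      limits:                  # upper bound enforced while the container runs
        cpu: 500m
        memory: 128Mi
        ephemeral-storage: 2Gi
```

Requests influence scheduling, while limits cap what the running container may consume.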


- Pod: a group of co-located containers that share the same IP address (the additional containers could be for logging and/or different functionality)

- State: "To check state of container, you can use `kubectl describe pod [POD_NAME]`" [docs]

- Probe

  - "diagnostic performed periodically by the kubelet on a Container. To perform a diagnostic, the kubelet calls a Handler implemented by the Container" [docs]
  - readinessProbe: is the container ready to accept traffic?
    - `exec`: succeeds with a zero exit code
    - `httpGet`: succeeds when the response code is in the range 200-399
  - livenessProbe

- sidecar: a container dedicated to performing a helper task, like handling logs and responding to requests. [2.17 LFD259]

- pause container is used to get an IP address, then all the containers in the pod will use its network namespace

- Containers in a Pod can communicate with each other via the loopback interface, IPC, or by writing files to a common filesystem.
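The probe notes above can be sketched as a Pod manifest. This is only an illustration: the Pod name, image, paths and timings are assumptions, with one `httpGet` readiness probe and one `exec` liveness probe.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: probe-demo               # hypothetical name
spec:
  containers:
  - name: web
    image: nginx:1.25            # placeholder image
    ports:
    - name: web-port             # a named port, so probes and Services can refer to it
      containerPort: 80
    readinessProbe:              # gates whether the Pod receives Service traffic
      httpGet:
        path: /
        port: web-port
      initialDelaySeconds: 5
      periodSeconds: 10          # passes when the response code is 200-399
    livenessProbe:               # a failing liveness probe gets the container restarted
      exec:
        command: ["cat", "/usr/share/nginx/html/index.html"]
      periodSeconds: 10          # passes when the command exits with code zero
```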


- Container runtime options: Docker, CRI, rkt 🚀, CRI-O

- runC is part of Kubernetes and, unlike Docker, is not bound to higher-level tools and is more portable across operating systems and environments.

- Buildah & Podman (pod-manager) allow building images with and without Dockerfiles while not requiring any root privileges

- Annotations are for metadata

- Supervisord monitors the kubelet and docker processes

- Fluentd could be used for cluster-wide logging

- kubeadm: the command to bootstrap the cluster. [docs]

- Starting with v1.15.1, kubeadm allows easy deployment of a multi-master cluster with stacked etcd or an external database cluster.

Authentication -login-> Authorization -requests-> Admission Control (other checks)

- Client Certificate: `--client-ca-file=SOMEFILE`

- Webhook Token Authentication

- Static Token(s) File: `--token-auth-file=SOMEFILE`

- Bootstrap Tokens (alpha): used for bootstrapping a new Kubernetes cluster.

- Static Password File: `--basic-auth-file=SOMEFILE`

- Service Account Tokens: This is an automatically enabled authenticator that uses signed bearer tokens to verify the requests. These tokens get attached to Pods using the ServiceAccount Admission Controller, which allows in-cluster processes to talk to the API server.

- OpenID Connect Tokens

- Keystone Password: `--experimental-keystone-url=<AuthURL>`

- Authenticating Proxy

- Node Authorizer

- Webhook: `--authorization-webhook-config-file=WebhookAuthz.json`

- Role-Based Access Control (RBAC) Authorizer (& ClusterRole): `--authorization-mode=RBAC`

      kind: RoleBinding
      apiVersion: rbac.authorization.k8s.io/v1
      metadata:
        name: pod-read-access
        namespace: lfs158
      subjects:
      - kind: User
        name: nkhare
        apiGroup: rbac.authorization.k8s.io
      roleRef:
        kind: Role
        name: pod-reader
        apiGroup: rbac.authorization.k8s.io

- Attribute-Based Access Control (ABAC) Authorizer: `--authorization-mode=ABAC --authorization-policy-file=Policy.json`

      {
        "apiVersion": "abac.authorization.kubernetes.io/v1beta1",
        "kind": "Policy",
        "spec": {
          "user": "nkhare",
          "namespace": "lfs158",
          "resource": "pods",
          "readonly": true
        }
      }

- Admission Control takes effect after API requests are authenticated and authorized.

- It is configured with `--admission-control`, which takes a comma-delimited, ordered list of admission controller names, e.g.:

  `--admission-control=NamespaceLifecycle,ResourceQuota,PodSecurityPolicy,DefaultStorageClass`

- External-to-pod communications

- "defines a logical set of Pods and a policy by which to access them" [docs]

- Service is a microservice handling access policies and traffic; NodePort or LoadBalancer

- Logically, via Labels & Selectors, groups Pods and a policy to access them

- load balancing while selecting the Pods

- By default, each Service also gets an IP address, which is routable only inside the cluster

- Service can have multiple IP:Port endpoints

- ServiceType

- Decide the access scope

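- ClusterIP is the default ServiceType; the Service is reachable only inside the cluster

- NodePort: in addition to a ClusterIP, a port from the range 30000-32767 is exposed on all worker nodes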

- ExternalName

- Has no Selectors & endpoints

- Accessible within the cluster

- Returns a CNAME record of an externally configured Service.
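A minimal sketch of an ExternalName Service, to show why it needs no selector or endpoints: it only maps a cluster-internal name to an external DNS name. The Service name and `db.example.com` are hypothetical.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: external-db              # hypothetical name, resolvable inside the cluster
spec:
  type: ExternalName             # no selector and no endpoints are defined
  externalName: db.example.com   # cluster DNS answers with a CNAME to this name
```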

- Service Discovery

- Environment Variables for the (previously) created services as:

  - `<SERVICE_NAME>_SERVICE_HOST`
  - `<SERVICE_NAME>_SERVICE_PORT`
  - `<SERVICE_NAME>_PORT`
  - `<SERVICE_NAME>_PORT_<PORT>_TCP`
  - `<SERVICE_NAME>_PORT_<PORT>_TCP_PROTO`
  - `<SERVICE_NAME>_PORT_<PORT>_TCP_PORT`
  - `<SERVICE_NAME>_PORT_<PORT>_TCP_ADDR`

- DNS add-on

- Services within the same Namespace can reach each other by their name.

- example:

      apiVersion: v1
      kind: Service
      metadata:
        name: ServiceName
        labels:
          app: mysvc
      spec:
        type: NodePort
        ports:
        - port: 80              # port exposed by the Service
          targetPort: web-port  # forwarded to this named container port
          protocol: TCP
        selector:
          app: mysvc

- kube-proxy: runs in worker nodes

- Configures the iptables rules for forwarding to the Service endpoint(s)

- ConfigMap

- Create from directory

- Create from file/url

- Create from literal values

- Environment variables in Pod

- Secret: data is stored as plain text inside etcd, so limit access

- The secret value does not appear in the output of `kubectl get secret [secret]` and `kubectl describe secret [secret]`

- As Pod Volume

- Environment Variable
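The two ways of consuming a ConfigMap or Secret noted above (environment variable and Pod volume) can be sketched in a single manifest. All names, keys and values below are hypothetical, and `stringData` is used only to keep the Secret readable in the example.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config               # hypothetical name
data:
  LOG_LEVEL: info
---
apiVersion: v1
kind: Secret
metadata:
  name: app-secret               # hypothetical name
type: Opaque
stringData:                      # plain strings here; stored base64-encoded under data
  password: change-me
---
apiVersion: v1
kind: Pod
metadata:
  name: config-demo              # hypothetical name
spec:
  containers:
  - name: app
    image: busybox:1.36          # placeholder image
    command: ["sh", "-c", "env; sleep 3600"]
    env:
    - name: LOG_LEVEL            # ConfigMap key exposed as an environment variable
      valueFrom:
        configMapKeyRef:
          name: app-config
          key: LOG_LEVEL
    - name: DB_PASSWORD          # Secret key exposed as an environment variable
      valueFrom:
        secretKeyRef:
          name: app-secret
          key: password
    volumeMounts:
    - name: secret-vol           # the same Secret mounted as files
      mountPath: /etc/secret
      readOnly: true
  volumes:
  - name: secret-vol
    secret:
      secretName: app-secret
```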

- `kubectl get pods`

- `kubectl exec -it <Pod-Name> -- /bin/bash`

- `kubectl run <Deploy-Name> --image=<repo>/<app-name>:<version>`

- Monitoring the resource usage cluster-wide

- Fluentd: data collector for a unified logging layer

TODO, trying to summarize the official examples. docs

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: DEPLOYMENT_NAME
      labels:                # (DEPLOYMENT|SERVICE)
        appdb: rsvpdb
    spec:
      replicas: 1
      selector:
        matchLabels:         # (DEPLOYMENT|SERVICE)
          appdb: rsvpdb
      template:
        metadata:
          labels:            # (DEPLOYMENT)
            appdb: rsvpdb
        spec:
          containers:
          - name: DEPLOYMENT_CONTAINER_NAME
            image: DEPLOYMENT_IMAGE:TAG
            ports:
            - containerPort: DeploymentPort

    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: mongodb
      labels:                # (DEPLOYMENT|SERVICE)
        app: rsvpdb
    spec:
      ports:
      - port: 27017
        protocol: TCP
      selector:              # (DEPLOYMENT|SERVICE)
        appdb: rsvpdb

    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: pod-read-access
      namespace: lfs158
    subjects:
    - kind: User
      name: nkhare
      apiGroup: rbac.authorization.k8s.io
    roleRef:
      kind: Role
      name: pod-reader
      apiGroup: rbac.authorization.k8s.io
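The RoleBinding above refers to a Role named `pod-reader`, which these notes do not show. A minimal sketch of what such a Role could look like, assuming it should grant read-only access to Pods in the `lfs158` namespace; the verb list is an assumption.

```yaml
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: pod-reader               # must match roleRef.name in the RoleBinding
  namespace: lfs158              # a Role is namespaced, like its RoleBinding
rules:
- apiGroups: [""]                # "" is the core API group, where Pods live
  resources: ["pods"]
  verbs: ["get", "watch", "list"]  # assumed read-only verbs
```

With both objects applied, the user `nkhare` could read Pods in `lfs158` but not modify them.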