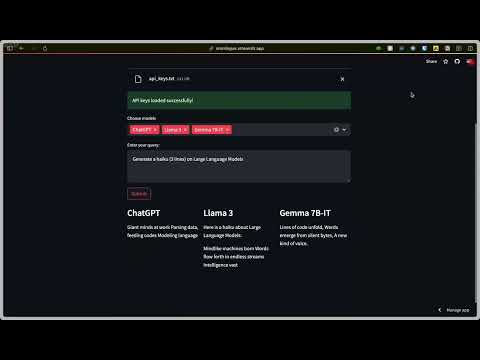

Omnilogue is a Streamlit-based application that lets users query multiple Large Language Models (LLMs) simultaneously. It supports ChatGPT, Claude, Llama 3, and Gemma 2B-9B-IT.

Live demo: https://omnilogue.streamlit.app

- Query multiple LLMs simultaneously

- Upload API keys securely

- Select models based on available API keys

- Compare responses from different models
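The "select models based on available API keys" feature amounts to filtering the model list by which keys the user supplied. Below is a minimal sketch of that idea. It assumes ChatGPT needs `OPENAI_API_KEY`, Claude needs `ANTHROPIC_API_KEY`, and Llama 3 and Gemma are both served through Groq (`GROQ_API_KEY`). The `MODEL_KEY_REQUIREMENTS` table and the `available_models` function are hypothetical names for illustration, not taken from the app's source.

```python
# Hypothetical mapping from each model to the API key it needs.
# Assumption: ChatGPT uses OpenAI, Claude uses Anthropic, and Llama 3 and
# Gemma are both served through Groq; the real app.py may differ.
MODEL_KEY_REQUIREMENTS: dict[str, str] = {
    "ChatGPT": "OPENAI_API_KEY",
    "Claude": "ANTHROPIC_API_KEY",
    "Llama 3": "GROQ_API_KEY",
    "Gemma": "GROQ_API_KEY",
}


def available_models(api_keys: dict[str, str]) -> list[str]:
    """Return the models whose required API key is present and non-blank."""
    return [
        model
        for model, key_name in MODEL_KEY_REQUIREMENTS.items()
        if api_keys.get(key_name, "").strip()
    ]


if __name__ == "__main__":
    keys = {"OPENAI_API_KEY": "sk-example", "GROQ_API_KEY": "gsk-example"}
    print(available_models(keys))  # ['ChatGPT', 'Llama 3', 'Gemma']
```

Because one Groq key unlocks both Llama 3 and Gemma in this sketch, a user with only `GROQ_API_KEY` would still see two models to choose from.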

- Clone the repository:

  `git clone https://github.com/parthmshah1302/omnilogue.git`

  `cd omnilogue`

- Create a conda environment using the provided `environment.yml` file:

  `conda env create -f environment.yml`

- Activate the environment:

  `conda activate multi-llm-app`

- Create an API keys file named `api_keys.txt` with the following content (no double quotes):

  `OPENAI_API_KEY=your_openai_api_key`

  `ANTHROPIC_API_KEY=your_anthropic_api_key`

  `GROQ_API_KEY=your_groq_api_key`

  Replace `your_openai_api_key`, `your_anthropic_api_key`, and `your_groq_api_key` with your actual API keys.

- Run the Streamlit app:

  `streamlit run app.py`

- Open your web browser and navigate to http://localhost:8501 to use the app locally.
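The `api_keys.txt` format is a plain list of `KEY=value` lines. The sketch below shows one way such a file could be parsed into a dictionary. The function name `parse_api_keys` and its rules (skip blank lines and `#` comments, split on the first `=`, strip surrounding whitespace) are assumptions for illustration, not the app's confirmed behavior. Note that this parser keeps quote characters as part of the value, which is one reason the file should contain no double quotes.

```python
def parse_api_keys(text: str) -> dict[str, str]:
    """Parse KEY=value lines into a dict.

    Assumed rules (not confirmed by the app's source): blank lines and
    lines starting with '#' are skipped, whitespace around names and values
    is stripped, and only the first '=' separates a name from its value.
    """
    keys: dict[str, str] = {}
    for line_no, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # ignore blank lines and comments
        name, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"line {line_no}: expected KEY=value, got {raw!r}")
        keys[name.strip()] = value.strip()
    return keys


if __name__ == "__main__":
    sample = (
        "OPENAI_API_KEY=your_openai_api_key\n"
        "ANTHROPIC_API_KEY=your_anthropic_api_key\n"
        "GROQ_API_KEY=your_groq_api_key\n"
    )
    print(sorted(parse_api_keys(sample)))
```

In a Streamlit app, the text could come straight from the uploaded file in memory, for example `uploaded.getvalue().decode("utf-8")` on the object returned by `st.file_uploader`, so nothing needs to be written to disk.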

- Upload your `api_keys.txt` file using the file uploader in the app.

- Select the models you want to query from the available options.

- Enter your query in the text area.

- Click the "Submit" button to send your query to the selected models.

- View the responses from each model in the respective columns.
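Sending one query to several models at once is naturally I/O-bound, so a thread pool is a simple way to fan it out. The sketch below assumes each model is wrapped as a plain function from prompt to text. `query_models` and the stand-in clients are hypothetical; the real app would call the OpenAI, Anthropic, and Groq SDKs inside those functions, and it may use a different concurrency approach.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# A model client is any function that takes a prompt and returns a reply.
# Hypothetical abstraction: real clients would wrap the provider SDKs.
ModelClient = Callable[[str], str]


def query_models(
    prompt: str, clients: dict[str, ModelClient], selected: list[str]
) -> dict[str, str]:
    """Send `prompt` to every selected model in parallel and collect replies.

    A model that raises yields an error message instead of aborting the rest.
    """
    results: dict[str, str] = {}
    if not selected:
        return results  # ThreadPoolExecutor rejects max_workers=0
    with ThreadPoolExecutor(max_workers=len(selected)) as pool:
        futures = {name: pool.submit(clients[name], prompt) for name in selected}
        for name, future in futures.items():
            try:
                results[name] = future.result()
            except Exception as exc:  # report per-model failures
                results[name] = f"Error: {exc}"
    return results


if __name__ == "__main__":
    def echo(prompt: str) -> str:
        return prompt.upper()

    def broken(prompt: str) -> str:
        raise RuntimeError("rate limited")

    answers = query_models("hello", {"ChatGPT": echo, "Claude": broken}, ["ChatGPT", "Claude"])
    print(answers)  # {'ChatGPT': 'HELLO', 'Claude': 'Error: rate limited'}
```

Each entry in the returned dictionary could then be rendered in its own column, for example via `st.columns(len(answers))`.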

The app is currently deployed at https://omnilogue.streamlit.app. You can use this link to access the application without local setup.

Never share your API keys publicly. Keep the `api_keys.txt` file secure and do not commit it to version control. The deployed version does not store your API keys anywhere.

Contributions are welcome! Please feel free to submit a Pull Request.

This project is licensed under the MIT License - see the LICENSE file for details.