RStudio offers a polished experience for developing R programs that is difficult to reproduce in a notebook. Databricks offers two ways to keep using your favorite IDE: hosted in the cloud or on your local machine. This section covers setup and best practices for both options.


The simplest way to use RStudio with Databricks is to use Databricks Runtime (DBR) for Machine Learning 7.0+. The open source version of RStudio Server is automatically installed on the driver node of the cluster:

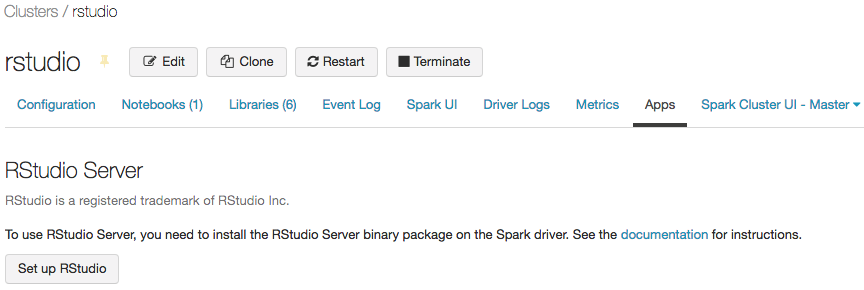

You can then launch hosted RStudio Server from the 'Apps' tab in the Cluster UI:

For earlier versions of DBR, you can attach the RStudio Server installation script to your cluster. Full details for setting up hosted RStudio Server can be found in the official Databricks documentation. If you have a license for RStudio Server Pro, you can set that up as well.

After the init script has been installed on the cluster, login information can be found in the Apps tab of the cluster UI as before.

The hosted RStudio experience should feel nearly identical to your desktop experience. In fact, you can also customize the environment further by supplying an additional init script to modify Rprofile.site.
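As a rough sketch of what such a customization might contain, the R code below is the kind of content an init script could append to Rprofile.site. The specific options shown (the CRAN mirror URL and the `digits` setting) are illustrative assumptions, not settings that Databricks requires.

```r
# Hypothetical Rprofile.site additions; every value here is an illustrative assumption.
local({
  # Point install.packages() at a fixed CRAN mirror.
  repos <- getOption("repos")
  repos["CRAN"] <- "https://cloud.r-project.org"
  options(repos = repos)

  # Print numbers with fewer significant digits by default.
  options(digits = 4)
})
```

Because Rprofile.site is sourced at startup for every R session on the machine, settings placed there would apply to all RStudio users on the cluster.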

(This section is taken from Databricks documentation.)

From the RStudio UI, you can import the SparkR package and set up a SparkR session to launch Spark jobs on your cluster.

library(SparkR)

sparkR.session()

You can also attach the sparklyr package and set up a Spark connection.

SparkR::sparkR.session()

library(sparklyr)

sc <- spark_connect(method = "databricks")

This will display the tables registered in the metastore.

Tables from the default database will be shown, but you can switch the database using sparklyr::tbl_change_db().

## Change from default database to non-default database

tbl_change_db(sc, "not_a_default_db")

Databricks clusters are treated as ephemeral computational resources - the default configuration is for the cluster to automatically terminate after 120 minutes of inactivity. This applies to your hosted instance of RStudio as well. To persist your work you'll need to use DBFS or integrate with version control.

By default, the working directory in RStudio will be on the driver node. To change it to a path on DBFS, simply set it with setwd().

setwd("/dbfs/my_folder_that_will_persist")

You can also access DBFS in the File Explorer. Click on the ... all the way to the right and enter /dbfs/ at the prompt.

Then the contents of DBFS will be available:

You can store RStudio Projects, or any other file, on DBFS. Even though your cluster is terminated at the end of your session, the work will be there for you when you return.
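As a minimal sketch of persisting work this way, the example below saves an R object under a DBFS path and reads it back. It reuses the illustrative folder name from the setwd() example; the object and file names are made up for illustration, and the fallback to a temporary directory is only there so the code also runs on a machine where /dbfs is not mounted.

```r
# Assumption: "/dbfs/my_folder_that_will_persist" is an illustrative folder name.
# Fall back to a temporary directory where DBFS is not mounted.
persist_dir <- "/dbfs/my_folder_that_will_persist"
if (!dir.exists("/dbfs")) {
  persist_dir <- file.path(tempdir(), "my_folder_that_will_persist")
}
dir.create(persist_dir, recursive = TRUE, showWarnings = FALSE)

# Save an intermediate result before the cluster terminates...
results <- data.frame(model = c("a", "b"), rmse = c(0.42, 0.37))
results_path <- file.path(persist_dir, "results.rds")
saveRDS(results, results_path)

# ...and read it back in a later session.
restored <- readRDS(results_path)
```

Anything written only to the driver's local disk, by contrast, would be lost when the cluster terminates.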

To integrate with version control, the first step is to disable websockets from within RStudio's options.

Once that is complete, a GitHub repo can be connected by creating a new project from the Project dropdown menu at the top right of RStudio. Select Version Control, and on the next window select the git repo that you want to work with on Databricks. When you click Create Project, the repo will be cloned to the subdirectory you chose on the driver node and git integration will be visible from RStudio.

At this point you can resume your usual workflow of checking out branches, committing new code, and pushing changes to the remote repo.

Instead of GitHub, you can also use the Databricks File System (DBFS) to persist files associated with the R project. Since DBFS enables users to treat buckets in object storage as local storage by prepending the write path with /dbfs/, this is very easy to do with the RStudio terminal window or with system() commands.

For example, an entire R project can be copied into DBFS via a single cp command.

system("cp -r /driver/my_r_project /dbfs/my_r_project")

Databricks Connect is a library that allows users to remotely access Spark on Databricks clusters from their local machine. At a high level the architecture looks like this:

Full details are in the Databricks Connect documentation, but essentially you install the client library locally, configure the cluster on Databricks, and then authenticate with a token. At that point you'll be able to connect to Spark on Databricks from your local RStudio instance and develop freely.
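Once the client is configured, connecting from local RStudio with sparklyr might look like the sketch below. It assumes the legacy Databricks Connect client, where sparklyr is pointed at the client's Spark home (the path reported by `databricks-connect get-spark-home`). The environment variable name `DBCONNECT_SPARK_HOME` and the helper function are hypothetical, introduced only for illustration.

```r
# Hypothetical helper: returns a sparklyr connection, or NULL when the
# (assumed) DBCONNECT_SPARK_HOME environment variable is not set.
connect_to_databricks <- function() {
  spark_home <- Sys.getenv("DBCONNECT_SPARK_HOME", unset = "")
  if (!nzchar(spark_home)) {
    message("DBCONNECT_SPARK_HOME is not set; skipping connection.")
    return(NULL)
  }
  sparklyr::spark_connect(method = "databricks", spark_home = spark_home)
}

sc <- connect_to_databricks()
if (!is.null(sc)) {
  # List the tables visible to the session, then close the connection.
  print(DBI::dbListTables(sc))
  sparklyr::spark_disconnect(sc)
}
```

Returning NULL when nothing is configured keeps the script safe to source on a machine without the client installed.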

Some important limitations to note when using the RStudio integrations:

- Loss of notebook functionality: magic commands that work in Databricks notebooks do not work within RStudio

- dbutils is not supported
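For simple file system tasks, base R functions pointed at the /dbfs/ mount can stand in for some of what dbutils would otherwise do. The sketch below lists a directory with size and type information; it is an illustration under the assumption that /dbfs is mounted on the driver, and it falls back to a temporary directory elsewhere so that it still runs.

```r
# Assumption: "/dbfs" is the DBFS mount on the driver; fall back to a temp dir elsewhere.
root <- if (dir.exists("/dbfs")) "/dbfs" else tempdir()

# Collect entry paths, then keep the size and directory flag for each one.
paths <- list.files(root, full.names = TRUE)
listing <- file.info(paths)[, c("size", "isdir")]
print(listing)
```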