
🤗 Transformers provides thousands of pretrained models to perform tasks on different modalities such as text, vision, and audio.

These models can be applied to:

- 📝 Text: text classification, information extraction, question answering, summarization, translation, and text generation in over 100 languages.

- 🖼️ Images: image classification, object detection, and segmentation.

- 🗣️ Audio: speech recognition and audio classification.

Transformer models can also perform tasks that combine several modalities, such as table question answering, optical character recognition, information extraction from scanned documents, video classification, and visual question answering.

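🤗 Transformers provides APIs to quickly download these pretrained models and use them on a given text, fine-tune them on your own datasets, and then share them with the community on our model hub. At the same time, each Python module defining a model architecture is fully standalone, so it can be easily modified for research experiments.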

🤗 Transformers supports the three most popular deep learning libraries, JAX, PyTorch, and TensorFlow, with seamless integration between them. It's straightforward to train your model with one library and then load it for inference with another.

You can test most of our models directly on their pages on the model hub. We also offer private model hosting, versioning, and an inference API for public and private models.

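Here are a few examples:

Natural Language Processing:

- Masked word completion with BERT

- Named entity recognition with Electra

- Text generation with GPT-2

- Natural language inference with RoBERTa

- Summarization with BART

- Question answering with DistilBERT

- Translation with T5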

Computer Vision:

- Image classification with ViT

- Object detection with DETR

- Semantic segmentation with SegFormer

- Panoptic segmentation with Mask2Former

- Depth estimation with Depth Anything

- Video classification with VideoMAE

- Universal segmentation with OneFormer

Audio:

Multimodal tasks:

- Table question answering with TAPAS

- Visual question answering with ViLT

- Image captioning with LLaVa

- Zero-shot image classification with SigLIP

- Document question answering with LayoutLM

- Zero-shot video classification with X-CLIP

- Zero-shot object detection with OWLv2

- Zero-shot image segmentation with CLIPSeg

- Automatic mask generation with SAM

Write With Transformer, built by the Hugging Face team, is the official demo of this repository's text generation capabilities.

Transformers is more than a toolkit for using pretrained models: it is a community of projects built with it and the Hugging Face Hub. We want Transformers to enable developers, researchers, students, professors, engineers, and anyone else to build their dream projects.

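To celebrate Transformers reaching 100,000 stars, we wanted to put the spotlight on the community, so we created the awesome-transformers page, which lists 100 incredible projects built with Transformers.

If you own or use a project that you believe should be part of the list, please open a PR to add it!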

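To use a model on a given input (text, image, audio, ...) right away, we provide the pipeline API. Pipelines bundle a pretrained model together with the preprocessing that was used during that model's training. Here is how to quickly use a pipeline to classify positive versus negative texts: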

```python
>>> from transformers import pipeline

# Allocate a pipeline for sentiment analysis
>>> classifier = pipeline('sentiment-analysis')
>>> classifier('We are very happy to introduce pipeline to the transformers repository.')
[{'label': 'POSITIVE', 'score': 0.9996980428695679}]
```

The second line of code downloads and caches the pretrained model used by the pipeline, and the third line evaluates it on the given text. Here, the model judged the text to be positive with a confidence of 99.97%.
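To make the "pretrained model plus its preprocessing" idea more concrete, here is a toy sketch of the three stages a pipeline chains together: preprocessing, a forward pass, and post-processing that turns raw logits into a label and a score. Everything in it is hypothetical (the ToyPipeline class, the keyword-counting "model", the word list), and it assumes the score is a softmax probability over the labels, which is a common way to score single-label classification. It is an illustration of the concept, not the library's implementation of pipeline.

```python
import math
from typing import Callable, Dict, List


def softmax(logits: List[float]) -> List[float]:
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max logit for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


class ToyPipeline:
    """Hypothetical stand-in that chains preprocessing, a model, and post-processing."""

    def __init__(self, preprocess: Callable[[str], List[int]],
                 model: Callable[[List[int]], List[float]],
                 id2label: Dict[int, str]):
        self.preprocess = preprocess
        self.model = model
        self.id2label = id2label

    def __call__(self, text: str) -> List[Dict[str, object]]:
        features = self.preprocess(text)  # text -> model inputs
        logits = self.model(features)  # model inputs -> one raw score per label
        probs = softmax(logits)  # raw scores -> probabilities
        best = max(range(len(probs)), key=probs.__getitem__)
        return [{"label": self.id2label[best], "score": probs[best]}]


# Hypothetical components: a word-level "tokenizer" and a keyword-counting "model".
POSITIVE_WORDS = {"happy", "great", "love"}


def toy_preprocess(text: str) -> List[int]:
    # 1 for each positive keyword, 0 for every other word.
    return [int(w.strip(".,!?").lower() in POSITIVE_WORDS) for w in text.split()]


def toy_model(features: List[int]) -> List[float]:
    pos = float(sum(features))
    return [-pos, pos]  # [NEGATIVE logit, POSITIVE logit]


toy_classifier = ToyPipeline(toy_preprocess, toy_model, {0: "NEGATIVE", 1: "POSITIVE"})
print(toy_classifier("We are very happy to introduce pipeline to the transformers repository."))
```

Replacing toy_preprocess and toy_model with a real tokenizer and model is, conceptually, the work that pipeline('sentiment-analysis') does for you.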

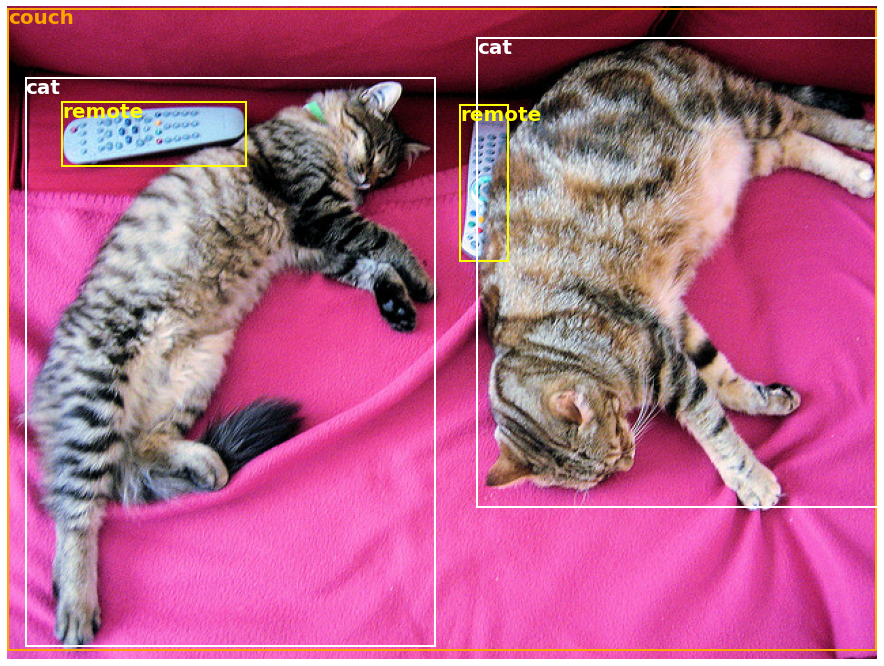

Beyond natural language processing (NLP), many computer vision and speech tasks also have pretrained pipelines ready to use. For example, we can easily detect the objects in an image:

```python
>>> import requests
>>> from PIL import Image
>>> from transformers import pipeline

# Download an image with cute cats
>>> url = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/coco_sample.png"
>>> image_data = requests.get(url, stream=True).raw
>>> image = Image.open(image_data)

# Allocate a pipeline for object detection
>>> object_detector = pipeline('object-detection')
>>> object_detector(image)
[{'score': 0.9982201457023621,
  'label': 'remote',
  'box': {'xmin': 40, 'ymin': 70, 'xmax': 175, 'ymax': 117}},
 {'score': 0.9960021376609802,
  'label': 'remote',
  'box': {'xmin': 333, 'ymin': 72, 'xmax': 368, 'ymax': 187}},
 {'score': 0.9954745173454285,
  'label': 'couch',
  'box': {'xmin': 0, 'ymin': 1, 'xmax': 639, 'ymax': 473}},
 {'score': 0.9988006353378296,
  'label': 'cat',
  'box': {'xmin': 13, 'ymin': 52, 'xmax': 314, 'ymax': 470}},
 {'score': 0.9986783862113953,
  'label': 'cat',
  'box': {'xmin': 345, 'ymin': 23, 'xmax': 640, 'ymax': 368}}]
```

Here, we get a list of the objects detected in the image, each with a bounding box and a confidence score. The original image is shown on the left, with the predictions displayed on the right.
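The pipeline returns plain Python dictionaries, so post-processing needs no extra tooling. As a minimal sketch, the helpers below (filter_detections and box_size are hypothetical names, not library functions) keep only high-confidence detections from the output shown above and convert each box from corner coordinates to width and height. The 0.998 threshold is an arbitrary choice for illustration.

```python
from collections import Counter
from typing import Dict, List, Tuple


def filter_detections(detections: List[dict], min_score: float) -> List[dict]:
    """Keep only detections whose confidence score is at least min_score."""
    return [d for d in detections if d["score"] >= min_score]


def box_size(box: Dict[str, int]) -> Tuple[int, int]:
    """Convert an xmin/ymin/xmax/ymax box into (width, height) in pixels."""
    return box["xmax"] - box["xmin"], box["ymax"] - box["ymin"]


# The object_detector output shown above, copied as plain Python data.
detections = [
    {"score": 0.9982201457023621, "label": "remote",
     "box": {"xmin": 40, "ymin": 70, "xmax": 175, "ymax": 117}},
    {"score": 0.9960021376609802, "label": "remote",
     "box": {"xmin": 333, "ymin": 72, "xmax": 368, "ymax": 187}},
    {"score": 0.9954745173454285, "label": "couch",
     "box": {"xmin": 0, "ymin": 1, "xmax": 639, "ymax": 473}},
    {"score": 0.9988006353378296, "label": "cat",
     "box": {"xmin": 13, "ymin": 52, "xmax": 314, "ymax": 470}},
    {"score": 0.9986783862113953, "label": "cat",
     "box": {"xmin": 345, "ymin": 23, "xmax": 640, "ymax": 368}},
]

confident = filter_detections(detections, min_score=0.998)  # arbitrary threshold
print(Counter(d["label"] for d in confident))
for d in confident:
    print(d["label"], box_size(d["box"]))
```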

You can learn more about the tasks supported by the pipeline API in this tutorial.

Downloading and using a pretrained model for your task takes just three lines of code. Here is the PyTorch version:

```python
>>> from transformers import AutoTokenizer, AutoModel

>>> tokenizer = AutoTokenizer.from_pretrained("google-bert/bert-base-uncased")
>>> model = AutoModel.from_pretrained("google-bert/bert-base-uncased")

>>> inputs = tokenizer("Hello world!", return_tensors="pt")
>>> outputs = model(**inputs)
```

And here is the TensorFlow version:

```python
>>> from transformers import AutoTokenizer, TFAutoModel

>>> tokenizer = AutoTokenizer.from_pretrained("google-bert/bert-base-uncased")
>>> model = TFAutoModel.from_pretrained("google-bert/bert-base-uncased")

>>> inputs = tokenizer("Hello world!", return_tensors="tf")
>>> outputs = model(**inputs)
```

The tokenizer is responsible for all the preprocessing the pretrained model expects, and it can be called directly on a single string (as in the examples above) or on a list. It returns a dictionary that you can use in downstream code or pass directly to your model with the ** argument-unpacking operator.
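The ** step is ordinary Python keyword-argument unpacking: each key in the dictionary becomes a named argument of the call. The sketch below shows that mechanism with a hypothetical stand_in_model function in place of a real model, and a hand-written dictionary shaped like tokenizer output for one sentence; the token IDs are illustrative values, not output captured from the real tokenizer.

```python
from typing import Dict, List, Optional


def stand_in_model(input_ids: List[List[int]],
                   attention_mask: List[List[int]],
                   token_type_ids: Optional[List[List[int]]] = None) -> Dict[str, object]:
    """Hypothetical stand-in for model(...): reports what it received instead of running a network."""
    return {
        "batch_size": len(input_ids),
        "seq_len": len(input_ids[0]),
        "attended_tokens": sum(sum(row) for row in attention_mask),
        "has_token_type_ids": token_type_ids is not None,
    }


# A hand-written dict shaped like tokenizer output for one short sentence.
# The IDs are illustrative, not captured from the real tokenizer.
inputs = {
    "input_ids": [[101, 7592, 2088, 999, 102]],
    "token_type_ids": [[0, 0, 0, 0, 0]],
    "attention_mask": [[1, 1, 1, 1, 1]],
}

# ** passes every key as a keyword argument, i.e. the same as
# stand_in_model(input_ids=..., token_type_ids=..., attention_mask=...)
outputs = stand_in_model(**inputs)
print(outputs)
```

If the dictionary contained a key the function does not accept, Python would raise a TypeError, so unpacking only works when the dictionary keys match the callee's parameter names.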

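The model itself is a regular PyTorch nn.Module or TensorFlow tf.keras.Model (depending on your backend), which you can use as usual. This tutorial explains how to integrate such a model into a classic PyTorch or TensorFlow training loop, or how to use the Trainer API to quickly fine-tune it on a new dataset.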

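- Easy-to-use state-of-the-art models:
  - High performance on natural language understanding (NLU) and generation (NLG), computer vision, and audio tasks.
  - Low barrier to entry for educators and practitioners.
  - Only three classes to learn before you can get started.
  - A single API for using all pretrained models.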

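- Lower compute costs, smaller carbon footprint:
  - Researchers can share trained models instead of repeatedly retraining them.
  - Practitioners can save the time and cost that training requires.
  - Dozens of architectures with over 400,000 pretrained models across all modalities.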

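- Choose the right framework for every part of a model's lifetime:
  - Train state-of-the-art models in 3 lines of code.
  - Move a model between the TF2.0/PyTorch/JAX frameworks to suit your purpose.
  - Freely pick the right framework for each stage, such as training, evaluation, and release.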

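- Easily customize a model or an example to your needs:
  - We provide examples for each architecture to reproduce the results published by its original authors.
  - Model internals are exposed as consistently as possible.
  - Model files can be used independently of the library for quick experiments.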

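Limitations to keep in mind:

- This library is not a modular toolbox of building blocks for neural networks. The code in the model files deliberately avoids extra layers of abstraction, so that researchers can work on each model directly without digging through additional files.

- The training API is not intended to work with every model; it is optimized for the models provided by the library. For generic machine learning loops, use another library (for example, Accelerate).

- While we strive to present as many use cases as possible, the scripts in the examples folder are just that: examples. They may not work out of the box on your specific problem, and you may need to change a few lines of code to adapt them to your needs.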

This repository is tested on Python 3.9+, Flax 0.4.1+, PyTorch 2.0+, and TensorFlow 2.6+.
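If you are unsure whether an existing environment falls within the tested versions listed above, the standard library is enough for a quick check. This is a minimal sketch: MINIMUMS simply restates the versions above, the distribution names (torch, tensorflow, flax) are assumptions about how each backend was installed, and parse_version is a deliberately simplified parser that drops pre-release and local tags (the packaging library's version handling would be more robust). Versions below these minimums are untested rather than necessarily broken.

```python
import sys
from importlib import metadata
from typing import Optional, Tuple

# Minimum versions the repository is tested against, restated from the text above.
MINIMUMS = {"torch": "2.0", "tensorflow": "2.6", "flax": "0.4.1"}


def parse_version(text: str) -> Tuple[int, ...]:
    """Simplified parser: '2.1.0+cu118' -> (2, 1, 0), '2.6.0rc1' -> (2, 6, 0).

    Keeps only the leading numeric release segments and drops local tags
    (after '+') and pre-release suffixes such as 'rc1'.
    """
    parts = []
    for piece in text.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
        if len(digits) < len(piece):  # a suffix like 'rc1' ends the release part
            break
    return tuple(parts)


def meets_minimum(installed: str, minimum: str) -> bool:
    """Compare release tuples after zero-padding, so '2.0' equals '2.0.0'."""
    a, b = parse_version(installed), parse_version(minimum)
    width = max(len(a), len(b))
    return a + (0,) * (width - len(a)) >= b + (0,) * (width - len(b))


def check_backend(package: str) -> Optional[bool]:
    """True/False when the package is installed, None when it is not."""
    try:
        installed = metadata.version(package)
    except metadata.PackageNotFoundError:
        return None
    return meets_minimum(installed, MINIMUMS[package])


print("Python >= 3.9:", sys.version_info >= (3, 9))
for pkg in MINIMUMS:
    print(pkg, check_backend(pkg))
```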

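You should install 🤗 Transformers in a virtual environment. If you're unfamiliar with Python virtual environments, check out the user guide.

First, create a virtual environment with the version of Python you're going to use, and activate it.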

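Then, you will need to install at least one of Flax, PyTorch, or TensorFlow. Refer to the TensorFlow installation page, the PyTorch installation page, and the Flax installation page for the installation command specific to your platform.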
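Before installing 🤗 Transformers, you can confirm which of these backends the active environment can already import. A minimal sketch using only the standard library: importlib.util.find_spec locates a top-level package without importing it, which keeps the check fast. The import names torch, tensorflow, and flax are the usual ones for these projects, and the available_backends helper is hypothetical.

```python
import importlib.util
from typing import List

# Usual top-level import names of the three supported backends.
BACKENDS = ("torch", "tensorflow", "flax")


def available_backends() -> List[str]:
    """Return the backends that are importable, without importing them."""
    return [name for name in BACKENDS if importlib.util.find_spec(name) is not None]


found = available_backends()
if found:
    print("Backends available:", ", ".join(found))
else:
    print("No backend found: install at least one of Flax, PyTorch, or TensorFlow first.")
```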

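Once at least one of these backends is installed, 🤗 Transformers can be installed with pip as follows:

```bash
pip install transformers
```

If you'd like to try the examples, need the bleeding-edge code, or can't wait for a new release, you must install the library from source.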

🤗 Transformers can also be installed with conda as follows:

```bash
conda install conda-forge::transformers
```

Note: Installing transformers from the huggingface channel is deprecated.

Follow the installation pages of Flax, PyTorch, or TensorFlow to see how to install them with conda.

Note: On Windows, you may be prompted to activate Developer Mode in order to benefit from caching. If this is not an option for you, please let us know in this issue.

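All the model checkpoints provided by 🤗 Transformers are seamlessly integrated with the huggingface.co model hub, where users and organizations can upload them directly.

🤗 Transformers provides a wide range of model architectures; see here for a summary of each one.

To check whether each model has an implementation in Flax, PyTorch, or TensorFlow, or uses a tokenizer backed by the 🤗 Tokenizers library, refer to this table.

These implementations have been tested on several datasets (see the example scripts) and should match the performance of the original implementations. You can find more details on performance in the Examples section of the documentation.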

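| Section | Description |
|---|---|
| Documentation | Full API documentation and tutorials |
| Task summary | Tasks supported by 🤗 Transformers |
| Preprocessing tutorial | Using the Tokenizer class to prepare data for the models |
| Training and fine-tuning | Using the models provided by 🤗 Transformers in a PyTorch/TensorFlow training loop and with the Trainer API |
| Quick tour: Fine-tuning/usage scripts | Example scripts for fine-tuning models on a wide range of tasks |
| Model sharing and uploading | Upload and share your fine-tuned models with the community |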

If you want to cite the 🤗 Transformers library, please cite this paper:

```bibtex
@inproceedings{wolf-etal-2020-transformers,
    title = "Transformers: State-of-the-Art Natural Language Processing",
    author = "Thomas Wolf and Lysandre Debut and Victor Sanh and Julien Chaumond and Clement Delangue and Anthony Moi and Pierric Cistac and Tim Rault and Rémi Louf and Morgan Funtowicz and Joe Davison and Sam Shleifer and Patrick von Platen and Clara Ma and Yacine Jernite and Julien Plu and Canwen Xu and Teven Le Scao and Sylvain Gugger and Mariama Drame and Quentin Lhoest and Alexander M. Rush",
    booktitle = "Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations",
    month = oct,
    year = "2020",
    address = "Online",
    publisher = "Association for Computational Linguistics",
    url = "https://www.aclweb.org/anthology/2020.emnlp-demos.6",
    pages = "38--45"
}
```