diff --git a/README.md b/README.md

index c336d4c..dbb6893 100644

--- a/README.md

+++ b/README.md

@@ -1 +1,96 @@

-# perception_benchmark_tool

\ No newline at end of file

+# perception_benchmark_tool

+

+This package contains a benchmark tool for testing the perception stack of Autoware.Universe with the Waymo dataset.

+

+## Installation

+

+

+For testing 3D Object Tracking with Waymo Dataset, follow the given steps.

+

+1- Download the Waymo dataset validation segment(.tfrecord) files from the given link. Unpack it to the desired directory.

+

+

+

+

+```bash

+cd ~/Downloads/

+tar -xvf validation_validation_0000.tar

+```

+

+Change the dataset folder from "benchmark_runner.launch.py"

+

+2- Install the Waymo Open Dataset Toolkit.

+

+```bash

+mkdir -p ~/perception_benchmark_ws/src

+cd ~/perception_benchmark_ws/src

+git clone https://github.com/autowarefoundation/perception_benchmark_tool.git

+pip3 install protobuf==3.9.2 numpy==1.19.2

+pip3 install waymo-open-dataset-tf-2-6-0

+```

+

+3- For running Autoware.Universe with the Waymo evaluation node,

+

+Lidar point clouds and camera images are encoded in the .tfrecord file. It may take about ~60-90 seconds to decode

+the data back.

+

+Go to the Autoware folder and source it,

+```bash

+source install/setup.bash

+```

+

+Build perception benchmark tool,

+```bash

+cd ~/perception_benchmark_ws/

+rosdep install -y --from-paths src --ignore-src --rosdistro $ROS_DISTRO

+colcon build

+source install/setup.bash

+```

+

+Run perception benchmark tool,

+```bash

+ros2 launch perception_benchmark_tool benchmark_runner.launch.py

+```

+

+This command will run the perception stack with the Waymo Dataset. We will get the ground truth and prediction files in the file

+paths we give as arguments to the launch file.

+

+Lidar point clouds and camera images are encoded in the .tfrecord file. It may take about ~60-90 seconds to decode

+the data back for each segment file.

+

+4- Install Waymo Open Dataset toolkit for metric computation:

+

+Follow the given command or instruction provided by the Waymo:

+

+```bash

+git clone https://github.com/waymo-research/waymo-open-dataset.git waymo-od

+cd waymo-od

+git checkout remotes/origin/master

+sudo apt-get install --assume-yes pkg-config zip g++ zlib1g-dev unzip python3 python3-pip

+BAZEL_VERSION=3.1.0

+wget https://github.com/bazelbuild/bazel/releases/download/${BAZEL_VERSION}/bazel-${BAZEL_VERSION}-installer-linux-x86_64.sh

+sudo bash bazel-${BAZEL_VERSION}-installer-linux-x86_64.sh

+sudo apt install build-essential

+./configure.sh

+bazel clean

+bazel build waymo_open_dataset/metrics/tools/compute_tracking_metrics_main

+```

+

+5- Evaluate tracking result

+

+```bash

+bazel-bin/waymo_open_dataset/metrics/tools/compute_tracking_metrics_main \

+~/benchmark_result/predictions.bin ~/benchmark_result/gt.bin

+```

+

+## Result

+

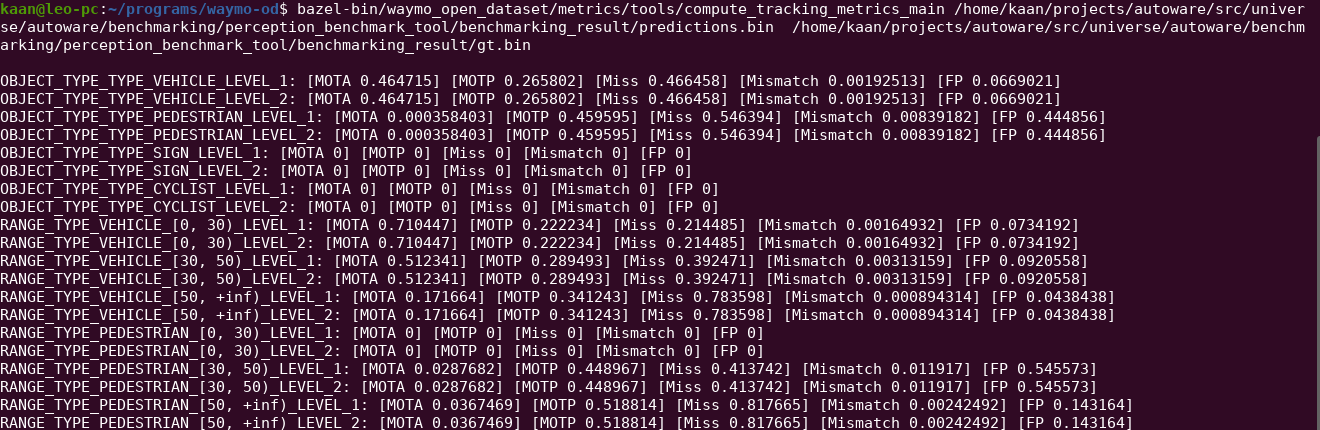

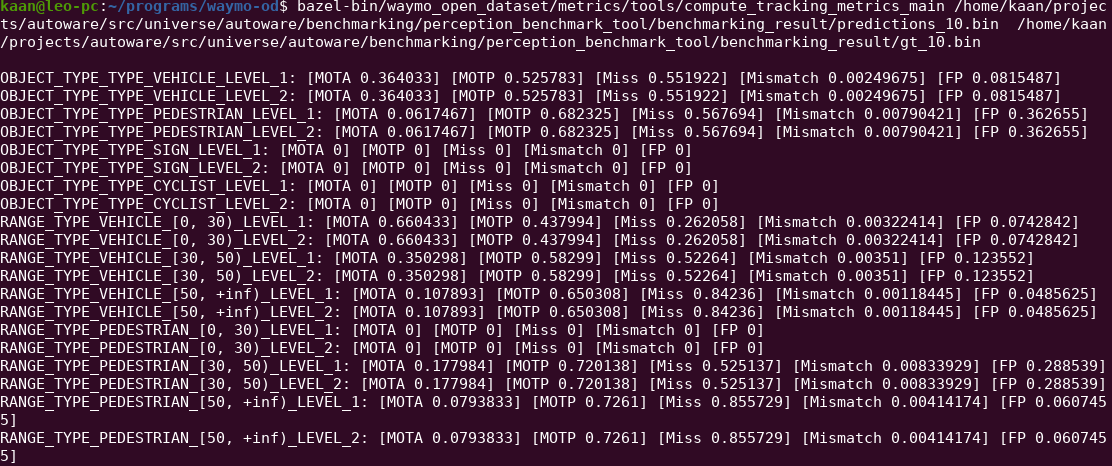

+The evaluation result of the Perception pipeline on the Waymo Dataset is presented below.

+

+### lidar_centerpoint

+

+

+

+### lidar_apollo_instance_segmentation

+

+

diff --git a/launch/benchmark_runner.launch.py b/launch/benchmark_runner.launch.py

new file mode 100644

index 0000000..2af0c7a

--- /dev/null

+++ b/launch/benchmark_runner.launch.py

@@ -0,0 +1,103 @@

+import os

+from os.path import join as joinPath

+

+from launch import LaunchDescription

+from launch.actions import DeclareLaunchArgument

+from launch.substitutions import LaunchConfiguration

+from launch_ros.actions import Node

+

+

+def generate_launch_description():

+

+ dataset_path_launch_arg = DeclareLaunchArgument(

+ "dataset_path",

+ default_value=joinPath(os.environ["HOME"], "validation_validation_0000"),

+ description="",

+ )

+

+ use_camera_launch_arg = DeclareLaunchArgument(

+ "use_camera",

+ default_value="False",

+ description="",

+ )

+

+ result_path_launch_arg = DeclareLaunchArgument(

+ "result_path",

+ default_value=joinPath(os.environ["HOME"], "benchmark_result"),

+ description="",

+ )

+

+ benchmark_frame_launch_arg = DeclareLaunchArgument("benchmark_frame", default_value="base_link")

+

+ launch_file_launch_arg = DeclareLaunchArgument(

+ "launch_file",

+ default_value="waymo_perception.launch.xml",

+ description="Launch file for testing perception stack",

+ )

+

+ vehicle_model_launch_arg = DeclareLaunchArgument(

+ "vehicle_model",

+ default_value="sample_vehicle",

+ description="",

+ )

+

+ sensor_model_launch_arg = DeclareLaunchArgument(

+ "sensor_model",

+ default_value="sample_sensor_kit",

+ description="",

+ )

+

+ benchmark_node = Node(

+ package="perception_benchmark_tool",

+ name="benchmark_node",

+ executable="benchmark_node",

+ output="screen",

+ parameters=[

+ {

+ "result_path": LaunchConfiguration("result_path"),

+ "benchmark_frame": LaunchConfiguration("benchmark_frame"),

+ }

+ ],

+ )

+

+ waymo_player_node = Node(

+ package="perception_benchmark_tool",

+ name="waymo_player_node",

+ executable="waymo_player_node",

+ output="screen",

+ parameters=[

+ {

+ "dataset_path": LaunchConfiguration("dataset_path"),

+ "use_camera": LaunchConfiguration("use_camera"),

+ }

+ ],

+ )

+

+ autoware_workflow_runner_node = Node(

+ package="perception_benchmark_tool",

+ name="autoware_workflow_runner_node",

+ executable="autoware_workflow_runner_node",

+ output="screen",

+ parameters=[

+ {

+ "launch_file": LaunchConfiguration("launch_file"),

+ "vehicle_model": LaunchConfiguration("vehicle_model"),

+ "sensor_model": LaunchConfiguration("sensor_model"),

+ }

+ ],

+ )

+

+ return LaunchDescription(

+ [

+ dataset_path_launch_arg,

+ use_camera_launch_arg,

+ benchmark_frame_launch_arg,

+ result_path_launch_arg,

+ launch_file_launch_arg,

+ vehicle_model_launch_arg,

+ sensor_model_launch_arg,

+ benchmark_node,

+ waymo_player_node,

+ autoware_workflow_runner_node,

+ ]

+ )

diff --git a/launch/pointcloud_preprocessor.launch.py b/launch/pointcloud_preprocessor.launch.py

new file mode 100644

index 0000000..c0c0f26

--- /dev/null

+++ b/launch/pointcloud_preprocessor.launch.py

@@ -0,0 +1,107 @@

+# Copyright 2020 Tier IV, Inc. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+

+import launch

+from launch.actions import DeclareLaunchArgument

+from launch.actions import OpaqueFunction

+from launch.actions import SetLaunchConfiguration

+from launch.conditions import IfCondition

+from launch.conditions import UnlessCondition

+from launch.substitutions import LaunchConfiguration

+from launch_ros.actions import ComposableNodeContainer

+from launch_ros.actions import LoadComposableNodes

+from launch_ros.descriptions import ComposableNode

+

+

+def launch_setup(context, *args, **kwargs):

+

+ # set concat filter as a component

+ concat_component = ComposableNode(

+ package="pointcloud_preprocessor",

+ plugin="pointcloud_preprocessor::PointCloudConcatenateDataSynchronizerComponent",

+ name="concatenate_data",

+ remappings=[("output", "/sensing/lidar/concatenated/pointcloud")],

+ parameters=[

+ {

+ "input_topics": [

+ "/point_cloud/front_lidar",

+ "/point_cloud/rear_lidar",

+ "/point_cloud/side_left_lidar",

+ "/point_cloud/side_right_lidar",

+ "/point_cloud/top_lidar",

+ ],

+ "output_frame": LaunchConfiguration("base_frame"),

+ }

+ ],

+ extra_arguments=[{"use_intra_process_comms": LaunchConfiguration("use_intra_process")}],

+ )

+

+ # set container to run all required components in the same process

+ container = ComposableNodeContainer(

+ name=LaunchConfiguration("container_name"),

+ namespace="",

+ package="rclcpp_components",

+ executable=LaunchConfiguration("container_executable"),

+ composable_node_descriptions=[],

+ condition=UnlessCondition(LaunchConfiguration("use_pointcloud_container")),

+ output="screen",

+ )

+

+ target_container = (

+ container

+ if UnlessCondition(LaunchConfiguration("use_pointcloud_container")).evaluate(context)

+ else LaunchConfiguration("container_name")

+ )

+

+ # load concat or passthrough filter

+ concat_loader = LoadComposableNodes(

+ composable_node_descriptions=[concat_component],

+ target_container=target_container,

+ condition=IfCondition(LaunchConfiguration("use_concat_filter")),

+ )

+

+ return [container, concat_loader]

+

+

+def generate_launch_description():

+

+ launch_arguments = []

+

+ def add_launch_arg(name: str, default_value=None):

+ launch_arguments.append(DeclareLaunchArgument(name, default_value=default_value))

+

+ add_launch_arg("base_frame", "base_link")

+ add_launch_arg("use_multithread", "False")

+ add_launch_arg("use_intra_process", "False")

+ add_launch_arg("use_pointcloud_container", "False")

+ add_launch_arg("container_name", "pointcloud_preprocessor_container")

+

+ set_container_executable = SetLaunchConfiguration(

+ "container_executable",

+ "component_container",

+ condition=UnlessCondition(LaunchConfiguration("use_multithread")),

+ )

+

+ set_container_mt_executable = SetLaunchConfiguration(

+ "container_executable",

+ "component_container_mt",

+ condition=IfCondition(LaunchConfiguration("use_multithread")),

+ )

+

+ return launch.LaunchDescription(

+ launch_arguments

+ + [set_container_executable, set_container_mt_executable]

+ + [OpaqueFunction(function=launch_setup)]

+ )

diff --git a/launch/waymo_perception.launch.xml b/launch/waymo_perception.launch.xml

new file mode 100644

index 0000000..328fbd1

--- /dev/null

+++ b/launch/waymo_perception.launch.xml

@@ -0,0 +1,48 @@

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

diff --git a/package.xml b/package.xml

new file mode 100644

index 0000000..0edae5b

--- /dev/null

+++ b/package.xml

@@ -0,0 +1,31 @@

+

+

+

+ perception_benchmark_tool

+ 0.0.0

+ This package benchmark Autoware perception stack on Waymo dataset

+ Kaan Colak

+ Apache License 2.0

+

+ ament_copyright

+ ament_flake8

+ ament_pep257

+ python3-pytest

+

+ autoware_auto_perception_msgs

+ global_parameter_loader

+ rviz2

+ tier4_perception_msgs

+

+ cv_bridge

+ geometry_msgs

+ std_srvs

+ tf2

+ tf2_geometry_msgs

+ tf2_ros

+ tf_transformations

+

+

+ ament_python

+

+

diff --git a/perception_benchmark_tool/__init__.py b/perception_benchmark_tool/__init__.py

new file mode 100644

index 0000000..e69de29

diff --git a/perception_benchmark_tool/autoware_workflow_runner_node.py b/perception_benchmark_tool/autoware_workflow_runner_node.py

new file mode 100644

index 0000000..95a4d7c

--- /dev/null

+++ b/perception_benchmark_tool/autoware_workflow_runner_node.py

@@ -0,0 +1,154 @@

+# Copyright 2018 Autoware Foundation. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+import signal

+from subprocess import DEVNULL

+from subprocess import Popen

+

+from autoware_auto_perception_msgs.msg import TrackedObjects

+import psutil

+import rclpy

+from rclpy.node import Node

+from std_msgs.msg import Bool

+from std_srvs.srv import Trigger

+

+

+class RunnerNode(Node):

+ def __init__(self):

+ super().__init__("autoware_workflow_runner_node")

+

+ self.declare_parameter("launch_file", "")

+ self.launch_file = self.get_parameter("launch_file").get_parameter_value().string_value

+

+ self.declare_parameter("vehicle_model", "")

+ self.vehicle_model = self.get_parameter("vehicle_model").get_parameter_value().string_value

+

+ self.declare_parameter("sensor_model", "")

+ self.sensor_model = self.get_parameter("sensor_model").get_parameter_value().string_value

+

+ self.autoware_pid = None

+ self.timer_subs_checker = None

+

+ self.client_read_dataset_futures = []

+ self.client_read_dataset = self.create_client(Trigger, "read_current_segment")

+ while not self.client_read_dataset.wait_for_service(timeout_sec=3.0):

+ self.get_logger().info("service not available, waiting again...")

+

+ self.client_read_frame_futures = []

+ self.client_read_dataset_frame = self.create_client(Trigger, "send_frame")

+ while not self.client_read_dataset_frame.wait_for_service(timeout_sec=3.0):

+ self.get_logger().info("service not available, waiting again...")

+

+ self.sub_segment_finished = self.create_subscription(

+ Bool, "segment_finished", self.segment_finished_callback, 1

+ )

+

+ self.sub_tracking = self.create_subscription(

+ TrackedObjects,

+ "/perception/object_recognition/tracking/objects",

+ self.tracked_objects_callback,

+ 10,

+ )

+

+ self.read_dataset_request()

+

+ def spin(self):

+

+ while rclpy.ok():

+ rclpy.spin_once(self)

+

+ incomplete_read_dataset_futures = []

+ for f in self.client_read_dataset_futures:

+ if f.done():

+ response = f.result()

+ if response.success:

+ self.autoware_pid = self.run_autoware()

+ self.timer_subs_checker = self.create_timer(

+ 2, self.wait_until_autoware_subs_ready

+ )

+ else:

+ incomplete_read_dataset_futures.append(f)

+

+ self.client_read_dataset_futures = incomplete_read_dataset_futures

+

+ incomplete_send_frame_futures = []

+ for f in self.client_read_frame_futures:

+ if f.done():

+ response = f.result()

+ else:

+ incomplete_send_frame_futures.append(f)

+

+ self.client_read_frame_futures = incomplete_send_frame_futures

+

+ def read_dataset_request(self):

+ req = Trigger.Request()

+ self.client_read_dataset_futures.append(self.client_read_dataset.call_async(req))

+

+ def read_frame_request(self):

+ req = Trigger.Request()

+ self.client_read_frame_futures.append(self.client_read_dataset_frame.call_async(req))

+

+ def segment_finished_callback(self, ready):

+ self.get_logger().info("Autoware is being killed. ")

+ self.kill_autoware(self.autoware_pid)

+ self.read_dataset_request()

+

+ def wait_until_autoware_subs_ready(self):

+

+ self.get_logger().info("Waiting for Autoware's subscriber to be ready")

+

+ if self.check_lidar_model_ready():

+ self.get_logger().info("Autoware ready.")

+ self.read_frame_request()

+ self.destroy_timer(self.timer_subs_checker)

+

+ def run_autoware(self):

+ cmd = (

+ "ros2 launch perception_benchmark_tool "

+ + self.launch_file

+ + " vehicle_model:="

+ + self.vehicle_model

+ + " sensor_model:="

+ + self.sensor_model

+ )

+ launch_process = Popen(cmd, text=False, shell=True, stdout=DEVNULL)

+ return launch_process.pid

+

+ def kill_autoware(self, parent_pid, sig=signal.SIGTERM):

+ try:

+ parent = psutil.Process(parent_pid)

+ except psutil.NoSuchProcess:

+ return

+ children = parent.children(recursive=True)

+ for process in children:

+ process.send_signal(sig)

+

+ def check_lidar_model_ready(self):

+ centerpoint_ready = self.count_publishers(

+ "/perception/object_recognition/detection/centerpoint/objects"

+ )

+ apollo_ready = self.count_publishers(

+ "/perception/object_recognition/detection/apollo/labeled_clusters"

+ )

+ return bool(centerpoint_ready or apollo_ready)

+

+ def tracked_objects_callback(self, tracked_objects):

+ self.read_frame_request()

+

+

+def main(args=None):

+ rclpy.init(args=args)

+ autoware_workflow_runner_node = RunnerNode()

+ autoware_workflow_runner_node.spin()

+ rclpy.shutdown()

diff --git a/perception_benchmark_tool/benchmark_node.py b/perception_benchmark_tool/benchmark_node.py

new file mode 100644

index 0000000..36689aa

--- /dev/null

+++ b/perception_benchmark_tool/benchmark_node.py

@@ -0,0 +1,231 @@

+# Copyright 2018 Autoware Foundation. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+import os

+

+from autoware_auto_perception_msgs.msg import ObjectClassification

+from autoware_auto_perception_msgs.msg import TrackedObjects

+from geometry_msgs.msg import PoseStamped

+from message_filters import Subscriber

+from message_filters import TimeSynchronizer

+from perception_benchmark_tool.benchmark_tools.math_utils import euler_from_quaternion

+from perception_benchmark_tool.benchmark_tools.ros_utils import do_transform_pose_stamped

+import rclpy

+from rclpy.node import Node

+from std_msgs.msg import Bool

+from tf2_ros import TransformException

+from tf2_ros.buffer import Buffer

+from tf2_ros.transform_listener import TransformListener

+from waymo_open_dataset import label_pb2

+from waymo_open_dataset.protos import metrics_pb2

+

+

+def generate_context_name():

+ generate_context_name.__dict__.setdefault("count", 0)

+ generate_context_name.count += 1

+ return "context_" + str(generate_context_name.count)

+

+

+class PerceptionBenchmark(Node):

+ def __init__(self):

+ super().__init__("benchmark_node")

+

+ self.declare_parameter("benchmark_frame", "base_link")

+ self.benchmark_frame = (

+ self.get_parameter("benchmark_frame").get_parameter_value().string_value

+ )

+

+ self.declare_parameter("result_path", "")

+ self.result_path = self.get_parameter("result_path").get_parameter_value().string_value

+

+ if not os.path.exists(self.result_path):

+ os.makedirs(self.result_path)

+

+ self.prediction_result_path = self.result_path + "/prediction_result.bin"

+ self.gt_path = self.result_path + "/ground_truth_result.bin"

+

+ self.tf_buffer = Buffer()

+ self.tf_listener = TransformListener(buffer=self.tf_buffer, node=self, spin_thread=True)

+

+ self.sub_tracking = Subscriber(

+ self, TrackedObjects, "/perception/object_recognition/tracking/objects"

+ )

+

+ self.sub_tracking_gt = Subscriber(self, TrackedObjects, "/gt_objects")

+ self.time_sync = TimeSynchronizer([self.sub_tracking, self.sub_tracking_gt], 10)

+ self.time_sync.registerCallback(self.tracking_benchmark_callback)

+

+ self.sub_scene_finished = self.create_subscription(

+ Bool, "segment_finished", self.scene_changed_callback, 1

+ )

+

+ self.context_name = generate_context_name()

+

+ def tracking_benchmark_callback(self, tracking_objects, gt_tracking_objects):

+

+ prediction_proto_objects = metrics_pb2.Objects()

+ gt_proto_objects = metrics_pb2.Objects()

+

+ objects_stamp = tracking_objects.header.stamp

+ waymo_timestamp_as_micro = objects_stamp.sec * 1000000 + objects_stamp.nanosec / 1000

+

+ if tracking_objects.header.frame_id != self.benchmark_frame:

+ tracking_objects = self.transform_tracked_objects(tracking_objects)

+

+ # Convert tracked obejct to Waymo Proto objects

+ for tracked_object in tracking_objects.objects:

+

+ tracked_object_waymo = metrics_pb2.Object()

+ tracked_object_waymo.context_name = self.context_name

+ tracked_object_waymo.frame_timestamp_micros = int(waymo_timestamp_as_micro)

+

+ bbox_waymo = label_pb2.Label.Box()

+ bbox_waymo.center_x = tracked_object.kinematics.pose_with_covariance.pose.position.x

+ bbox_waymo.center_y = tracked_object.kinematics.pose_with_covariance.pose.position.y

+ bbox_waymo.center_z = tracked_object.kinematics.pose_with_covariance.pose.position.z

+ bbox_waymo.length = tracked_object.shape.dimensions.x

+ bbox_waymo.width = tracked_object.shape.dimensions.y

+ bbox_waymo.height = tracked_object.shape.dimensions.z

+

+ roll, pitch, yaw = euler_from_quaternion(

+ tracked_object.kinematics.pose_with_covariance.pose.orientation.x,

+ tracked_object.kinematics.pose_with_covariance.pose.orientation.y,

+ tracked_object.kinematics.pose_with_covariance.pose.orientation.z,

+ tracked_object.kinematics.pose_with_covariance.pose.orientation.w,

+ )

+

+ bbox_waymo.heading = yaw

+ tracked_object_waymo.object.box.CopyFrom(bbox_waymo)

+ tracked_object_waymo.score = 0.5

+ if tracked_object.classification[0].label == ObjectClassification.CAR:

+ tracked_object_waymo.object.type = label_pb2.Label.TYPE_VEHICLE

+ elif tracked_object.classification[0].label == ObjectClassification.PEDESTRIAN:

+ tracked_object_waymo.object.type = label_pb2.Label.TYPE_PEDESTRIAN

+ elif tracked_object.classification[0].label == ObjectClassification.BICYCLE:

+ tracked_object_waymo.object.type = label_pb2.Label.TYPE_CYCLIST

+ else:

+ continue

+

+ object_track_id = "".join(

+ str(tracked_object.object_id.uuid[e])

+ for e in range(0, len(tracked_object.object_id.uuid))

+ )

+ tracked_object_waymo.object.id = str(object_track_id)

+

+ prediction_proto_objects.objects.append(tracked_object_waymo)

+

+ with open(self.prediction_result_path, "ab+") as pred_file:

+ pred_file.write(prediction_proto_objects.SerializeToString())

+

+ if gt_tracking_objects.header.frame_id != self.benchmark_frame:

+ gt_tracking_objects = self.transform_tracked_objects(tracking_objects)

+

+ # Convert ground truth obejct to Waymo Proto objects

+ for gt_object in gt_tracking_objects.objects:

+

+ gt_waymo = metrics_pb2.Object()

+ gt_waymo.context_name = self.context_name

+ gt_waymo.frame_timestamp_micros = int(waymo_timestamp_as_micro)

+

+ bbox_waymo = label_pb2.Label.Box()

+ bbox_waymo.center_x = gt_object.kinematics.pose_with_covariance.pose.position.x

+ bbox_waymo.center_y = gt_object.kinematics.pose_with_covariance.pose.position.y

+ bbox_waymo.center_z = gt_object.kinematics.pose_with_covariance.pose.position.z

+ bbox_waymo.length = gt_object.shape.dimensions.x

+ bbox_waymo.width = gt_object.shape.dimensions.y

+ bbox_waymo.height = gt_object.shape.dimensions.z

+

+ roll, pitch, yaw = euler_from_quaternion(

+ gt_object.kinematics.pose_with_covariance.pose.orientation.x,

+ gt_object.kinematics.pose_with_covariance.pose.orientation.y,

+ gt_object.kinematics.pose_with_covariance.pose.orientation.z,

+ gt_object.kinematics.pose_with_covariance.pose.orientation.w,

+ )

+

+ bbox_waymo.heading = yaw

+ gt_waymo.object.box.CopyFrom(bbox_waymo)

+ gt_waymo.score = 0.5

+ if gt_object.classification[0].label == ObjectClassification.CAR:

+ gt_waymo.object.type = label_pb2.Label.TYPE_VEHICLE

+ elif gt_object.classification[0].label == ObjectClassification.PEDESTRIAN:

+ gt_waymo.object.type = label_pb2.Label.TYPE_PEDESTRIAN

+ elif gt_object.classification[0].label == ObjectClassification.BICYCLE:

+ gt_waymo.object.type = label_pb2.Label.TYPE_CYCLIST

+ else:

+ continue

+

+ object_track_id = "".join(

+ str(gt_object.object_id.uuid[e]) for e in range(0, len(gt_object.object_id.uuid))

+ )

+ gt_waymo.object.id = str(object_track_id)

+

+ gt_proto_objects.objects.append(gt_waymo)

+

+ with open(self.gt_path, "ab+") as gt_file:

+ gt_file.write(gt_proto_objects.SerializeToString())

+

+ def transform_tracked_objects(self, tracked_objects):

+

+ transformed_tracked_objects = TrackedObjects()

+ transformed_tracked_objects.header.stamp = tracked_objects.header.stamp

+ transformed_tracked_objects.header.frame_id = self.benchmark_frame

+

+ try:

+ trans = self.tf_buffer.lookup_transform(

+ self.benchmark_frame,

+ tracked_objects.header.frame_id,

+ tracked_objects.header.stamp,

+ rclpy.duration.Duration(seconds=1),

+ )

+

+ except TransformException as ex:

+ self.get_logger().info("Could not find transform:" + str(ex))

+ return None

+

+ for tracked_object in tracked_objects.objects:

+ tracked_objected_map_pose = PoseStamped()

+ tracked_objected_map_pose.header.stamp = tracked_objects.header.stamp

+ tracked_objected_map_pose.header.frame_id = tracked_objects.header.frame_id

+ tracked_objected_map_pose.pose.position = (

+ tracked_object.kinematics.pose_with_covariance.pose.position

+ )

+ tracked_objected_map_pose.pose.orientation = (

+ tracked_object.kinematics.pose_with_covariance.pose.orientation

+ )

+

+ object_transformed = do_transform_pose_stamped(tracked_objected_map_pose, trans)

+

+ transformed_tracked_object = tracked_object

+ transformed_tracked_object.kinematics.pose_with_covariance.pose.orientation = (

+ object_transformed.pose.orientation

+ )

+ transformed_tracked_object.kinematics.pose_with_covariance.pose.position = (

+ object_transformed.pose.position

+ )

+

+ transformed_tracked_objects.objects.append(transformed_tracked_object)

+

+ return transformed_tracked_objects

+

+ def scene_changed_callback(self, read_dataset):

+ self.context_name = generate_context_name()

+ self.tf_buffer.clear()

+ self.tf_listener = TransformListener(buffer=self.tf_buffer, node=self, spin_thread=True)

+

+

+def main(args=None):

+ rclpy.init(args=args)

+ benchmark_node = PerceptionBenchmark()

+ rclpy.spin(benchmark_node)

+ rclpy.shutdown()

diff --git a/perception_benchmark_tool/benchmark_tools/__init__.py b/perception_benchmark_tool/benchmark_tools/__init__.py

new file mode 100644

index 0000000..c40d127

--- /dev/null

+++ b/perception_benchmark_tool/benchmark_tools/__init__.py

@@ -0,0 +1,2 @@

+from .math_utils import *

+from .ros_utils import *

\ No newline at end of file

diff --git a/perception_benchmark_tool/benchmark_tools/datasets/__init__.py b/perception_benchmark_tool/benchmark_tools/datasets/__init__.py

new file mode 100644

index 0000000..21fbd49

--- /dev/null

+++ b/perception_benchmark_tool/benchmark_tools/datasets/__init__.py

@@ -0,0 +1 @@

+from .waymo_dataset import *

\ No newline at end of file

diff --git a/perception_benchmark_tool/benchmark_tools/datasets/waymo_dataset/__init__.py b/perception_benchmark_tool/benchmark_tools/datasets/waymo_dataset/__init__.py

new file mode 100644

index 0000000..86b2683

--- /dev/null

+++ b/perception_benchmark_tool/benchmark_tools/datasets/waymo_dataset/__init__.py

@@ -0,0 +1,6 @@

+from .waymo_dataset import WaymoDataset

+from .waymo_decode_data import *

+

+__all__ = [

+ "WaymoDataset",

+]

diff --git a/perception_benchmark_tool/benchmark_tools/datasets/waymo_dataset/waymo_dataset.py b/perception_benchmark_tool/benchmark_tools/datasets/waymo_dataset/waymo_dataset.py

new file mode 100644

index 0000000..d378c23

--- /dev/null

+++ b/perception_benchmark_tool/benchmark_tools/datasets/waymo_dataset/waymo_dataset.py

@@ -0,0 +1,55 @@

+# Copyright 2018 Autoware Foundation. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+import os

+

+from perception_benchmark_tool.benchmark_tools.datasets.waymo_dataset.waymo_decode_data import (

+ extract_dataset_from_tfrecord,

+)

+

+

+class WaymoDataset:

+ def __init__(self, segment_path):

+

+ self.lidars_tf = {}

+ self.cameras_tf = {}

+

+ self.segment_path = os.path.join(segment_path)

+

+ (

+ self.dataset_scene_by_scene,

+ self.lidars_tf,

+ self.cameras_tf,

+ ) = extract_dataset_from_tfrecord(self.segment_path)

+

+ self.frame_counter = 0

+

+ def get_lidars_static_tf(self):

+ return self.lidars_tf

+

+ def get_cameras_static_tf(self):

+ return self.cameras_tf

+

+ def get_scene_from_dataset(self):

+ self.frame_counter += 1

+ return self.dataset_scene_by_scene[self.frame_counter - 1]

+

+ def is_finished(self):

+ if self.frame_counter < len(self.dataset_scene_by_scene):

+ return False

+ else:

+ return True

+

+ def get_all_dataset(self):

+ return self.dataset_scene_by_scene

diff --git a/perception_benchmark_tool/benchmark_tools/datasets/waymo_dataset/waymo_decode_data.py b/perception_benchmark_tool/benchmark_tools/datasets/waymo_dataset/waymo_decode_data.py

new file mode 100644

index 0000000..4fea813

--- /dev/null

+++ b/perception_benchmark_tool/benchmark_tools/datasets/waymo_dataset/waymo_decode_data.py

@@ -0,0 +1,260 @@

+# Copyright 2018 Autoware Foundation. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+import numpy as np

+import tensorflow as tf

+from waymo_open_dataset import dataset_pb2 as open_dataset

+from waymo_open_dataset.utils import range_image_utils

+from waymo_open_dataset.utils import transform_utils

+

+

+def decode_camera_calibration(frame):

+ camera_calibrations = []

+

+ for camera_calibration in frame.context.camera_calibrations:

+ camera_name = open_dataset.CameraName.Name.Name(camera_calibration.name)

+ extrinsic = np.array(camera_calibration.extrinsic.transform).reshape(4, 4)

+ cam_intrinsic = np.array([[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]])

+ cam_intrinsic[0, 0] = camera_calibration.intrinsic[0]

+ cam_intrinsic[1, 1] = camera_calibration.intrinsic[1]

+ cam_intrinsic[0, 2] = camera_calibration.intrinsic[2]

+ cam_intrinsic[1, 2] = camera_calibration.intrinsic[3]

+ cam_intrinsic[2, 2] = 1

+ width = camera_calibration.width

+ height = camera_calibration.height

+

+ # Swap the axes around

+ axes_transformation = np.array([[0, -1, 0, 0], [0, 0, -1, 0], [1, 0, 0, 0], [0, 0, 0, 1]])

+

+ vehicle_to_image = np.matmul(

+ cam_intrinsic, np.matmul(axes_transformation, np.linalg.inv(extrinsic))

+ )

+

+ camera_info = {

+ "cam_to_vehicle": extrinsic,

+ "cam_intrinsic": cam_intrinsic,

+ "width": width,

+ "height": height,

+ "vehicle_to_image": vehicle_to_image,

+ }

+

+ camera_calibration = [camera_name, camera_info]

+ camera_calibrations.append(camera_calibration)

+

+ return camera_calibrations

+

+

+def get_decoded_point_cloud(frame):

+ range_images = {}

+ camera_projections = {}

+ range_image_top_pose = None

+

+ for laser in frame.lasers:

+

+ if len(laser.ri_return1.range_image_compressed) > 0:

+ range_image_str_tensor = tf.io.decode_compressed(

+ laser.ri_return1.range_image_compressed, "ZLIB"

+ )

+ ri = open_dataset.MatrixFloat()

+ ri.ParseFromString(bytearray(range_image_str_tensor.numpy()))

+ range_images[laser.name] = [ri]

+

+ if laser.name == open_dataset.LaserName.TOP:

+ range_image_top_pose_str_tensor = tf.io.decode_compressed(

+ laser.ri_return1.range_image_pose_compressed, "ZLIB"

+ )

+ range_image_top_pose = open_dataset.MatrixFloat()

+ range_image_top_pose.ParseFromString(

+ bytearray(range_image_top_pose_str_tensor.numpy())

+ )

+

+ camera_projection_str_tensor = tf.io.decode_compressed(

+ laser.ri_return1.camera_projection_compressed, "ZLIB"

+ )

+ cp = open_dataset.MatrixInt32()

+ cp.ParseFromString(bytearray(camera_projection_str_tensor.numpy()))

+ camera_projections[laser.name] = [cp]

+

+ if len(laser.ri_return2.range_image_compressed) > 0:

+ range_image_str_tensor = tf.io.decode_compressed(

+ laser.ri_return2.range_image_compressed, "ZLIB"

+ )

+ ri = open_dataset.MatrixFloat()

+ ri.ParseFromString(bytearray(range_image_str_tensor.numpy()))

+ range_images[laser.name].append(ri)

+

+ camera_projection_str_tensor = tf.io.decode_compressed(

+ laser.ri_return2.camera_projection_compressed, "ZLIB"

+ )

+ cp = open_dataset.MatrixInt32()

+ cp.ParseFromString(bytearray(camera_projection_str_tensor.numpy()))

+ camera_projections[laser.name].append(cp)

+

+ calibrations = sorted(frame.context.laser_calibrations, key=lambda c: c.name)

+ points = []

+

+ frame_pose = tf.convert_to_tensor(np.reshape(np.array(frame.pose.transform), [4, 4]))

+ # [H, W, 6]

+ range_image_top_pose_tensor = tf.reshape(

+ tf.convert_to_tensor(range_image_top_pose.data), range_image_top_pose.shape.dims

+ )

+ # [H, W, 3, 3]

+ range_image_top_pose_tensor_rotation = transform_utils.get_rotation_matrix(

+ range_image_top_pose_tensor[..., 0],

+ range_image_top_pose_tensor[..., 1],

+ range_image_top_pose_tensor[..., 2],

+ )

+ range_image_top_pose_tensor_translation = range_image_top_pose_tensor[..., 3:]

+ range_image_top_pose_tensor = transform_utils.get_transform(

+ range_image_top_pose_tensor_rotation, range_image_top_pose_tensor_translation

+ )

+ for c in calibrations:

+ range_image = range_images[c.name][0]

+

+ if len(c.beam_inclinations) == 0:

+ beam_inclinations = range_image_utils.compute_inclination(

+ tf.constant([c.beam_inclination_min, c.beam_inclination_max]),

+ height=range_image.shape.dims[0],

+ )

+ else:

+ beam_inclinations = tf.constant(c.beam_inclinations)

+

+ beam_inclinations = tf.reverse(beam_inclinations, axis=[-1])

+ extrinsic = np.reshape(np.array(c.extrinsic.transform), [4, 4])

+

+ range_image_tensor = tf.reshape(

+ tf.convert_to_tensor(range_image.data), range_image.shape.dims

+ )

+ pixel_pose_local = None

+ frame_pose_local = None

+

+ if c.name == open_dataset.LaserName.TOP:

+ pixel_pose_local = range_image_top_pose_tensor

+ pixel_pose_local = tf.expand_dims(pixel_pose_local, axis=0)

+ frame_pose_local = tf.expand_dims(frame_pose, axis=0)

+ range_image_mask = range_image_tensor[..., 0] > 0

+ range_image_cartesian = range_image_utils.extract_point_cloud_from_range_image(

+ tf.expand_dims(range_image_tensor[..., 0], axis=0),

+ tf.expand_dims(extrinsic, axis=0),

+ tf.expand_dims(tf.convert_to_tensor(beam_inclinations), axis=0),

+ pixel_pose=pixel_pose_local,

+ frame_pose=frame_pose_local,

+ )

+

+ range_image_cartesian = tf.squeeze(range_image_cartesian, axis=0)

+ points_tensor = tf.gather_nd(range_image_cartesian, tf.where(range_image_mask))

+

+ laser = [open_dataset.LaserName.Name.Name(c.name), points_tensor.numpy()]

+ points.append(laser)

+

+ return points

+

+

+def decode_static_tf(frame):

+ lidars_transforms = {}

+ cameras_transforms = {}

+

+ for laser_calibration in frame.context.laser_calibrations:

+ laser_name = open_dataset.LaserName.Name.Name(laser_calibration.name)

+ extrinsic = np.array(laser_calibration.extrinsic.transform).reshape((4, 4))

+ lidars_transforms[f"{laser_name}_LASER_EXTRINSIC"] = extrinsic

+ for camera_calibration in frame.context.camera_calibrations:

+ camera_name = open_dataset.CameraName.Name.Name(camera_calibration.name)

+ extrinsic = (np.array(camera_calibration.extrinsic.transform)).reshape((4, 4))

+ cameras_transforms[f"{camera_name}_CAM_EXTRINSIC"] = extrinsic

+

+ return lidars_transforms, cameras_transforms

+

+

+def decode_vehicle_pose(frame, frame_id, trans_t_zero_inverse):

+ mat_trans_frame = np.array(

+ [

+ (

+ frame.pose.transform[0],

+ frame.pose.transform[1],

+ frame.pose.transform[2],

+ frame.pose.transform[3],

+ ),

+ (

+ frame.pose.transform[4],

+ frame.pose.transform[5],

+ frame.pose.transform[6],

+ frame.pose.transform[7],

+ ),

+ (

+ frame.pose.transform[8],

+ frame.pose.transform[9],

+ frame.pose.transform[10],

+ frame.pose.transform[11],

+ ),

+ (0, 0, 0, 1),

+ ]

+ )

+ # Define T inverse of odom:

+ if frame_id == 0:

+ trans_t_zero_inverse = np.linalg.inv(mat_trans_frame)

+

+ mat_trans_frame = np.dot(trans_t_zero_inverse, mat_trans_frame)

+ return mat_trans_frame, trans_t_zero_inverse

+

+

+def extract_dataset_from_tfrecord(path):

+ gpus = tf.config.list_physical_devices("GPU")

+ if gpus:

+ try:

+ # Currently, memory growth needs to be the same across GPUs

+ for gpu in gpus:

+ tf.config.experimental.set_memory_growth(gpu, True)

+ logical_gpus = tf.config.list_logical_devices("GPU")

+ print(len(gpus), "Physical GPUs,", len(logical_gpus), "Logical GPUs")

+ except RuntimeError as e:

+ # Memory growth must be set before GPUs have been initialized

+ print(e)

+

+ dataset = tf.data.TFRecordDataset(path, compression_type="")

+ extracted_dataset = []

+ trans_t_zero_inverse = np.array([0])

+

+ lidars_transforms = {}

+ cameras_transforms = {}

+

+ for frame_id, data in enumerate(dataset):

+ data_dict = {}

+ frame = open_dataset.Frame()

+ frame.ParseFromString(bytearray(data.numpy()))

+

+ if frame_id == 1:

+ lidars_transforms, cameras_transforms = decode_static_tf(frame)

+

+ for image in frame.images:

+ cam_name_str = open_dataset.CameraName.Name.Name(image.name)

+ data_dict[f"{cam_name_str}_IMAGE"] = tf.io.decode_jpeg(image.image).numpy()

+

+ for laser_name, point in get_decoded_point_cloud(frame):

+ data_dict[f"{laser_name}_LASER"] = point

+

+ for camera_name, camera_calibration in decode_camera_calibration(frame):

+ data_dict[f"{camera_name}_CAM_INFO"] = camera_calibration

+

+ data_dict["VEHICLE_POSE"], trans_t_zero_inverse = decode_vehicle_pose(

+ frame, frame_id, trans_t_zero_inverse

+ )

+

+ data_dict["TIMESTAMP_MICRO"] = frame.timestamp_micros

+ data_dict["FRAME_CONTEXT_NAME"] = frame.context.name

+ data_dict["GT_OBJECTS"] = frame.laser_labels

+

+ extracted_dataset.append(data_dict)

+

+ return extracted_dataset, lidars_transforms, cameras_transforms

diff --git a/perception_benchmark_tool/benchmark_tools/math_utils.py b/perception_benchmark_tool/benchmark_tools/math_utils.py

new file mode 100644

index 0000000..6556dba

--- /dev/null

+++ b/perception_benchmark_tool/benchmark_tools/math_utils.py

@@ -0,0 +1,156 @@

+# Copyright 2018 Autoware Foundation. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+import math

+from typing import Iterable

+from typing import Optional

+from typing import Tuple

+

+from geometry_msgs.msg import TransformStamped

+import numpy as np

+

+

+def is_rotation_matrix(R):

+ r_transpose = np.transpose(R)

+ should_be_identity = np.dot(r_transpose, R)

+ identity = np.identity(3, dtype=R.dtype)

+ n = np.linalg.norm(identity - should_be_identity)

+ return n < 1e-6

+

+

+def rotation_matrix_to_euler_angles(R):

+ assert is_rotation_matrix(R)

+

+ sy = math.sqrt(R[0, 0] * R[0, 0] + R[1, 0] * R[1, 0])

+

+ singular = sy < 1e-6

+

+ if not singular:

+ x = math.atan2(R[2, 1], R[2, 2])

+ y = math.atan2(-R[2, 0], sy)

+ z = math.atan2(R[1, 0], R[0, 0])

+ else:

+ x = math.atan2(-R[1, 2], R[1, 1])

+ y = math.atan2(-R[2, 0], sy)

+ z = 0

+

+ return np.array([x, y, z])

+

+

+def euler_from_quaternion(x, y, z, w):

+ # Convert a quaternion into euler angles (roll, pitch, yaw),

+ # roll is rotation around x in radians (counterclockwise),

+ # pitch is rotation around y in radians (counterclockwise),

+ # yaw is rotation around z in radians (counterclockwise)

+

+ t0 = +2.0 * (w * x + y * z)

+ t1 = +1.0 - 2.0 * (x * x + y * y)

+ roll_x = math.atan2(t0, t1)

+

+ t2 = +2.0 * (w * y - z * x)

+ t2 = +1.0 if t2 > +1.0 else t2

+ t2 = -1.0 if t2 < -1.0 else t2

+ pitch_y = math.asin(t2)

+

+ t3 = +2.0 * (w * z + x * y)

+ t4 = +1.0 - 2.0 * (y * y + z * z)

+ yaw_z = math.atan2(t3, t4)

+

+ return roll_x, pitch_y, yaw_z # in radians

+

+

+def cart_to_homo(mat):

+ ret = np.eye(4)

+ if mat.shape == (3, 3):

+ ret[:3, :3] = mat

+ elif mat.shape == (3, 4):

+ ret[:3, :] = mat

+ else:

+ raise ValueError(mat.shape)

+ return ret

+

+

+def build_affine(

+ rotation: Optional[Iterable] = None, translation: Optional[Iterable] = None

+) -> np.ndarray:

+ affine = np.eye(4)

+ if rotation is not None:

+ affine[:3, :3] = get_mat_from_quat(np.asarray(rotation))

+ if translation is not None:

+ affine[:3, 3] = np.asarray(translation)

+ return affine

+

+

+def transform_to_affine(transform: TransformStamped) -> np.ndarray:

+ transform = transform.transform

+ transform_rotation_matrix = [

+ transform.rotation.w,

+ transform.rotation.x,

+ transform.rotation.y,

+ transform.rotation.z,

+ ]

+ transform_translation = [

+ transform.translation.x,

+ transform.translation.y,

+ transform.translation.z,

+ ]

+ return build_affine(transform_rotation_matrix, transform_translation)

+

+

+def get_mat_from_quat(quaternion: np.ndarray) -> np.ndarray:

+ Nq = np.sum(np.square(quaternion))

+ if Nq < np.finfo(np.float64).eps:

+ return np.eye(3)

+

+ XYZ = quaternion[1:] * 2.0 / Nq

+ wXYZ = XYZ * quaternion[0]

+ xXYZ = XYZ * quaternion[1]

+ yYZ = XYZ[1:] * quaternion[2]

+ zZ = XYZ[2] * quaternion[3]

+

+ return np.array(

+ [

+ [1.0 - (yYZ[0] + zZ), xXYZ[1] - wXYZ[2], xXYZ[2] + wXYZ[1]],

+ [xXYZ[1] + wXYZ[2], 1.0 - (xXYZ[0] + zZ), yYZ[1] - wXYZ[0]],

+ [xXYZ[2] - wXYZ[1], yYZ[1] + wXYZ[0], 1.0 - (xXYZ[0] + yYZ[0])],

+ ]

+ )

+

+

+def get_quat_from_mat(rot_mat: np.ndarray) -> np.ndarray:

+ # Decompose rotation matrix

+ Qxx, Qyx, Qzx, Qxy, Qyy, Qzy, Qxz, Qyz, Qzz = rot_mat.flat

+ # Create matrix

+ K = (

+ np.array(

+ [

+ [Qxx - Qyy - Qzz, 0, 0, 0],

+ [Qyx + Qxy, Qyy - Qxx - Qzz, 0, 0],

+ [Qzx + Qxz, Qzy + Qyz, Qzz - Qxx - Qyy, 0],

+ [Qyz - Qzy, Qzx - Qxz, Qxy - Qyx, Qxx + Qyy + Qzz],

+ ]

+ )

+ / 3.0

+ )

+ vals, vecs = np.linalg.eigh(K)

+ # Select largest eigenvector and reorder to w,x,y,z

+ q = vecs[[3, 0, 1, 2], np.argmax(vals)]

+ # Invert quaternion if w is negative (results in positive w)

+ if q[0] < 0:

+ q *= -1

+ return q

+

+

+def decompose_affine(affine: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:

+ return get_quat_from_mat(affine[:3, :3]), affine[:3, 3]

diff --git a/perception_benchmark_tool/benchmark_tools/ros_utils.py b/perception_benchmark_tool/benchmark_tools/ros_utils.py

new file mode 100644

index 0000000..cf3f366

--- /dev/null

+++ b/perception_benchmark_tool/benchmark_tools/ros_utils.py

@@ -0,0 +1,123 @@

+# Copyright 2018 Autoware Foundation. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+from cv_bridge import CvBridge

+from geometry_msgs.msg import Pose

+from geometry_msgs.msg import PoseStamped

+from geometry_msgs.msg import TransformStamped

+import numpy as np

+from perception_benchmark_tool.benchmark_tools.math_utils import build_affine

+from perception_benchmark_tool.benchmark_tools.math_utils import decompose_affine

+from perception_benchmark_tool.benchmark_tools.math_utils import rotation_matrix_to_euler_angles

+from perception_benchmark_tool.benchmark_tools.math_utils import transform_to_affine

+from sensor_msgs.msg import CameraInfo

+from sensor_msgs.msg import PointCloud2

+from sensor_msgs.msg import PointField

+import tf_transformations

+

+

+def create_point_cloud_mgs(frame_id, lidar_frame, ros_time):

+ msg = PointCloud2()

+ msg.header.stamp = ros_time.to_msg()

+ msg.header.frame_id = frame_id

+ msg.height = 1

+ msg.width = len(lidar_frame)

+ msg.point_step = 12

+ msg.row_step = 12 * msg.width

+ msg.is_dense = False

+ msg.fields = [

+ PointField(name="x", offset=0, datatype=PointField.FLOAT32, count=1),

+ PointField(name="y", offset=4, datatype=PointField.FLOAT32, count=1),

+ PointField(name="z", offset=8, datatype=PointField.FLOAT32, count=1),

+ ]

+ msg.is_bigendian = False

+ # We pass `data` directly to we avoid using `data` setter.

+ # Otherwise ROS2 converts data to `array.array` which slows down as it copies memory internally.

+ # Both, `bytearray` and `array.array`, implement Python buffer protocol, so we should not see unpredictable

+ # behavior.

+ # deepcode ignore W0212: Avoid conversion from `bytearray` to `array.array`.

+ msg._data = lidar_frame

+ return msg

+

+

+def create_image_msgs(frame_id, camera_frame, ros_time):

+ cv_bridge = CvBridge()

+ image_mgs = cv_bridge.cv2_to_imgmsg(cvim=camera_frame, encoding="rgb8")

+ image_mgs.header.stamp = ros_time.to_msg()

+ image_mgs.header.frame_id = frame_id

+ return image_mgs

+

+

+def create_camera_info(frame_id, camera_calibration, ros_time):

+ camera_info = CameraInfo()

+ camera_info.header.frame_id = frame_id

+ camera_info.header.stamp = ros_time.to_msg()

+ camera_info.width = camera_calibration["width"]

+ camera_info.height = camera_calibration["height"]

+ camera_info.p = (

+ np.array(camera_calibration["vehicle_to_image"]).astype(float).flatten().tolist()

+ )

+ return camera_info

+

+

+def make_transform_stamped(header_frame_id, child_frame_id, lidar_transform, ros_time):

+ rot_mat = lidar_transform[0:3, 0:3]

+ [rx, ry, rz] = rotation_matrix_to_euler_angles(rot_mat)

+ static_transform_stamped = TransformStamped()

+ static_transform_stamped.header.stamp = ros_time.to_msg()

+ static_transform_stamped.header.frame_id = header_frame_id

+ static_transform_stamped.child_frame_id = child_frame_id

+ static_transform_stamped.transform.translation.x = float(lidar_transform[0, 3])

+ static_transform_stamped.transform.translation.y = float(lidar_transform[1, 3])

+ static_transform_stamped.transform.translation.z = float(lidar_transform[2, 3])

+ quat = tf_transformations.quaternion_from_euler(rx, ry, rz)

+ static_transform_stamped.transform.rotation.x = quat[0]

+ static_transform_stamped.transform.rotation.y = quat[1]

+ static_transform_stamped.transform.rotation.z = quat[2]

+ static_transform_stamped.transform.rotation.w = quat[3]

+

+ return static_transform_stamped

+

+

+def do_transform_pose(pose: Pose, transform: TransformStamped) -> Pose:

+ quaternion, point = decompose_affine(

+ np.matmul(

+ transform_to_affine(transform),

+ build_affine(

+ translation=[pose.position.x, pose.position.y, pose.position.z],

+ rotation=[

+ pose.orientation.w,

+ pose.orientation.x,

+ pose.orientation.y,

+ pose.orientation.z,

+ ],

+ ),

+ )

+ )

+ res = Pose()

+ res.position.x = point[0]

+ res.position.y = point[1]

+ res.position.z = point[2]

+ res.orientation.w = quaternion[0]

+ res.orientation.x = quaternion[1]

+ res.orientation.y = quaternion[2]

+ res.orientation.z = quaternion[3]

+ return res

+

+

+def do_transform_pose_stamped(pose: PoseStamped, transform: TransformStamped) -> PoseStamped:

+ res = PoseStamped()

+ res.pose = do_transform_pose(pose.pose, transform)

+ res.header = transform.header

+ return res

diff --git a/perception_benchmark_tool/waymo_player_node.py b/perception_benchmark_tool/waymo_player_node.py

new file mode 100644

index 0000000..5aea05b

--- /dev/null

+++ b/perception_benchmark_tool/waymo_player_node.py

@@ -0,0 +1,405 @@

+# Copyright 2018 Autoware Foundation. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+from glob import glob

+

+from autoware_auto_perception_msgs.msg import ObjectClassification

+from autoware_auto_perception_msgs.msg import Shape

+from autoware_auto_perception_msgs.msg import TrackedObject

+from autoware_auto_perception_msgs.msg import TrackedObjects

+from geometry_msgs.msg import TransformStamped

+from .benchmark_tools.datasets.waymo_dataset import WaymoDataset

+from .benchmark_tools.math_utils import rotation_matrix_to_euler_angles

+from .benchmark_tools.ros_utils import create_camera_info

+from .benchmark_tools.ros_utils import create_image_msgs

+from .benchmark_tools.ros_utils import create_point_cloud_mgs

+from .benchmark_tools.ros_utils import make_transform_stamped

+import rclpy

+from rclpy.clock import ClockType

+from rclpy.node import Node

+from rclpy.time import Time

+from sensor_msgs.msg import CameraInfo

+from sensor_msgs.msg import Image

+from sensor_msgs.msg import PointCloud2

+from sensor_msgs.msg import PointField

+from std_msgs.msg import Bool

+from std_srvs.srv import Trigger

+from tf2_ros import TransformBroadcaster

+from tf2_ros.static_transform_broadcaster import StaticTransformBroadcaster

+import tf_transformations

+from unique_identifier_msgs.msg import UUID

+from waymo_open_dataset import label_pb2

+from waymo_open_dataset.protos import metrics_pb2

+

+

+def get_tfrecord_paths(path):

+ tf_record_list = glob(path + "/*.tfrecord")

+ if len(tf_record_list) > 0:

+ return tf_record_list

+ else:

+ return None

+

+

+class PlayerNode(Node):

+ def __init__(self):

+ super().__init__("waymo_player_node")

+

+ self.declare_parameter("dataset_path", "")

+ dataset_path = self.get_parameter("dataset_path").get_parameter_value().string_value

+ self.tf_list = get_tfrecord_paths(dataset_path)

+

+ self.declare_parameter("use_camera", False)

+ self.use_camera = self.get_parameter("use_camera").get_parameter_value().bool_value

+

+ self.tf_segment_idx = 0

+

+ self.srv_read_scene_data = self.create_service(

+ Trigger, "read_current_segment", self.read_dataset_segment

+ )

+ self.srv_read_scene_data = self.create_service(

+ Trigger, "send_frame", self.frame_processed_callback

+ )

+ self.pub_segment_finished = self.create_publisher(Bool, "segment_finished", 1)

+

+ self.dataset = None

+ self.current_scene_processed = False

+

+ self.pub_lidar_front = self.create_publisher(PointCloud2, "/point_cloud/front_lidar", 10)

+ self.pub_lidar_rear = self.create_publisher(PointCloud2, "/point_cloud/rear_lidar", 10)

+ self.pub_lidar_side_left = self.create_publisher(

+ PointCloud2, "/point_cloud/side_left_lidar", 10

+ )

+ self.pub_lidar_side_right = self.create_publisher(

+ PointCloud2, "/point_cloud/side_right_lidar", 10

+ )

+ self.pub_lidar_top = self.create_publisher(PointCloud2, "/point_cloud/top_lidar", 10)

+

+ self.pub_gt_objects = self.create_publisher(TrackedObjects, "/gt_objects", 10)

+

+ if self.use_camera:

+ self.pub_camera_front = self.create_publisher(Image, "/front_camera", 10)

+ self.pub_camera_front_left = self.create_publisher(Image, "/front_left_camera", 10)

+ self.pub_camera_front_right = self.create_publisher(Image, "/front_right_camera", 10)

+ self.pub_camera_side_left = self.create_publisher(Image, "/side_left_camera", 10)

+ self.pub_camera_side_right = self.create_publisher(Image, "/side_right_camera", 10)

+

+ self.pub_cam_info_front = self.create_publisher(CameraInfo, "/front_cam_info", 10)

+ self.pub_cam_info_front_left = self.create_publisher(

+ CameraInfo, "/front_left_cam_info", 10

+ )

+ self.pub_cam_info_front_right = self.create_publisher(

+ CameraInfo, "/front_right_cam_info", 10

+ )

+ self.pub_cam_info_side_left = self.create_publisher(

+ CameraInfo, "/side_left_cam_info", 10

+ )

+ self.pub_cam_info_side_right = self.create_publisher(

+ CameraInfo, "/side_right_cam_info", 10

+ )

+

+ self.point_fields = [

+ PointField(name="x", offset=0, datatype=PointField.FLOAT32, count=1),

+ PointField(name="y", offset=4, datatype=PointField.FLOAT32, count=1),

+ PointField(name="z", offset=8, datatype=PointField.FLOAT32, count=1),

+ ]

+

+ self.pose_broadcaster = TransformBroadcaster(self)

+ self.static_tf_publisher = StaticTransformBroadcaster(self)

+

+ self.waymo_evaluation_frame = "base_link"

+

+ self.prediction_proto_objects = metrics_pb2.Objects()

+ self.gt_proto_objects = metrics_pb2.Objects()

+

+ def read_dataset_segment(self, request, response):

+

+ if self.tf_segment_idx >= len(self.tf_list):

+ self.get_logger().info("All Waymo segments in the given path have been processed.")

+ exit()

+

+ self.get_logger().info("Waymo segment decoding from dataset...")

+ self.dataset = WaymoDataset(self.tf_list[self.tf_segment_idx])

+ self.get_logger().info("Waymo segment decoded")

+ self.tf_segment_idx += 1

+ response.success = True

+ response.message = "Segment readed."

+ return response

+

+ def frame_processed_callback(self, request, response):

+

+ if not self.is_dataset_finished():

+ self.publish_scene()

+ response.success = True

+ response.message = "Frame published."

+ return response

+

+ else:

+ self.get_logger().info("Waymo segment finished.")

+ msg = Bool()

+ msg.data = True

+ self.pub_segment_finished.publish(msg)

+

+ response.success = False

+ response.message = "Dataset finished."

+ return response

+

+ # Below part copied

+ def publish_scene(self):

+ self.set_scene_processed(True)

+

+ current_scene = self.dataset.get_scene_from_dataset()

+ scene_time_as_ros_time = Time(

+ nanoseconds=int(current_scene["TIMESTAMP_MICRO"]) * 1000, clock_type=ClockType.ROS_TIME

+ )

+

+ self.publish_static_tf(scene_time_as_ros_time)

+ self.publish_pose(current_scene["VEHICLE_POSE"], scene_time_as_ros_time)

+ self.publish_lidar_data(current_scene, scene_time_as_ros_time)

+ self.publish_gt_objects(current_scene, scene_time_as_ros_time)

+

+ if self.use_camera:

+ self.publish_camera_images(current_scene, scene_time_as_ros_time)

+ self.publish_camera_info(current_scene, scene_time_as_ros_time)

+

+ def is_dataset_finished(self):

+ return self.dataset.is_finished()

+

+ def publish_pose(self, vehicle_pose, ros_time):

+ transform_stamped = TransformStamped()

+ transform_stamped.header.stamp = ros_time.to_msg()

+ transform_stamped.header.frame_id = "map"

+ transform_stamped.child_frame_id = "base_link"

+

+ rot_mat = vehicle_pose[0:3, 0:3]

+ [rx, ry, rz] = rotation_matrix_to_euler_angles(rot_mat)

+

+ transform_stamped.transform.translation.x = float(vehicle_pose[0, 3])

+ transform_stamped.transform.translation.y = float(vehicle_pose[1, 3])

+ transform_stamped.transform.translation.z = float(vehicle_pose[2, 3])

+

+ quat = tf_transformations.quaternion_from_euler(float(rx), float(ry), float(rz))

+

+ transform_stamped.transform.rotation.x = quat[0]

+ transform_stamped.transform.rotation.y = quat[1]

+ transform_stamped.transform.rotation.z = quat[2]

+ transform_stamped.transform.rotation.w = quat[3]

+

+ self.pose_broadcaster.sendTransform(transform_stamped)

+

+ def publish_static_tf(self, ros_time):

+ lidar_transforms = self.dataset.get_lidars_static_tf()

+

+ # Front lidar

+ static_ts_front_lidar = make_transform_stamped(

+ "base_link", "front_laser", lidar_transforms["FRONT_LASER_EXTRINSIC"], ros_time

+ )

+ # Rear lidar

+ static_ts_rear_lidar = make_transform_stamped(

+ "base_link", "rear_laser", lidar_transforms["REAR_LASER_EXTRINSIC"], ros_time

+ )

+ # Side left lidar

+ static_ts_side_left_lidar = make_transform_stamped(

+ "base_link", "side_left_laser", lidar_transforms["SIDE_LEFT_LASER_EXTRINSIC"], ros_time

+ )

+ # Side right lidar

+ static_ts_side_right_lidar = make_transform_stamped(

+ "base_link",

+ "side_right_laser",

+ lidar_transforms["SIDE_RIGHT_LASER_EXTRINSIC"],

+ ros_time,

+ )

+ # Top lidar

+ static_ts_top_lidar = make_transform_stamped(

+ "base_link", "top_laser", lidar_transforms["TOP_LASER_EXTRINSIC"], ros_time

+ )

+

+ camera_transforms = self.dataset.get_cameras_static_tf()

+ # Front camera

+ static_ts_front_camera = make_transform_stamped(

+ "base_link", "front_camera", camera_transforms["FRONT_CAM_EXTRINSIC"], ros_time

+ )

+ # Front left camera

+ static_ts_front_left_camera = make_transform_stamped(

+ "base_link",

+ "front_left_camera",

+ camera_transforms["FRONT_LEFT_CAM_EXTRINSIC"],

+ ros_time,

+ )

+ # Front right camera

+ static_ts_front_right_camera = make_transform_stamped(

+ "base_link",

+ "front_right_camera",

+ camera_transforms["FRONT_RIGHT_CAM_EXTRINSIC"],

+ ros_time,

+ )

+ # Side left camera

+ static_ts_side_left_camera = make_transform_stamped(

+ "base_link", "side_left_camera", camera_transforms["SIDE_LEFT_CAM_EXTRINSIC"], ros_time

+ )

+ # Side right camera

+ static_ts_side_right_camera = make_transform_stamped(

+ "base_link",

+ "side_right_camera",

+ camera_transforms["SIDE_RIGHT_CAM_EXTRINSIC"],

+ ros_time,

+ )

+

+ self.static_tf_publisher.sendTransform(

+ [

+ static_ts_front_lidar,

+ static_ts_rear_lidar,

+ static_ts_side_left_lidar,

+ static_ts_side_right_lidar,

+ static_ts_top_lidar,

+ static_ts_front_camera,

+ static_ts_front_left_camera,

+ static_ts_front_right_camera,

+ static_ts_side_left_camera,

+ static_ts_side_right_camera,

+ ]

+ )

+

+ def publish_camera_images(self, current_scene, ros_time_now):

+ self.pub_camera_front.publish(

+ create_image_msgs("front_camera", current_scene["FRONT_IMAGE"], ros_time_now)

+ )

+ self.pub_camera_front_left.publish(

+ create_image_msgs("front_left_camera", current_scene["FRONT_LEFT_IMAGE"], ros_time_now)

+ )

+ self.pub_camera_front_right.publish(

+ create_image_msgs(

+ "front_right_camera", current_scene["FRONT_RIGHT_IMAGE"], ros_time_now

+ )

+ )

+ self.pub_camera_side_left.publish(

+ create_image_msgs("side_left_camera", current_scene["SIDE_LEFT_IMAGE"], ros_time_now)

+ )

+ self.pub_camera_side_right.publish(

+ create_image_msgs("side_right_camera", current_scene["SIDE_RIGHT_IMAGE"], ros_time_now)

+ )

+

+ def publish_camera_info(self, current_scene, ros_time_now):

+ self.pub_cam_info_front.publish(

+ create_camera_info("base_link", current_scene["FRONT_CAM_INFO"], ros_time_now)

+ )

+ self.pub_cam_info_front_left.publish(

+ create_camera_info("base_link", current_scene["FRONT_LEFT_CAM_INFO"], ros_time_now)

+ )

+ self.pub_cam_info_front_right.publish(

+ create_camera_info("base_link", current_scene["FRONT_RIGHT_CAM_INFO"], ros_time_now)

+ )

+ self.pub_cam_info_side_left.publish(

+ create_camera_info("base_link", current_scene["SIDE_LEFT_CAM_INFO"], ros_time_now)

+ )

+ self.pub_cam_info_side_right.publish(

+ create_camera_info("base_link", current_scene["SIDE_RIGHT_CAM_INFO"], ros_time_now)

+ )

+

+ def publish_lidar_data(self, current_scene, ros_time_now):

+ self.pub_lidar_top.publish(

+ create_point_cloud_mgs("base_link", current_scene["TOP_LASER"], ros_time_now)

+ )

+ self.pub_lidar_front.publish(

+ create_point_cloud_mgs("base_link", current_scene["FRONT_LASER"], ros_time_now)

+ )

+ self.pub_lidar_side_left.publish(

+ create_point_cloud_mgs("base_link", current_scene["SIDE_LEFT_LASER"], ros_time_now)

+ )

+ self.pub_lidar_side_right.publish(

+ create_point_cloud_mgs("base_link", current_scene["SIDE_RIGHT_LASER"], ros_time_now)

+ )

+ self.pub_lidar_rear.publish(

+ create_point_cloud_mgs("base_link", current_scene["REAR_LASER"], ros_time_now)

+ )

+

+ def publish_gt_objects(self, current_scene, ros_time):

+

+ ground_truth_objects = TrackedObjects()

+ ground_truth_objects.header.frame_id = "base_link"

+ ground_truth_objects.header.stamp = ros_time.to_msg()

+

+ for gt_object in current_scene["GT_OBJECTS"]:

+

+ if gt_object.num_lidar_points_in_box <= 0:

+ continue

+

+ gt_detected_object = TrackedObject()

+ object_classification = ObjectClassification()

+ gt_detected_object.existence_probability = 1.0

+

+ if gt_object.type == label_pb2.Label.TYPE_VEHICLE:

+ object_classification.label = ObjectClassification.CAR

+ gt_detected_object.shape.type = Shape.BOUNDING_BOX

+ elif gt_object.type == label_pb2.Label.TYPE_PEDESTRIAN:

+ object_classification.label = ObjectClassification.PEDESTRIAN

+ gt_detected_object.shape.type = Shape.CYLINDER

+ elif gt_object.type == label_pb2.Label.TYPE_CYCLIST:

+ object_classification.label = ObjectClassification.BICYCLE

+ gt_detected_object.shape.type = Shape.BOUNDING_BOX

+ else:

+ continue

+

+ gt_detected_object.classification.append(object_classification)

+

+ # Pedestrian bounding boxes x and y fixed in Autoware

+ if gt_object.type == label_pb2.Label.TYPE_PEDESTRIAN:

+ gt_detected_object.shape.dimensions.x = 1.0

+ gt_detected_object.shape.dimensions.y = 1.0

+ gt_detected_object.shape.dimensions.z = gt_object.box.height

+ else:

+ gt_detected_object.shape.dimensions.x = gt_object.box.length

+ gt_detected_object.shape.dimensions.y = gt_object.box.width

+ gt_detected_object.shape.dimensions.z = gt_object.box.height

+

+ gt_detected_object.kinematics.pose_with_covariance.pose.position.x = (

+ gt_object.box.center_x

+ )

+ gt_detected_object.kinematics.pose_with_covariance.pose.position.y = (

+ gt_object.box.center_y

+ )

+ gt_detected_object.kinematics.pose_with_covariance.pose.position.z = (

+ gt_object.box.center_z

+ )

+

+ q = tf_transformations.quaternion_from_euler(0, 0, gt_object.box.heading)

+

+ gt_detected_object.kinematics.pose_with_covariance.pose.orientation.x = q[0]

+ gt_detected_object.kinematics.pose_with_covariance.pose.orientation.y = q[1]

+ gt_detected_object.kinematics.pose_with_covariance.pose.orientation.z = q[2]

+ gt_detected_object.kinematics.pose_with_covariance.pose.orientation.w = q[3]

+

+ str_1_encoded = gt_object.id.encode(encoding="UTF-8")

+ uuid_msg = UUID()

+

+ for i in range(16):

+ uuid_msg.uuid[i] = str_1_encoded[i]

+

+ gt_detected_object.object_id = uuid_msg

+ ground_truth_objects.objects.append(gt_detected_object)

+

+ self.pub_gt_objects.publish(ground_truth_objects)

+

+ def scene_processed(self):

+ return self.current_scene_processed

+

+ def set_scene_processed(self, value):

+ self.current_scene_processed = value

+

+

+def main(args=None):

+ rclpy.init(args=args)

+ perception_benchmark = PlayerNode()

+ rclpy.spin(perception_benchmark)

+ perception_benchmark.destroy_node()

+ rclpy.shutdown()

diff --git a/resource/perception_benchmark_tool b/resource/perception_benchmark_tool

new file mode 100644

index 0000000..e69de29

diff --git a/rviz/waymo.rviz b/rviz/waymo.rviz

new file mode 100644

index 0000000..d6f8fe1

--- /dev/null

+++ b/rviz/waymo.rviz

@@ -0,0 +1,364 @@

+Panels:

+ - Class: rviz_common/Displays

+ Help Height: 0

+ Name: Displays

+ Property Tree Widget:

+ Expanded:

+ - /Global Options1

+ Splitter Ratio: 0.5688889026641846

+ Tree Height: 482

+ - Class: rviz_common/Selection

+ Name: Selection

+ - Class: rviz_common/Tool Properties

+ Expanded: ~

+ Name: Tool Properties

+ Splitter Ratio: 0.5886790156364441

+ - Class: rviz_common/Views

+ Expanded:

+ - /Current View1

+ Name: Views

+ Splitter Ratio: 0.5

+ - Class: tier4_localization_rviz_plugin/InitialPoseButtonPanel

+ Name: InitialPoseButtonPanel

+ - Class: rviz_common/Time

+ Experimental: false

+ Name: Time

+ SyncMode: 0

+ SyncSource: ""

+Visualization Manager:

+ Class: ""

+ Displays:

+ - Class: rviz_default_plugins/TF

+ Enabled: true

+ Frame Timeout: 15

+ Frames:

+ All Enabled: true

+ Marker Scale: 1

+ Name: TF

+ Show Arrows: true

+ Show Axes: true

+ Show Names: true

+ Tree:

+ {}

+ Update Interval: 0

+ Value: true

+ - Alpha: 0.5

+ Cell Size: 1

+ Class: rviz_default_plugins/Grid

+ Color: 160; 160; 164

+ Enabled: false

+ Line Style:

+ Line Width: 0.029999999329447746

+ Value: Lines

+ Name: Grid

+ Normal Cell Count: 0

+ Offset:

+ X: 0

+ Y: 0

+ Z: 0

+ Plane: XY

+ Plane Cell Count: 10

+ Reference Frame:

+ Value: false

+ - Alpha: 0.4000000059604645

+ Autocompute Intensity Bounds: true

+ Autocompute Value Bounds:

+ Max Value: 5

+ Min Value: -1

+ Value: false

+ Axis: Z

+ Channel Name: intensity

+ Class: rviz_default_plugins/PointCloud2

+ Color: 255; 255; 255

+ Color Transformer: AxisColor

+ Decay Time: 0

+ Enabled: false

+ Invert Rainbow: false

+ Max Color: 255; 255; 255

+ Max Intensity: 4096

+ Min Color: 0; 0; 0

+ Min Intensity: 0

+ Name: ConcatenatePointCloud

+ Position Transformer: XYZ

+ Selectable: false

+ Size (Pixels): 1

+ Size (m): 0.019999999552965164

+ Style: Points

+ Topic:

+ Depth: 5

+ Durability Policy: Volatile

+ Filter size: 10

+ History Policy: Keep Last

+ Reliability Policy: Best Effort

+ Value: /sensing/lidar/concatenated/pointcloud

+ Use Fixed Frame: false

+ Use rainbow: true

+ Value: false

+ - Alpha: 0.9990000128746033

+ Autocompute Intensity Bounds: true

+ Autocompute Value Bounds:

+ Max Value: 15

+ Min Value: -2

+ Value: false

+ Axis: Z

+ Channel Name: z

+ Class: rviz_default_plugins/PointCloud2

+ Color: 200; 200; 200

+ Color Transformer: FlatColor

+ Decay Time: 0

+ Enabled: true

+ Invert Rainbow: false

+ Max Color: 255; 255; 255

+ Max Intensity: 15

+ Min Color: 0; 0; 0

+ Min Intensity: -5

+ Name: NoGroundPointCloud

+ Position Transformer: XYZ

+ Selectable: false

+ Size (Pixels): 3

+ Size (m): 0.019999999552965164

+ Style: Points

+ Topic:

+ Depth: 5

+ Durability Policy: Volatile

+ Filter size: 10

+ History Policy: Keep Last

+ Reliability Policy: Best Effort

+ Value: /perception/obstacle_segmentation/pointcloud

+ Use Fixed Frame: true

+ Use rainbow: true

+ Value: true

+ - BUS:

+ Alpha: 0.9990000128746033

+ Color: 30; 144; 255

+ CAR:

+ Alpha: 0.9990000128746033

+ Color: 30; 144; 255

+ CYCLIST:

+ Alpha: 0.9990000128746033

+ Color: 119; 11; 32

+ Class: autoware_auto_perception_rviz_plugin/TrackedObjects

+ Display 3d polygon: true

+ Display Label: true

+ Display PoseWithCovariance: true

+ Display Predicted Path Confidence: true

+ Display Predicted Paths: true

+ Display Twist: true

+ Display UUID: true

+ Display Velocity: true

+ Enabled: true

+ Line Width: 0.029999999329447746

+ MOTORCYCLE:

+ Alpha: 0.9990000128746033

+ Color: 119; 11; 32

+ Name: TrackedObjectsPrediction

+ Namespaces:

+ {}

+ PEDESTRIAN:

+ Alpha: 0.9990000128746033

+ Color: 255; 192; 203

+ TRAILER:

+ Alpha: 0.9990000128746033

+ Color: 30; 144; 255

+ TRUCK:

+ Alpha: 0.9990000128746033

+ Color: 30; 144; 255

+ Topic:

+ Depth: 5

+ Durability Policy: Volatile

+ History Policy: Keep Last

+ Reliability Policy: Reliable

+ Value: /perception/object_recognition/tracking/objects

+ UNKNOWN:

+ Alpha: 0.9990000128746033

+ Color: 255; 255; 255

+ Value: true

+ - BUS:

+ Alpha: 0.9990000128746033

+ Color: 255; 0; 0

+ CAR:

+ Alpha: 0.9990000128746033

+ Color: 255; 0; 0

+ CYCLIST:

+ Alpha: 0.9990000128746033

+ Color: 255; 255; 0

+ Class: autoware_auto_perception_rviz_plugin/TrackedObjects

+ Display 3d polygon: true

+ Display Label: true

+ Display PoseWithCovariance: true

+ Display Predicted Path Confidence: true

+ Display Predicted Paths: true

+ Display Twist: true

+ Display UUID: true

+ Display Velocity: true

+ Enabled: true

+ Line Width: 0.029999999329447746

+ MOTORCYCLE:

+ Alpha: 0.9990000128746033

+ Color: 255; 255; 0

+ Name: TrackedObjectsGroundTruth

+ Namespaces:

+ {}

+ PEDESTRIAN:

+ Alpha: 0.9990000128746033

+ Color: 0; 255; 0

+ TRAILER: