diff --git a/ros/src/computing/perception/detection/packages/cv_tracker/launch/yolo2.launch b/ros/src/computing/perception/detection/packages/cv_tracker/launch/yolo2.launch

index 40ae85e5471..1480bf5c15f 100644

--- a/ros/src/computing/perception/detection/packages/cv_tracker/launch/yolo2.launch

+++ b/ros/src/computing/perception/detection/packages/cv_tracker/launch/yolo2.launch

@@ -1,26 +1,21 @@

-

-

-

-

-

-

-

-

-

-

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

-

-

-

-

-

-

-

-

-

-

-

-

-

diff --git a/ros/src/computing/perception/detection/packages/cv_tracker/nodes/yolo2/src/yolo2_node.cpp b/ros/src/computing/perception/detection/packages/cv_tracker/nodes/yolo2/src/yolo2_node.cpp

index 11f919c34ce..c37edfe026f 100644

--- a/ros/src/computing/perception/detection/packages/cv_tracker/nodes/yolo2/src/yolo2_node.cpp

+++ b/ros/src/computing/perception/detection/packages/cv_tracker/nodes/yolo2/src/yolo2_node.cpp

@@ -191,7 +191,7 @@ class Yolo2DetectorNode

void image_callback(const sensor_msgs::ImageConstPtr& in_image_message)

{

std::vector< RectClassScore > detections;

- //darknet_image = yolo_detector_.convert_image(in_image_message);

+ //darknet_image_ = yolo_detector_.convert_image(in_image_message);

darknet_image = convert_ipl_to_image(in_image_message);

diff --git a/ros/src/computing/perception/detection/packages/yolo3_detector/CMakeLists.txt b/ros/src/computing/perception/detection/packages/yolo3_detector/CMakeLists.txt

new file mode 100644

index 00000000000..58e8594b18a

--- /dev/null

+++ b/ros/src/computing/perception/detection/packages/yolo3_detector/CMakeLists.txt

@@ -0,0 +1,154 @@

+cmake_minimum_required(VERSION 2.8.12)

+project(yolo3_detector)

+

+find_package(catkin REQUIRED COMPONENTS

+ cv_bridge

+ image_transport

+ roscpp

+ sensor_msgs

+ std_msgs

+ autoware_msgs

+ )

+

+find_package(CUDA)

+find_package(OpenCV REQUIRED)

+find_package(OpenMP)

+

+catkin_package(CATKIN_DEPENDS

+ cv_bridge

+ image_transport

+ roscpp

+ sensor_msgs

+ std_msgs

+ autoware_msgs

+ )

+

+set(CMAKE_CXX_FLAGS "-std=c++11 -O3 -g -Wall ${CMAKE_CXX_FLAGS}")

+

+IF (CUDA_FOUND)

+ list(APPEND CUDA_NVCC_FLAGS "--std=c++11 -I${PROJECT_SOURCE_DIR}/darknet/src -I${PROJECT_SOURCE_DIR}/src -DGPU")

+ SET(CUDA_PROPAGATE_HOST_FLAGS OFF)

+

+ #darknet

+ cuda_add_library(yolo3_detector_lib SHARED

+ darknet/src/activation_kernels.cu

+ darknet/src/avgpool_layer_kernels.cu

+ darknet/src/convolutional_kernels.cu

+ darknet/src/crop_layer_kernels.cu

+ darknet/src/col2im_kernels.cu

+ darknet/src/blas_kernels.cu

+ darknet/src/deconvolutional_kernels.cu

+ darknet/src/dropout_layer_kernels.cu

+ darknet/src/im2col_kernels.cu

+ darknet/src/maxpool_layer_kernels.cu

+

+ darknet/src/gemm.c

+ darknet/src/utils.c

+ darknet/src/cuda.c

+ darknet/src/deconvolutional_layer.c

+ darknet/src/convolutional_layer.c

+ darknet/src/list.c

+ darknet/src/image.c

+ darknet/src/activations.c

+ darknet/src/im2col.c

+ darknet/src/col2im.c

+ darknet/src/blas.c

+ darknet/src/crop_layer.c

+ darknet/src/dropout_layer.c

+ darknet/src/maxpool_layer.c

+ darknet/src/softmax_layer.c

+ darknet/src/data.c

+ darknet/src/matrix.c

+ darknet/src/network.c

+ darknet/src/connected_layer.c

+ darknet/src/cost_layer.c

+ darknet/src/parser.c

+ darknet/src/option_list.c

+ darknet/src/detection_layer.c

+ darknet/src/route_layer.c

+ darknet/src/upsample_layer.c

+ darknet/src/box.c

+ darknet/src/normalization_layer.c

+ darknet/src/avgpool_layer.c

+ darknet/src/layer.c

+ darknet/src/local_layer.c

+ darknet/src/shortcut_layer.c

+ darknet/src/logistic_layer.c

+ darknet/src/activation_layer.c

+ darknet/src/rnn_layer.c

+ darknet/src/gru_layer.c

+ darknet/src/crnn_layer.c

+ darknet/src/batchnorm_layer.c

+ darknet/src/region_layer.c

+ darknet/src/reorg_layer.c

+ darknet/src/tree.c

+ darknet/src/lstm_layer.c

+ darknet/src/l2norm_layer.c

+ darknet/src/yolo_layer.c

+ )

+

+ target_compile_definitions(yolo3_detector_lib PUBLIC -DGPU)

+ cuda_add_cublas_to_target(yolo3_detector_lib)

+

+ if (OPENMP_FOUND)

+ set_target_properties(yolo3_detector_lib PROPERTIES

+ COMPILE_FLAGS ${OpenMP_CXX_FLAGS}

+ LINK_FLAGS ${OpenMP_CXX_FLAGS}

+ )

+ endif ()

+

+ target_include_directories(yolo3_detector_lib PRIVATE

+ ${OpenCV_INCLUDE_DIRS}

+ ${catkin_INCLUDE_DIRS}

+ ${Boost_INCLUDE_DIRS}

+ ${CUDA_INCLUDE_DIRS}

+ ${PROJECT_SOURCE_DIR}/darknet

+ ${PROJECT_SOURCE_DIR}/darknet/src

+ ${PROJECT_SOURCE_DIR}/src

+ )

+

+ target_link_libraries(yolo3_detector_lib

+ ${OpenCV_LIBRARIES}

+ ${catkin_LIBRARIES}

+ ${PCL_LIBRARIES}

+ ${Qt5Core_LIBRARIES}

+ ${CUDA_LIBRARIES}

+ ${CUDA_CUBLAS_LIBRARIES}

+ ${CUDA_curand_LIBRARY}

+ )

+

+ add_dependencies(yolo3_detector_lib

+ ${catkin_EXPORTED_TARGETS}

+ )

+

+ #ros node

+ cuda_add_executable(yolo3_detector

+ src/yolo3_node.cpp

+ src/yolo3_detector.cpp

+ src/yolo3_detector.h

+ )

+

+ target_compile_definitions(yolo3_detector PUBLIC -DGPU)

+

+ target_include_directories(yolo3_detector PRIVATE

+ ${CUDA_INCLUDE_DIRS}

+ ${catkin_INCLUDE_DIRS}

+ ${PROJECT_SOURCE_DIR}/darknet

+ ${PROJECT_SOURCE_DIR}/darknet/src

+ ${PROJECT_SOURCE_DIR}/src

+ )

+

+ target_link_libraries(yolo3_detector

+ ${catkin_LIBRARIES}

+ ${OpenCV_LIBS}

+ ${CUDA_LIBRARIES}

+ ${CUDA_CUBLAS_LIBRARIES}

+ ${CUDA_curand_LIBRARY}

+ yolo3_detector_lib

+ )

+ add_dependencies(yolo3_detector

+ ${catkin_EXPORTED_TARGETS}

+ )

+ELSE()

+ message("YOLO3 won't be built, CUDA was not found.")

+ENDIF ()

\ No newline at end of file

diff --git a/ros/src/computing/perception/detection/packages/yolo3_detector/README.md b/ros/src/computing/perception/detection/packages/yolo3_detector/README.md

new file mode 100644

index 00000000000..8b1e794c1f3

--- /dev/null

+++ b/ros/src/computing/perception/detection/packages/yolo3_detector/README.md

@@ -0,0 +1,49 @@

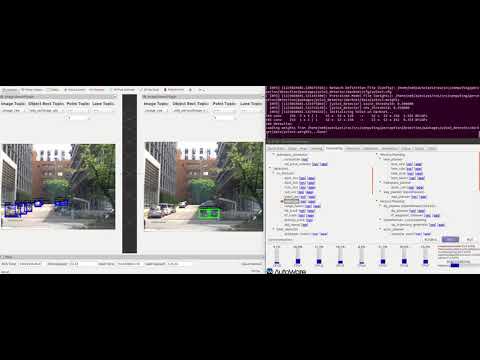

+# Yolo3 Detector

+

+Autoware package based on the YOLOv3 image detector.

+

+### Requirements

+

+* NVIDIA GPU with CUDA installed

+* YOLOv3 model pretrained on the COCO dataset

+

+### How to launch

+

+* From a sourced terminal:

+

+`roslaunch yolo3_detector yolo3.launch`

+

+* From Runtime Manager:

+

+Computing Tab -> Detection/ cv_detector -> `yolo3_wa`

+

+### Parameters

+

+Launch file available parameters:

+

+|Parameter| Type| Description|

+----------|-----|--------

+|`score_threshold`|*Double* |Detections with a confidence value larger than this value will be displayed. Default `0.5`.|

+|`nms_threshold`|*Double*|Non-maximum suppression area threshold ratio to merge proposals. Default `0.45`.|

+|`network_definition_file`|*String*|Network architecture definition configuration file. Default `yolov3.cfg`.|

+|`pretrained_model_file`|*String*|Path to pretrained model. Default `yolov3.weights`.|

+|`camera_id`|*String*|Camera workspace. Default `/`.|

+|`image_src`|*String*|Image source topic. Default `/image_raw`.|

+

+### Subscribed topics

+

+|Topic|Type|Objective|

+------|----|---------

+|`/image_raw`|`sensor_msgs/Image`|Source image stream to perform detection.|

+|`/config/Yolo3`|`autoware_msgs/ConfigSsd`|Configuration adjustment for threshold.|

+

+### Published topics

+

+|Topic|Type|Objective|

+------|----|---------

+|`/obj_car/image_obj`|`autoware_msgs/image_obj`|Contains the coordinates of the bounding box in image coordinates for objects detected as vehicles.|

+|`/obj_person/image_obj`|`autoware_msgs/image_obj`|Contains the coordinates of the bounding box in image coordinates for objects detected as pedestrians.|

+

+### Video

+

+[Demo video](https://www.youtube.com/watch?v=pO4vM4ehI98)
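Note on the README parameters: `score_threshold` and `nms_threshold` are described only in prose, so the following is a minimal sketch of how two such thresholds are commonly applied, under stated assumptions. It is not taken from this patch: the `Box` type and the `iou` and `filter_detections` functions are hypothetical names used for illustration, and the node's actual filtering may differ (for example, it may run per class).

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical box type for illustration; not the package's own detection type.
struct Box {
  float x, y, w, h;  // top-left corner, width, height (pixels)
  float score;       // detection confidence in [0, 1]
};

// Intersection-over-union of two axis-aligned boxes.
float iou(const Box& a, const Box& b) {
  const float left = std::max(a.x, b.x);
  const float top = std::max(a.y, b.y);
  const float right = std::min(a.x + a.w, b.x + b.w);
  const float bottom = std::min(a.y + a.h, b.y + b.h);
  const float inter = std::max(0.0f, right - left) * std::max(0.0f, bottom - top);
  const float uni = a.w * a.h + b.w * b.h - inter;
  return uni > 0.0f ? inter / uni : 0.0f;
}

// Keep boxes scoring above score_threshold, then greedily drop any box that
// overlaps an already kept, higher-scoring box by more than nms_threshold.
std::vector<Box> filter_detections(std::vector<Box> boxes,
                                   float score_threshold,
                                   float nms_threshold) {
  assert(nms_threshold >= 0.0f && nms_threshold <= 1.0f);

  // Step 1: discard low-confidence detections.
  boxes.erase(std::remove_if(boxes.begin(), boxes.end(),
                             [score_threshold](const Box& b) {
                               return b.score <= score_threshold;
                             }),
              boxes.end());

  // Step 2: visit the survivors from highest to lowest score.
  std::sort(boxes.begin(), boxes.end(),
            [](const Box& a, const Box& b) { return a.score > b.score; });

  // Step 3: greedy non-maximum suppression.
  std::vector<Box> kept;
  for (const Box& candidate : boxes) {
    bool suppressed = false;
    for (const Box& k : kept) {
      if (iou(candidate, k) > nms_threshold) {
        suppressed = true;
        break;
      }
    }
    if (!suppressed) kept.push_back(candidate);
  }
  return kept;
}
```

With the README defaults (`0.5` and `0.45`), two heavily overlapping boxes collapse to the higher-scoring one, while a distant box is kept as long as its score is above `0.5`.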

diff --git a/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/cfg/yolov3-voc.cfg b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/cfg/yolov3-voc.cfg

new file mode 100644

index 00000000000..3f3e8dfb31b

--- /dev/null

+++ b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/cfg/yolov3-voc.cfg

@@ -0,0 +1,785 @@

+[net]

+# Testing

+ batch=1

+ subdivisions=1

+# Training

+# batch=64

+# subdivisions=16

+width=416

+height=416

+channels=3

+momentum=0.9

+decay=0.0005

+angle=0

+saturation = 1.5

+exposure = 1.5

+hue=.1

+

+learning_rate=0.001

+burn_in=1000

+max_batches = 50200

+policy=steps

+steps=40000,45000

+scales=.1,.1

+

+

+

+[convolutional]

+batch_normalize=1

+filters=32

+size=3

+stride=1

+pad=1

+activation=leaky

+

+# Downsample

+

+[convolutional]

+batch_normalize=1

+filters=64

+size=3

+stride=2

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=32

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=64

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+# Downsample

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=3

+stride=2

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=64

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=64

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+# Downsample

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=3

+stride=2

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+# Downsample

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=3

+stride=2

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+# Downsample

+

+[convolutional]

+batch_normalize=1

+filters=1024

+size=3

+stride=2

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=1024

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=1024

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=1024

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=1024

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+######################

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+size=3

+stride=1

+pad=1

+filters=1024

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+size=3

+stride=1

+pad=1

+filters=1024

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+size=3

+stride=1

+pad=1

+filters=1024

+activation=leaky

+

+[convolutional]

+size=1

+stride=1

+pad=1

+filters=75

+activation=linear

+

+[yolo]

+mask = 6,7,8

+anchors = 10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326

+classes=20

+num=9

+jitter=.3

+ignore_thresh = .5

+truth_thresh = 1

+random=1

+

+[route]

+layers = -4

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[upsample]

+stride=2

+

+[route]

+layers = -1, 61

+

+

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+size=3

+stride=1

+pad=1

+filters=512

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+size=3

+stride=1

+pad=1

+filters=512

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+size=3

+stride=1

+pad=1

+filters=512

+activation=leaky

+

+[convolutional]

+size=1

+stride=1

+pad=1

+filters=75

+activation=linear

+

+[yolo]

+mask = 3,4,5

+anchors = 10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326

+classes=20

+num=9

+jitter=.3

+ignore_thresh = .5

+truth_thresh = 1

+random=1

+

+[route]

+layers = -4

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[upsample]

+stride=2

+

+[route]

+layers = -1, 36

+

+

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+size=3

+stride=1

+pad=1

+filters=256

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+size=3

+stride=1

+pad=1

+filters=256

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+size=3

+stride=1

+pad=1

+filters=256

+activation=leaky

+

+[convolutional]

+size=1

+stride=1

+pad=1

+filters=75

+activation=linear

+

+[yolo]

+mask = 0,1,2

+anchors = 10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326

+classes=20

+num=9

+jitter=.3

+ignore_thresh = .5

+truth_thresh = 1

+random=1

+

diff --git a/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/cfg/yolov3.cfg b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/cfg/yolov3.cfg

new file mode 100644

index 00000000000..5f3ab621302

--- /dev/null

+++ b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/cfg/yolov3.cfg

@@ -0,0 +1,789 @@

+[net]

+# Testing

+batch=1

+subdivisions=1

+# Training

+# batch=64

+# subdivisions=16

+width=416

+height=416

+channels=3

+momentum=0.9

+decay=0.0005

+angle=0

+saturation = 1.5

+exposure = 1.5

+hue=.1

+

+learning_rate=0.001

+burn_in=1000

+max_batches = 500200

+policy=steps

+steps=400000,450000

+scales=.1,.1

+

+[convolutional]

+batch_normalize=1

+filters=32

+size=3

+stride=1

+pad=1

+activation=leaky

+

+# Downsample

+

+[convolutional]

+batch_normalize=1

+filters=64

+size=3

+stride=2

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=32

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=64

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+# Downsample

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=3

+stride=2

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=64

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=64

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+# Downsample

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=3

+stride=2

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+# Downsample

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=3

+stride=2

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+# Downsample

+

+[convolutional]

+batch_normalize=1

+filters=1024

+size=3

+stride=2

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=1024

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=1024

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=1024

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=1024

+size=3

+stride=1

+pad=1

+activation=leaky

+

+[shortcut]

+from=-3

+activation=linear

+

+######################

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+size=3

+stride=1

+pad=1

+filters=1024

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+size=3

+stride=1

+pad=1

+filters=1024

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=512

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+size=3

+stride=1

+pad=1

+filters=1024

+activation=leaky

+

+[convolutional]

+size=1

+stride=1

+pad=1

+filters=255

+activation=linear

+

+

+[yolo]

+mask = 6,7,8

+anchors = 10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326

+classes=80

+num=9

+jitter=.3

+ignore_thresh = .5

+truth_thresh = 1

+random=1

+

+

+[route]

+layers = -4

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[upsample]

+stride=2

+

+[route]

+layers = -1, 61

+

+

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+size=3

+stride=1

+pad=1

+filters=512

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+size=3

+stride=1

+pad=1

+filters=512

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=256

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+size=3

+stride=1

+pad=1

+filters=512

+activation=leaky

+

+[convolutional]

+size=1

+stride=1

+pad=1

+filters=255

+activation=linear

+

+

+[yolo]

+mask = 3,4,5

+anchors = 10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326

+classes=80

+num=9

+jitter=.3

+ignore_thresh = .5

+truth_thresh = 1

+random=1

+

+

+

+[route]

+layers = -4

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[upsample]

+stride=2

+

+[route]

+layers = -1, 36

+

+

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+size=3

+stride=1

+pad=1

+filters=256

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+size=3

+stride=1

+pad=1

+filters=256

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+filters=128

+size=1

+stride=1

+pad=1

+activation=leaky

+

+[convolutional]

+batch_normalize=1

+size=3

+stride=1

+pad=1

+filters=256

+activation=leaky

+

+[convolutional]

+size=1

+stride=1

+pad=1

+filters=255

+activation=linear

+

+

+[yolo]

+mask = 0,1,2

+anchors = 10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326

+classes=80

+num=9

+jitter=.3

+ignore_thresh = .5

+truth_thresh = 1

+random=1

+
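Note on the two cfg files above: the 1x1 convolution in front of each `[yolo]` layer uses `filters=75` in `yolov3-voc.cfg` (`classes=20`) and `filters=255` in `yolov3.cfg` (`classes=80`). Both values follow the usual YOLOv3 convention: each of the three anchors selected by `mask` predicts four box coordinates, one objectness score, and one score per class. The sketch below only illustrates that arithmetic. The function names are hypothetical and not part of darknet.

```cpp
#include <cassert>

// Output channels expected by a [yolo] layer:
// anchors_per_scale * (4 box coords + 1 objectness + classes).
constexpr int yolo_head_filters(int classes, int anchors_per_scale) {
  return anchors_per_scale * (classes + 5);
}

// Sanity check against the values shipped in the two cfg files.
void check_shipped_cfgs() {
  assert(yolo_head_filters(20, 3) == 75);   // yolov3-voc.cfg
  assert(yolo_head_filters(80, 3) == 255);  // yolov3.cfg
}
```

When retraining for a different number of classes, `classes` in every `[yolo]` section and `filters` in the convolution directly before it need to change together.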

diff --git a/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/activation_kernels.cu b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/activation_kernels.cu

new file mode 100644

index 00000000000..5852eb58e0e

--- /dev/null

+++ b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/activation_kernels.cu

@@ -0,0 +1,200 @@

+#include "cuda_runtime.h"

+#include "curand.h"

+#include "cublas_v2.h"

+

+extern "C" {

+#include "activations.h"

+#include "cuda.h"

+}

+

+

+__device__ float lhtan_activate_kernel(float x)

+{

+ if(x < 0) return .001f*x;

+ if(x > 1) return .001f*(x-1.f) + 1.f;

+ return x;

+}

+__device__ float lhtan_gradient_kernel(float x)

+{

+ if(x > 0 && x < 1) return 1;

+ return .001;

+}

+

+__device__ float hardtan_activate_kernel(float x)

+{

+ if (x < -1) return -1;

+ if (x > 1) return 1;

+ return x;

+}

+__device__ float linear_activate_kernel(float x){return x;}

+__device__ float logistic_activate_kernel(float x){return 1.f/(1.f + expf(-x));}

+__device__ float loggy_activate_kernel(float x){return 2.f/(1.f + expf(-x)) - 1;}

+__device__ float relu_activate_kernel(float x){return x*(x>0);}

+__device__ float elu_activate_kernel(float x){return (x >= 0)*x + (x < 0)*(expf(x)-1);}

+__device__ float relie_activate_kernel(float x){return (x>0) ? x : .01f*x;}

+__device__ float ramp_activate_kernel(float x){return x*(x>0)+.1f*x;}

+__device__ float leaky_activate_kernel(float x){return (x>0) ? x : .1f*x;}

+__device__ float tanh_activate_kernel(float x){return (2.f/(1 + expf(-2*x)) - 1);}

+__device__ float plse_activate_kernel(float x)

+{

+ if(x < -4) return .01f * (x + 4);

+ if(x > 4) return .01f * (x - 4) + 1;

+ return .125f*x + .5f;

+}

+__device__ float stair_activate_kernel(float x)

+{

+ int n = floorf(x);

+ if (n%2 == 0) return floorf(x/2);

+ else return (x - n) + floorf(x/2);

+}

+

+

+__device__ float hardtan_gradient_kernel(float x)

+{

+ if (x > -1 && x < 1) return 1;

+ return 0;

+}

+__device__ float linear_gradient_kernel(float x){return 1;}

+__device__ float logistic_gradient_kernel(float x){return (1-x)*x;}

+__device__ float loggy_gradient_kernel(float x)

+{

+ float y = (x+1)/2;

+ return 2*(1-y)*y;

+}

+__device__ float relu_gradient_kernel(float x){return (x>0);}

+__device__ float elu_gradient_kernel(float x){return (x >= 0) + (x < 0)*(x + 1);}

+__device__ float relie_gradient_kernel(float x){return (x>0) ? 1 : .01f;}

+__device__ float ramp_gradient_kernel(float x){return (x>0)+.1f;}

+__device__ float leaky_gradient_kernel(float x){return (x>0) ? 1 : .1f;}

+__device__ float tanh_gradient_kernel(float x){return 1-x*x;}

+__device__ float plse_gradient_kernel(float x){return (x < 0 || x > 1) ? .01f : .125f;}

+__device__ float stair_gradient_kernel(float x)

+{

+ if (floorf(x) == x) return 0;

+ return 1;

+}

+

+__device__ float activate_kernel(float x, ACTIVATION a)

+{

+ switch(a){

+ case LINEAR:

+ return linear_activate_kernel(x);

+ case LOGISTIC:

+ return logistic_activate_kernel(x);

+ case LOGGY:

+ return loggy_activate_kernel(x);

+ case RELU:

+ return relu_activate_kernel(x);

+ case ELU:

+ return elu_activate_kernel(x);

+ case RELIE:

+ return relie_activate_kernel(x);

+ case RAMP:

+ return ramp_activate_kernel(x);

+ case LEAKY:

+ return leaky_activate_kernel(x);

+ case TANH:

+ return tanh_activate_kernel(x);

+ case PLSE:

+ return plse_activate_kernel(x);

+ case STAIR:

+ return stair_activate_kernel(x);

+ case HARDTAN:

+ return hardtan_activate_kernel(x);

+ case LHTAN:

+ return lhtan_activate_kernel(x);

+ }

+ return 0;

+}

+

+__device__ float gradient_kernel(float x, ACTIVATION a)

+{

+ switch(a){

+ case LINEAR:

+ return linear_gradient_kernel(x);

+ case LOGISTIC:

+ return logistic_gradient_kernel(x);

+ case LOGGY:

+ return loggy_gradient_kernel(x);

+ case RELU:

+ return relu_gradient_kernel(x);

+ case ELU:

+ return elu_gradient_kernel(x);

+ case RELIE:

+ return relie_gradient_kernel(x);

+ case RAMP:

+ return ramp_gradient_kernel(x);

+ case LEAKY:

+ return leaky_gradient_kernel(x);

+ case TANH:

+ return tanh_gradient_kernel(x);

+ case PLSE:

+ return plse_gradient_kernel(x);

+ case STAIR:

+ return stair_gradient_kernel(x);

+ case HARDTAN:

+ return hardtan_gradient_kernel(x);

+ case LHTAN:

+ return lhtan_gradient_kernel(x);

+ }

+ return 0;

+}

+

+__global__ void binary_gradient_array_kernel(float *x, float *dy, int n, int s, BINARY_ACTIVATION a, float *dx)

+{

+ int id = (blockIdx.x + blockIdx.y*gridDim.x) * blockDim.x + threadIdx.x;

+ int i = id % s;

+ int b = id / s;

+ if(id < n) { // read x only for in-range threads

+ float x1 = x[b*s + i];

+ float x2 = x[b*s + s/2 + i];

+ float de = dy[id];

+ dx[b*s + i] = x2*de;

+ dx[b*s + s/2 + i] = x1*de;

+ }

+}

+

+extern "C" void binary_gradient_array_gpu(float *x, float *dx, int n, int size, BINARY_ACTIVATION a, float *y)

+{

+ binary_gradient_array_kernel<<<cuda_gridsize(n/2), BLOCK>>>(x, dx, n/2, size, a, y);

+ check_error(cudaPeekAtLastError());

+}

+__global__ void binary_activate_array_kernel(float *x, int n, int s, BINARY_ACTIVATION a, float *y)

+{

+ int id = (blockIdx.x + blockIdx.y*gridDim.x) * blockDim.x + threadIdx.x;

+ int i = id % s;

+ int b = id / s;

+ if(id >= n) return; // avoid out-of-range reads of x

+ float x1 = x[b*s + i], x2 = x[b*s + s/2 + i];

+ y[id] = x1*x2;

+}

+

+extern "C" void binary_activate_array_gpu(float *x, int n, int size, BINARY_ACTIVATION a, float *y)

+{

+ binary_activate_array_kernel<<<cuda_gridsize(n/2), BLOCK>>>(x, n/2, size, a, y);

+ check_error(cudaPeekAtLastError());

+}

+

+__global__ void activate_array_kernel(float *x, int n, ACTIVATION a)

+{

+ int i = (blockIdx.x + blockIdx.y*gridDim.x) * blockDim.x + threadIdx.x;

+ if(i < n) x[i] = activate_kernel(x[i], a);

+}

+

+__global__ void gradient_array_kernel(float *x, int n, ACTIVATION a, float *delta)

+{

+ int i = (blockIdx.x + blockIdx.y*gridDim.x) * blockDim.x + threadIdx.x;

+ if(i < n) delta[i] *= gradient_kernel(x[i], a);

+}

+

+extern "C" void activate_array_gpu(float *x, int n, ACTIVATION a)

+{

+ activate_array_kernel<<<cuda_gridsize(n), BLOCK>>>(x, n, a);

+ check_error(cudaPeekAtLastError());

+}

+

+extern "C" void gradient_array_gpu(float *x, int n, ACTIVATION a, float *delta)

+{

+ gradient_array_kernel<<<cuda_gridsize(n), BLOCK>>>(x, n, a, delta);

+ check_error(cudaPeekAtLastError());

+}

diff --git a/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/activation_layer.c b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/activation_layer.c

new file mode 100644

index 00000000000..b4ba953967b

--- /dev/null

+++ b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/activation_layer.c

@@ -0,0 +1,63 @@

+#include "activation_layer.h"

+#include "utils.h"

+#include "cuda.h"

+#include "blas.h"

+#include "gemm.h"

+

+#include <math.h>

+#include <stdio.h>

+#include <stdlib.h>

+#include <string.h>

+

+layer make_activation_layer(int batch, int inputs, ACTIVATION activation)

+{

+ layer l = {0};

+ l.type = ACTIVE;

+

+ l.inputs = inputs;

+ l.outputs = inputs;

+ l.batch=batch;

+

+ l.output = calloc(batch*inputs, sizeof(float));

+ l.delta = calloc(batch*inputs, sizeof(float));

+

+ l.forward = forward_activation_layer;

+ l.backward = backward_activation_layer;

+#ifdef GPU

+ l.forward_gpu = forward_activation_layer_gpu;

+ l.backward_gpu = backward_activation_layer_gpu;

+

+ l.output_gpu = cuda_make_array(l.output, inputs*batch);

+ l.delta_gpu = cuda_make_array(l.delta, inputs*batch);

+#endif

+ l.activation = activation;

+ fprintf(stderr, "Activation Layer: %d inputs\n", inputs);

+ return l;

+}

+

+void forward_activation_layer(layer l, network net)

+{

+ copy_cpu(l.outputs*l.batch, net.input, 1, l.output, 1);

+ activate_array(l.output, l.outputs*l.batch, l.activation);

+}

+

+void backward_activation_layer(layer l, network net)

+{

+ gradient_array(l.output, l.outputs*l.batch, l.activation, l.delta);

+ copy_cpu(l.outputs*l.batch, l.delta, 1, net.delta, 1);

+}

+

+#ifdef GPU

+

+void forward_activation_layer_gpu(layer l, network net)

+{

+ copy_gpu(l.outputs*l.batch, net.input_gpu, 1, l.output_gpu, 1);

+ activate_array_gpu(l.output_gpu, l.outputs*l.batch, l.activation);

+}

+

+void backward_activation_layer_gpu(layer l, network net)

+{

+ gradient_array_gpu(l.output_gpu, l.outputs*l.batch, l.activation, l.delta_gpu);

+ copy_gpu(l.outputs*l.batch, l.delta_gpu, 1, net.delta_gpu, 1);

+}

+#endif

diff --git a/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/activation_layer.h b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/activation_layer.h

new file mode 100644

index 00000000000..42118a84e83

--- /dev/null

+++ b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/activation_layer.h

@@ -0,0 +1,19 @@

+#ifndef ACTIVATION_LAYER_H

+#define ACTIVATION_LAYER_H

+

+#include "activations.h"

+#include "layer.h"

+#include "network.h"

+

+layer make_activation_layer(int batch, int inputs, ACTIVATION activation);

+

+void forward_activation_layer(layer l, network net);

+void backward_activation_layer(layer l, network net);

+

+#ifdef GPU

+void forward_activation_layer_gpu(layer l, network net);

+void backward_activation_layer_gpu(layer l, network net);

+#endif

+

+#endif

+

diff --git a/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/activations.c b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/activations.c

new file mode 100644

index 00000000000..0cbb2f5546e

--- /dev/null

+++ b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/activations.c

@@ -0,0 +1,143 @@

+#include "activations.h"

+

+#include <math.h>

+#include <stdio.h>

+#include <stdlib.h>

+#include <string.h>

+

+char *get_activation_string(ACTIVATION a)

+{

+ switch(a){

+ case LOGISTIC:

+ return "logistic";

+ case LOGGY:

+ return "loggy";

+ case RELU:

+ return "relu";

+ case ELU:

+ return "elu";

+ case RELIE:

+ return "relie";

+ case RAMP:

+ return "ramp";

+ case LINEAR:

+ return "linear";

+ case TANH:

+ return "tanh";

+ case PLSE:

+ return "plse";

+ case LEAKY:

+ return "leaky";

+ case STAIR:

+ return "stair";

+ case HARDTAN:

+ return "hardtan";

+ case LHTAN:

+ return "lhtan";

+ default:

+ break;

+ }

+ return "relu";

+}

+

+ACTIVATION get_activation(char *s)

+{

+ if (strcmp(s, "logistic")==0) return LOGISTIC;

+ if (strcmp(s, "loggy")==0) return LOGGY;

+ if (strcmp(s, "relu")==0) return RELU;

+ if (strcmp(s, "elu")==0) return ELU;

+ if (strcmp(s, "relie")==0) return RELIE;

+ if (strcmp(s, "plse")==0) return PLSE;

+ if (strcmp(s, "hardtan")==0) return HARDTAN;

+ if (strcmp(s, "lhtan")==0) return LHTAN;

+ if (strcmp(s, "linear")==0) return LINEAR;

+ if (strcmp(s, "ramp")==0) return RAMP;

+ if (strcmp(s, "leaky")==0) return LEAKY;

+ if (strcmp(s, "tanh")==0) return TANH;

+ if (strcmp(s, "stair")==0) return STAIR;

+ fprintf(stderr, "Couldn't find activation function %s, going with ReLU\n", s);

+ return RELU;

+}

+

+float activate(float x, ACTIVATION a)

+{

+ switch(a){

+ case LINEAR:

+ return linear_activate(x);

+ case LOGISTIC:

+ return logistic_activate(x);

+ case LOGGY:

+ return loggy_activate(x);

+ case RELU:

+ return relu_activate(x);

+ case ELU:

+ return elu_activate(x);

+ case RELIE:

+ return relie_activate(x);

+ case RAMP:

+ return ramp_activate(x);

+ case LEAKY:

+ return leaky_activate(x);

+ case TANH:

+ return tanh_activate(x);

+ case PLSE:

+ return plse_activate(x);

+ case STAIR:

+ return stair_activate(x);

+ case HARDTAN:

+ return hardtan_activate(x);

+ case LHTAN:

+ return lhtan_activate(x);

+ }

+ return 0;

+}

+

+void activate_array(float *x, const int n, const ACTIVATION a)

+{

+ int i;

+ for(i = 0; i < n; ++i){

+ x[i] = activate(x[i], a);

+ }

+}

+

+float gradient(float x, ACTIVATION a)

+{

+ switch(a){

+ case LINEAR:

+ return linear_gradient(x);

+ case LOGISTIC:

+ return logistic_gradient(x);

+ case LOGGY:

+ return loggy_gradient(x);

+ case RELU:

+ return relu_gradient(x);

+ case ELU:

+ return elu_gradient(x);

+ case RELIE:

+ return relie_gradient(x);

+ case RAMP:

+ return ramp_gradient(x);

+ case LEAKY:

+ return leaky_gradient(x);

+ case TANH:

+ return tanh_gradient(x);

+ case PLSE:

+ return plse_gradient(x);

+ case STAIR:

+ return stair_gradient(x);

+ case HARDTAN:

+ return hardtan_gradient(x);

+ case LHTAN:

+ return lhtan_gradient(x);

+ }

+ return 0;

+}

+

+void gradient_array(const float *x, const int n, const ACTIVATION a, float *delta)

+{

+ int i;

+ for(i = 0; i < n; ++i){

+ delta[i] *= gradient(x[i], a);

+ }

+}

+

diff --git a/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/activations.h b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/activations.h

new file mode 100644

index 00000000000..d456dbe3c2b

--- /dev/null

+++ b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/activations.h

@@ -0,0 +1,85 @@

+#ifndef ACTIVATIONS_H

+#define ACTIVATIONS_H

+#include "darknet.h"

+#include "cuda.h"

+#include "math.h"

+

+ACTIVATION get_activation(char *s);

+

+char *get_activation_string(ACTIVATION a);

+float activate(float x, ACTIVATION a);

+float gradient(float x, ACTIVATION a);

+void gradient_array(const float *x, const int n, const ACTIVATION a, float *delta);

+void activate_array(float *x, const int n, const ACTIVATION a);

+#ifdef GPU

+void activate_array_gpu(float *x, int n, ACTIVATION a);

+void gradient_array_gpu(float *x, int n, ACTIVATION a, float *delta);

+#endif

+

+static inline float stair_activate(float x)

+{

+ int n = floor(x);

+ if (n%2 == 0) return floor(x/2.);

+ else return (x - n) + floor(x/2.);

+}

+static inline float hardtan_activate(float x)

+{

+ if (x < -1) return -1;

+ if (x > 1) return 1;

+ return x;

+}

+static inline float linear_activate(float x){return x;}

+static inline float logistic_activate(float x){return 1./(1. + exp(-x));}

+static inline float loggy_activate(float x){return 2./(1. + exp(-x)) - 1;}

+static inline float relu_activate(float x){return x*(x>0);}

+static inline float elu_activate(float x){return (x >= 0)*x + (x < 0)*(exp(x)-1);}

+static inline float relie_activate(float x){return (x>0) ? x : .01*x;}

+static inline float ramp_activate(float x){return x*(x>0)+.1*x;}

+static inline float leaky_activate(float x){return (x>0) ? x : .1*x;}

+static inline float tanh_activate(float x){return (exp(2*x)-1)/(exp(2*x)+1);}

+static inline float plse_activate(float x)

+{

+ if(x < -4) return .01 * (x + 4);

+ if(x > 4) return .01 * (x - 4) + 1;

+ return .125*x + .5;

+}

+

+static inline float lhtan_activate(float x)

+{

+ if(x < 0) return .001*x;

+ if(x > 1) return .001*(x-1) + 1;

+ return x;

+}

+static inline float lhtan_gradient(float x)

+{

+ if(x > 0 && x < 1) return 1;

+ return .001;

+}

+

+static inline float hardtan_gradient(float x)

+{

+ if (x > -1 && x < 1) return 1;

+ return 0;

+}

+static inline float linear_gradient(float x){return 1;}

+static inline float logistic_gradient(float x){return (1-x)*x;}

+static inline float loggy_gradient(float x)

+{

+ float y = (x+1.)/2.;

+ return 2*(1-y)*y;

+}

+static inline float stair_gradient(float x)

+{

+ if (floor(x) == x) return 0;

+ return 1;

+}

+static inline float relu_gradient(float x){return (x>0);}

+static inline float elu_gradient(float x){return (x >= 0) + (x < 0)*(x + 1);}

+static inline float relie_gradient(float x){return (x>0) ? 1 : .01;}

+static inline float ramp_gradient(float x){return (x>0)+.1;}

+static inline float leaky_gradient(float x){return (x>0) ? 1 : .1;}

+static inline float tanh_gradient(float x){return 1-x*x;}

+static inline float plse_gradient(float x){return (x < 0 || x > 1) ? .01 : .125;}

+

+#endif

+

diff --git a/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/avgpool_layer.c b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/avgpool_layer.c

new file mode 100644

index 00000000000..83034dbecf4

--- /dev/null

+++ b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/avgpool_layer.c

@@ -0,0 +1,71 @@

+#include "avgpool_layer.h"

+#include "cuda.h"

+#include <stdio.h>

+

+avgpool_layer make_avgpool_layer(int batch, int w, int h, int c)

+{

+ fprintf(stderr, "avg %4d x%4d x%4d -> %4d\n", w, h, c, c);

+ avgpool_layer l = {0};

+ l.type = AVGPOOL;

+ l.batch = batch;

+ l.h = h;

+ l.w = w;

+ l.c = c;

+ l.out_w = 1;

+ l.out_h = 1;

+ l.out_c = c;

+ l.outputs = l.out_c;

+ l.inputs = h*w*c;

+ int output_size = l.outputs * batch;

+ l.output = calloc(output_size, sizeof(float));

+ l.delta = calloc(output_size, sizeof(float));

+ l.forward = forward_avgpool_layer;

+ l.backward = backward_avgpool_layer;

+ #ifdef GPU

+ l.forward_gpu = forward_avgpool_layer_gpu;

+ l.backward_gpu = backward_avgpool_layer_gpu;

+ l.output_gpu = cuda_make_array(l.output, output_size);

+ l.delta_gpu = cuda_make_array(l.delta, output_size);

+ #endif

+ return l;

+}

+

+void resize_avgpool_layer(avgpool_layer *l, int w, int h)

+{

+ l->w = w;

+ l->h = h;

+ l->inputs = h*w*l->c;

+}

+

+void forward_avgpool_layer(const avgpool_layer l, network net)

+{

+ int b,i,k;

+

+ for(b = 0; b < l.batch; ++b){

+ for(k = 0; k < l.c; ++k){

+ int out_index = k + b*l.c;

+ l.output[out_index] = 0;

+ for(i = 0; i < l.h*l.w; ++i){

+ int in_index = i + l.h*l.w*(k + b*l.c);

+ l.output[out_index] += net.input[in_index];

+ }

+ l.output[out_index] /= l.h*l.w;

+ }

+ }

+}

+

+void backward_avgpool_layer(const avgpool_layer l, network net)

+{

+ int b,i,k;

+

+ for(b = 0; b < l.batch; ++b){

+ for(k = 0; k < l.c; ++k){

+ int out_index = k + b*l.c;

+ for(i = 0; i < l.h*l.w; ++i){

+ int in_index = i + l.h*l.w*(k + b*l.c);

+ net.delta[in_index] += l.delta[out_index] / (l.h*l.w);

+ }

+ }

+ }

+}

+

diff --git a/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/avgpool_layer.h b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/avgpool_layer.h

new file mode 100644

index 00000000000..3bd356c4e39

--- /dev/null

+++ b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/avgpool_layer.h

@@ -0,0 +1,23 @@

+#ifndef AVGPOOL_LAYER_H

+#define AVGPOOL_LAYER_H

+

+#include "image.h"

+#include "cuda.h"

+#include "layer.h"

+#include "network.h"

+

+typedef layer avgpool_layer;

+

+image get_avgpool_image(avgpool_layer l);

+avgpool_layer make_avgpool_layer(int batch, int w, int h, int c);

+void resize_avgpool_layer(avgpool_layer *l, int w, int h);

+void forward_avgpool_layer(const avgpool_layer l, network net);

+void backward_avgpool_layer(const avgpool_layer l, network net);

+

+#ifdef GPU

+void forward_avgpool_layer_gpu(avgpool_layer l, network net);

+void backward_avgpool_layer_gpu(avgpool_layer l, network net);

+#endif

+

+#endif

+

diff --git a/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/avgpool_layer_kernels.cu b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/avgpool_layer_kernels.cu

new file mode 100644

index 00000000000..a7eca3aeae9

--- /dev/null

+++ b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/avgpool_layer_kernels.cu

@@ -0,0 +1,61 @@

+#include "cuda_runtime.h"

+#include "curand.h"

+#include "cublas_v2.h"

+

+extern "C" {

+#include "avgpool_layer.h"

+#include "cuda.h"

+}

+

+__global__ void forward_avgpool_layer_kernel(int n, int w, int h, int c, float *input, float *output)

+{

+ int id = (blockIdx.x + blockIdx.y*gridDim.x) * blockDim.x + threadIdx.x;

+ if(id >= n) return;

+

+ int k = id % c;

+ id /= c;

+ int b = id;

+

+ int i;

+ int out_index = (k + c*b);

+ output[out_index] = 0;

+ for(i = 0; i < w*h; ++i){

+ int in_index = i + h*w*(k + b*c);

+ output[out_index] += input[in_index];

+ }

+ output[out_index] /= w*h;

+}

+

+__global__ void backward_avgpool_layer_kernel(int n, int w, int h, int c, float *in_delta, float *out_delta)

+{

+ int id = (blockIdx.x + blockIdx.y*gridDim.x) * blockDim.x + threadIdx.x;

+ if(id >= n) return;

+

+ int k = id % c;

+ id /= c;

+ int b = id;

+

+ int i;

+ int out_index = (k + c*b);

+ for(i = 0; i < w*h; ++i){

+ int in_index = i + h*w*(k + b*c);

+ in_delta[in_index] += out_delta[out_index] / (w*h);

+ }

+}

+

+extern "C" void forward_avgpool_layer_gpu(avgpool_layer layer, network net)

+{

+ size_t n = layer.c*layer.batch;

+

+ forward_avgpool_layer_kernel<<<cuda_gridsize(n), BLOCK>>>(n, layer.w, layer.h, layer.c, net.input_gpu, layer.output_gpu);

+ check_error(cudaPeekAtLastError());

+}

+

+extern "C" void backward_avgpool_layer_gpu(avgpool_layer layer, network net)

+{

+ size_t n = layer.c*layer.batch;

+

+ backward_avgpool_layer_kernel<<<cuda_gridsize(n), BLOCK>>>(n, layer.w, layer.h, layer.c, net.delta_gpu, layer.delta_gpu);

+ check_error(cudaPeekAtLastError());

+}

+

diff --git a/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/batchnorm_layer.c b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/batchnorm_layer.c

new file mode 100644

index 00000000000..ebff387cc4b

--- /dev/null

+++ b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/batchnorm_layer.c

@@ -0,0 +1,279 @@

+#include "convolutional_layer.h"

+#include "batchnorm_layer.h"

+#include "blas.h"

+#include <stdio.h>

+

+layer make_batchnorm_layer(int batch, int w, int h, int c)

+{

+ fprintf(stderr, "Batch Normalization Layer: %d x %d x %d image\n", w,h,c);

+ layer l = {0};

+ l.type = BATCHNORM;

+ l.batch = batch;

+ l.h = l.out_h = h;

+ l.w = l.out_w = w;

+ l.c = l.out_c = c;

+ l.output = calloc(h * w * c * batch, sizeof(float));

+ l.delta = calloc(h * w * c * batch, sizeof(float));

+ l.inputs = w*h*c;

+ l.outputs = l.inputs;

+

+ l.scales = calloc(c, sizeof(float));

+ l.scale_updates = calloc(c, sizeof(float));

+ l.biases = calloc(c, sizeof(float));

+ l.bias_updates = calloc(c, sizeof(float));

+ int i;

+ for(i = 0; i < c; ++i){

+ l.scales[i] = 1;

+ }

+

+ l.mean = calloc(c, sizeof(float));

+ l.variance = calloc(c, sizeof(float));

+

+ l.rolling_mean = calloc(c, sizeof(float));

+ l.rolling_variance = calloc(c, sizeof(float));

+

+ l.forward = forward_batchnorm_layer;

+ l.backward = backward_batchnorm_layer;

+#ifdef GPU

+ l.forward_gpu = forward_batchnorm_layer_gpu;

+ l.backward_gpu = backward_batchnorm_layer_gpu;

+

+ l.output_gpu = cuda_make_array(l.output, h * w * c * batch);

+ l.delta_gpu = cuda_make_array(l.delta, h * w * c * batch);

+

+ l.biases_gpu = cuda_make_array(l.biases, c);

+ l.bias_updates_gpu = cuda_make_array(l.bias_updates, c);

+

+ l.scales_gpu = cuda_make_array(l.scales, c);

+ l.scale_updates_gpu = cuda_make_array(l.scale_updates, c);

+

+ l.mean_gpu = cuda_make_array(l.mean, c);

+ l.variance_gpu = cuda_make_array(l.variance, c);

+

+ l.rolling_mean_gpu = cuda_make_array(l.mean, c);

+ l.rolling_variance_gpu = cuda_make_array(l.variance, c);

+

+ l.mean_delta_gpu = cuda_make_array(l.mean, c);

+ l.variance_delta_gpu = cuda_make_array(l.variance, c);

+

+ l.x_gpu = cuda_make_array(l.output, l.batch*l.outputs);

+ l.x_norm_gpu = cuda_make_array(l.output, l.batch*l.outputs);

+ #ifdef CUDNN

+ cudnnCreateTensorDescriptor(&l.normTensorDesc);

+ cudnnCreateTensorDescriptor(&l.dstTensorDesc);

+ cudnnSetTensor4dDescriptor(l.dstTensorDesc, CUDNN_TENSOR_NCHW, CUDNN_DATA_FLOAT, l.batch, l.out_c, l.out_h, l.out_w);

+ cudnnSetTensor4dDescriptor(l.normTensorDesc, CUDNN_TENSOR_NCHW, CUDNN_DATA_FLOAT, 1, l.out_c, 1, 1);

+

+ #endif

+#endif

+ return l;

+}

+

+void backward_scale_cpu(float *x_norm, float *delta, int batch, int n, int size, float *scale_updates)

+{

+ int i,b,f;

+ for(f = 0; f < n; ++f){

+ float sum = 0;

+ for(b = 0; b < batch; ++b){

+ for(i = 0; i < size; ++i){

+ int index = i + size*(f + n*b);

+ sum += delta[index] * x_norm[index];

+ }

+ }

+ scale_updates[f] += sum;

+ }

+}

+

+void mean_delta_cpu(float *delta, float *variance, int batch, int filters, int spatial, float *mean_delta)

+{

+

+ int i,j,k;

+ for(i = 0; i < filters; ++i){

+ mean_delta[i] = 0;

+ for (j = 0; j < batch; ++j) {

+ for (k = 0; k < spatial; ++k) {

+ int index = j*filters*spatial + i*spatial + k;

+ mean_delta[i] += delta[index];

+ }

+ }

+ mean_delta[i] *= (-1./sqrt(variance[i] + .00001f));

+ }

+}

+void variance_delta_cpu(float *x, float *delta, float *mean, float *variance, int batch, int filters, int spatial, float *variance_delta)

+{

+

+ int i,j,k;

+ for(i = 0; i < filters; ++i){

+ variance_delta[i] = 0;

+ for(j = 0; j < batch; ++j){

+ for(k = 0; k < spatial; ++k){

+ int index = j*filters*spatial + i*spatial + k;

+ variance_delta[i] += delta[index]*(x[index] - mean[i]);

+ }

+ }

+ variance_delta[i] *= -.5 * pow(variance[i] + .00001f, (float)(-3./2.));

+ }

+}

+void normalize_delta_cpu(float *x, float *mean, float *variance, float *mean_delta, float *variance_delta, int batch, int filters, int spatial, float *delta)

+{

+ int f, j, k;

+ for(j = 0; j < batch; ++j){

+ for(f = 0; f < filters; ++f){

+ for(k = 0; k < spatial; ++k){

+ int index = j*filters*spatial + f*spatial + k;

+ delta[index] = delta[index] * 1./(sqrt(variance[f] + .00001f)) + variance_delta[f] * 2. * (x[index] - mean[f]) / (spatial * batch) + mean_delta[f]/(spatial*batch);

+ }

+ }

+ }

+}

+

+void resize_batchnorm_layer(layer *layer, int w, int h)

+{

+ fprintf(stderr, "Not implemented\n");

+}

+

+void forward_batchnorm_layer(layer l, network net)

+{

+ if(l.type == BATCHNORM) copy_cpu(l.outputs*l.batch, net.input, 1, l.output, 1);

+ copy_cpu(l.outputs*l.batch, l.output, 1, l.x, 1);

+ if(net.train){

+ mean_cpu(l.output, l.batch, l.out_c, l.out_h*l.out_w, l.mean);

+ variance_cpu(l.output, l.mean, l.batch, l.out_c, l.out_h*l.out_w, l.variance);

+

+ scal_cpu(l.out_c, .99, l.rolling_mean, 1);

+ axpy_cpu(l.out_c, .01, l.mean, 1, l.rolling_mean, 1);

+ scal_cpu(l.out_c, .99, l.rolling_variance, 1);

+ axpy_cpu(l.out_c, .01, l.variance, 1, l.rolling_variance, 1);

+

+ normalize_cpu(l.output, l.mean, l.variance, l.batch, l.out_c, l.out_h*l.out_w);

+ copy_cpu(l.outputs*l.batch, l.output, 1, l.x_norm, 1);

+ } else {

+ normalize_cpu(l.output, l.rolling_mean, l.rolling_variance, l.batch, l.out_c, l.out_h*l.out_w);

+ }

+ scale_bias(l.output, l.scales, l.batch, l.out_c, l.out_h*l.out_w);

+ add_bias(l.output, l.biases, l.batch, l.out_c, l.out_h*l.out_w);

+}

+

+void backward_batchnorm_layer(layer l, network net)

+{

+ if(!net.train){

+ l.mean = l.rolling_mean;

+ l.variance = l.rolling_variance;

+ }

+ backward_bias(l.bias_updates, l.delta, l.batch, l.out_c, l.out_w*l.out_h);

+ backward_scale_cpu(l.x_norm, l.delta, l.batch, l.out_c, l.out_w*l.out_h, l.scale_updates);

+

+ scale_bias(l.delta, l.scales, l.batch, l.out_c, l.out_h*l.out_w);

+

+ mean_delta_cpu(l.delta, l.variance, l.batch, l.out_c, l.out_w*l.out_h, l.mean_delta);

+ variance_delta_cpu(l.x, l.delta, l.mean, l.variance, l.batch, l.out_c, l.out_w*l.out_h, l.variance_delta);

+ normalize_delta_cpu(l.x, l.mean, l.variance, l.mean_delta, l.variance_delta, l.batch, l.out_c, l.out_w*l.out_h, l.delta);

+ if(l.type == BATCHNORM) copy_cpu(l.outputs*l.batch, l.delta, 1, net.delta, 1);

+}

+

+#ifdef GPU

+

+void pull_batchnorm_layer(layer l)

+{

+ cuda_pull_array(l.scales_gpu, l.scales, l.c);

+ cuda_pull_array(l.rolling_mean_gpu, l.rolling_mean, l.c);

+ cuda_pull_array(l.rolling_variance_gpu, l.rolling_variance, l.c);

+}

+void push_batchnorm_layer(layer l)

+{

+ cuda_push_array(l.scales_gpu, l.scales, l.c);

+ cuda_push_array(l.rolling_mean_gpu, l.rolling_mean, l.c);

+ cuda_push_array(l.rolling_variance_gpu, l.rolling_variance, l.c);

+}

+

+void forward_batchnorm_layer_gpu(layer l, network net)

+{

+ if(l.type == BATCHNORM) copy_gpu(l.outputs*l.batch, net.input_gpu, 1, l.output_gpu, 1);

+ copy_gpu(l.outputs*l.batch, l.output_gpu, 1, l.x_gpu, 1);

+ if (net.train) {

+#ifdef CUDNN

+ float one = 1;

+ float zero = 0;

+ cudnnBatchNormalizationForwardTraining(cudnn_handle(),

+ CUDNN_BATCHNORM_SPATIAL,

+ &one,

+ &zero,

+ l.dstTensorDesc,

+ l.x_gpu,

+ l.dstTensorDesc,

+ l.output_gpu,

+ l.normTensorDesc,

+ l.scales_gpu,

+ l.biases_gpu,

+ .01,

+ l.rolling_mean_gpu,

+ l.rolling_variance_gpu,

+ .00001,

+ l.mean_gpu,

+ l.variance_gpu);

+#else

+ fast_mean_gpu(l.output_gpu, l.batch, l.out_c, l.out_h*l.out_w, l.mean_gpu);

+ fast_variance_gpu(l.output_gpu, l.mean_gpu, l.batch, l.out_c, l.out_h*l.out_w, l.variance_gpu);

+

+ scal_gpu(l.out_c, .99, l.rolling_mean_gpu, 1);

+ axpy_gpu(l.out_c, .01, l.mean_gpu, 1, l.rolling_mean_gpu, 1);

+ scal_gpu(l.out_c, .99, l.rolling_variance_gpu, 1);

+ axpy_gpu(l.out_c, .01, l.variance_gpu, 1, l.rolling_variance_gpu, 1);

+

+ copy_gpu(l.outputs*l.batch, l.output_gpu, 1, l.x_gpu, 1);

+ normalize_gpu(l.output_gpu, l.mean_gpu, l.variance_gpu, l.batch, l.out_c, l.out_h*l.out_w);

+ copy_gpu(l.outputs*l.batch, l.output_gpu, 1, l.x_norm_gpu, 1);

+

+ scale_bias_gpu(l.output_gpu, l.scales_gpu, l.batch, l.out_c, l.out_h*l.out_w);

+ add_bias_gpu(l.output_gpu, l.biases_gpu, l.batch, l.out_c, l.out_w*l.out_h);

+#endif

+ } else {

+ normalize_gpu(l.output_gpu, l.rolling_mean_gpu, l.rolling_variance_gpu, l.batch, l.out_c, l.out_h*l.out_w);

+ scale_bias_gpu(l.output_gpu, l.scales_gpu, l.batch, l.out_c, l.out_h*l.out_w);

+ add_bias_gpu(l.output_gpu, l.biases_gpu, l.batch, l.out_c, l.out_w*l.out_h);

+ }

+

+}

+

+void backward_batchnorm_layer_gpu(layer l, network net)

+{

+ if(!net.train){

+ l.mean_gpu = l.rolling_mean_gpu;

+ l.variance_gpu = l.rolling_variance_gpu;

+ }

+#ifdef CUDNN

+ float one = 1;

+ float zero = 0;

+ cudnnBatchNormalizationBackward(cudnn_handle(),

+ CUDNN_BATCHNORM_SPATIAL,

+ &one,

+ &zero,

+ &one,

+ &one,

+ l.dstTensorDesc,

+ l.x_gpu,

+ l.dstTensorDesc,

+ l.delta_gpu,

+ l.dstTensorDesc,

+ l.x_norm_gpu,

+ l.normTensorDesc,

+ l.scales_gpu,

+ l.scale_updates_gpu,

+ l.bias_updates_gpu,

+ .00001,

+ l.mean_gpu,

+ l.variance_gpu);

+ copy_gpu(l.outputs*l.batch, l.x_norm_gpu, 1, l.delta_gpu, 1);

+#else

+ backward_bias_gpu(l.bias_updates_gpu, l.delta_gpu, l.batch, l.out_c, l.out_w*l.out_h);

+ backward_scale_gpu(l.x_norm_gpu, l.delta_gpu, l.batch, l.out_c, l.out_w*l.out_h, l.scale_updates_gpu);

+

+ scale_bias_gpu(l.delta_gpu, l.scales_gpu, l.batch, l.out_c, l.out_h*l.out_w);

+

+ fast_mean_delta_gpu(l.delta_gpu, l.variance_gpu, l.batch, l.out_c, l.out_w*l.out_h, l.mean_delta_gpu);

+ fast_variance_delta_gpu(l.x_gpu, l.delta_gpu, l.mean_gpu, l.variance_gpu, l.batch, l.out_c, l.out_w*l.out_h, l.variance_delta_gpu);

+ normalize_delta_gpu(l.x_gpu, l.mean_gpu, l.variance_gpu, l.mean_delta_gpu, l.variance_delta_gpu, l.batch, l.out_c, l.out_w*l.out_h, l.delta_gpu);

+#endif

+ if(l.type == BATCHNORM) copy_gpu(l.outputs*l.batch, l.delta_gpu, 1, net.delta_gpu, 1);

+}

+#endif

diff --git a/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/batchnorm_layer.h b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/batchnorm_layer.h

new file mode 100644

index 00000000000..25a18a3c8f2

--- /dev/null

+++ b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/batchnorm_layer.h

@@ -0,0 +1,19 @@

+#ifndef BATCHNORM_LAYER_H

+#define BATCHNORM_LAYER_H

+

+#include "image.h"

+#include "layer.h"

+#include "network.h"

+

+layer make_batchnorm_layer(int batch, int w, int h, int c);

+void forward_batchnorm_layer(layer l, network net);

+void backward_batchnorm_layer(layer l, network net);

+

+#ifdef GPU

+void forward_batchnorm_layer_gpu(layer l, network net);

+void backward_batchnorm_layer_gpu(layer l, network net);

+void pull_batchnorm_layer(layer l);

+void push_batchnorm_layer(layer l);

+#endif

+

+#endif

diff --git a/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/blas.c b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/blas.c

new file mode 100644

index 00000000000..9e1604449ba

--- /dev/null

+++ b/ros/src/computing/perception/detection/packages/yolo3_detector/darknet/src/blas.c

@@ -0,0 +1,351 @@

+#include "blas.h"

+

+#include <math.h>

+#include <assert.h>

+#include <float.h>

+#include <stdio.h>

+#include <stdlib.h>

+#include <string.h>

+void reorg_cpu(float *x, int w, int h, int c, int batch, int stride, int forward, float *out)

+{

+ int b,i,j,k;

+ int out_c = c/(stride*stride);

+

+ for(b = 0; b < batch; ++b){

+ for(k = 0; k < c; ++k){

+ for(j = 0; j < h; ++j){

+ for(i = 0; i < w; ++i){

+ int in_index = i + w*(j + h*(k + c*b));

+ int c2 = k % out_c;

+ int offset = k / out_c;

+ int w2 = i*stride + offset % stride;

+ int h2 = j*stride + offset / stride;

+ int out_index = w2 + w*stride*(h2 + h*stride*(c2 + out_c*b));

+ if(forward) out[out_index] = x[in_index];

+ else out[in_index] = x[out_index];

+ }

+ }

+ }

+ }

+}

+

+void flatten(float *x, int size, int layers, int batch, int forward)

+{

+ float *swap = calloc(size*layers*batch, sizeof(float));

+ int i,c,b;

+ for(b = 0; b < batch; ++b){

+ for(c = 0; c < layers; ++c){

+ for(i = 0; i < size; ++i){

+ int i1 = b*layers*size + c*size + i;

+ int i2 = b*layers*size + i*layers + c;

+ if (forward) swap[i2] = x[i1];

+ else swap[i1] = x[i2];

+ }

+ }

+ }

+ memcpy(x, swap, size*layers*batch*sizeof(float));

+ free(swap);

+}

+

+void weighted_sum_cpu(float *a, float *b, float *s, int n, float *c)

+{

+ int i;

+ for(i = 0; i < n; ++i){

+ c[i] = s[i]*a[i] + (1-s[i])*(b ? b[i] : 0);

+ }

+}

+

+void weighted_delta_cpu(float *a, float *b, float *s, float *da, float *db, float *ds, int n, float *dc)

+{

+ int i;

+ for(i = 0; i < n; ++i){

+ if(da) da[i] += dc[i] * s[i];

+ if(db) db[i] += dc[i] * (1-s[i]);

+ ds[i] += dc[i] * (a[i] - (b ? b[i] : 0));

+ }

+}

+

+void shortcut_cpu(int batch, int w1, int h1, int c1, float *add, int w2, int h2, int c2, float s1, float s2, float *out)

+{

+ int stride = w1/w2;

+ int sample = w2/w1;

+ assert(stride == h1/h2);

+ assert(sample == h2/h1);

+ if(stride < 1) stride = 1;

+ if(sample < 1) sample = 1;

+ int minw = (w1 < w2) ? w1 : w2;

+ int minh = (h1 < h2) ? h1 : h2;

+ int minc = (c1 < c2) ? c1 : c2;

+

+ int i,j,k,b;

+ for(b = 0; b < batch; ++b){

+ for(k = 0; k < minc; ++k){

+ for(j = 0; j < minh; ++j){

+ for(i = 0; i < minw; ++i){

+ int out_index = i*sample + w2*(j*sample + h2*(k + c2*b));

+ int add_index = i*stride + w1*(j*stride + h1*(k + c1*b));

+ out[out_index] = s1*out[out_index] + s2*add[add_index];

+ }

+ }

+ }

+ }

+}

+

+void mean_cpu(float *x, int batch, int filters, int spatial, float *mean)

+{

+ float scale = 1./(batch * spatial);

+ int i,j,k;

+ for(i = 0; i < filters; ++i){

+ mean[i] = 0;

+ for(j = 0; j < batch; ++j){

+ for(k = 0; k < spatial; ++k){

+ int index = j*filters*spatial + i*spatial + k;

+ mean[i] += x[index];