
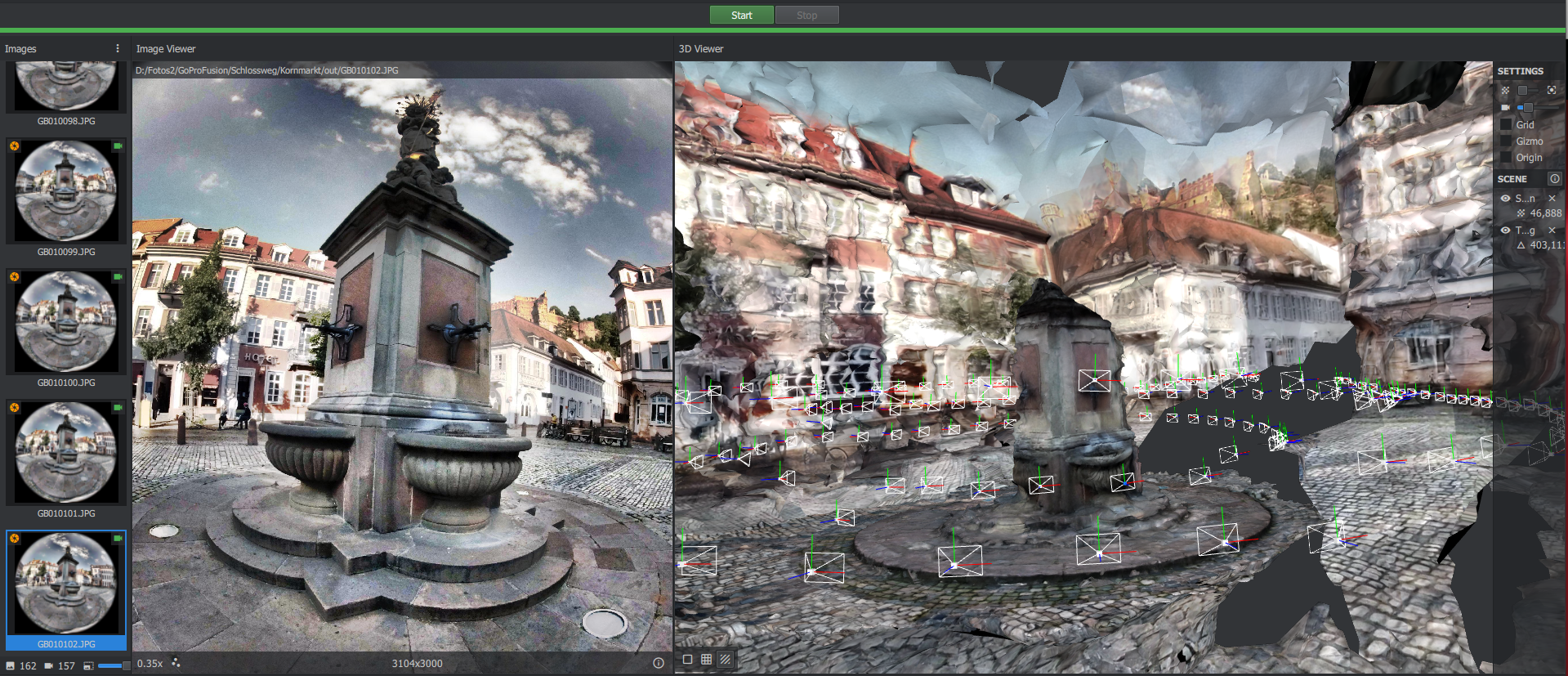

inaccurate meshing #896

Comments

|

Look at the tooltip on the orange flag on your images. The camera model is probably missing from the sensor database. |

|

Try:

If it is this model |

|

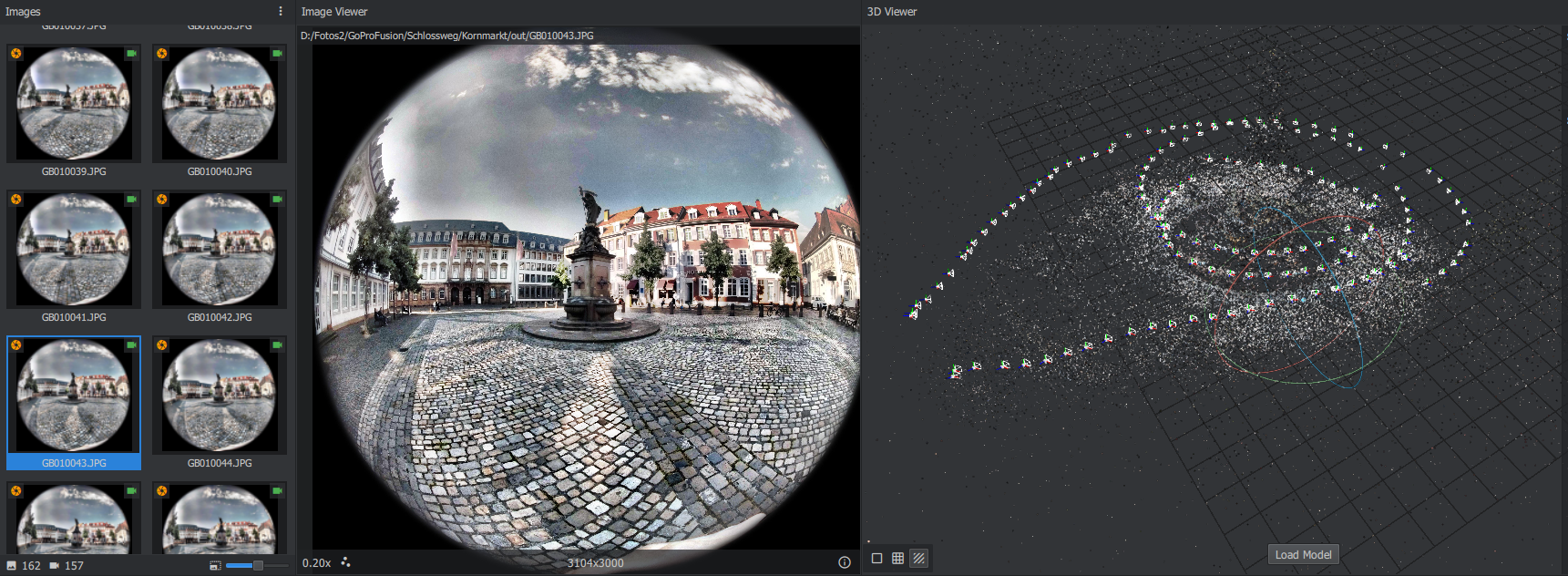

I tried this model (GoPro;FUSION;6.17;dpreview) with the following results: I also tried with only 9 images, as you did, and that works without problems. The problem seems to occur with more than one loop around the target object. There also seem to be 3 layers of ground-floor point clouds in the SfM output instead of one. I know it will take some time to compute, but it would be very helpful for me to know whether the reconstruction with all 162 images causes the same problems for you. |
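For anyone checking whether their camera is covered, here is a minimal Python sketch that parses an entry such as `GoPro;FUSION;6.17;dpreview` and looks up a make/model pair. It assumes the four semicolon-separated fields are make, model, sensor width in mm, and source; that reading of the fields is an assumption, and the names `SensorEntry`, `parse_sensor_line` and `find_sensor` are hypothetical helpers, not part of Meshroom.

```python
from typing import Iterable, NamedTuple, Optional


class SensorEntry(NamedTuple):
    # Field meanings are assumed from the entry quoted in this thread.
    make: str
    model: str
    sensor_width_mm: float
    source: str


def parse_sensor_line(line: str) -> Optional[SensorEntry]:
    """Parse one 'make;model;width;source' line; return None if malformed."""
    parts = [p.strip() for p in line.strip().split(";")]
    if len(parts) < 4 or not parts[0] or not parts[1]:
        return None
    try:
        width = float(parts[2])
    except ValueError:
        return None
    return SensorEntry(parts[0], parts[1], width, parts[3])


def find_sensor(lines: Iterable[str], make: str, model: str) -> Optional[SensorEntry]:
    """Return the first entry matching make and model (case-insensitive)."""
    for line in lines:
        entry = parse_sensor_line(line)
        if entry is None:
            continue
        if entry.make.lower() == make.lower() and entry.model.lower() == model.lower():
            return entry
    return None


if __name__ == "__main__":
    db_lines = ["GoPro;FUSION;6.17;dpreview"]
    print(find_sensor(db_lines, "gopro", "fusion"))
```

If the lookup returns `None` for the make and model stored in your image metadata, that would be consistent with the orange flag mentioned above.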

|

good news, it seems to work with more SIFT-Points AND "min observation for triangulation = 3" |

|

@TR7 Do you have the images from the other side of the GoPro Fusion 360? If yes, could you share them? It would be interesting to declare it as a rig of 2 cameras and see if it improves the results. |

|

On the other side of the GoPro, I am standing in front of the camera, so it is not really useful. But I will take some other pictures and can of course share them (both sides, with me only on the side). |

|

Here I proposed collecting user-contributed datasets similar to the Monstree demo dataset to test 360 and fisheye images. |

|

What's your preferred number of front/back photos per dataset? Any special kinds of objects/places? |

|

@TR7 It would be ideal if you could make an indoor and an outdoor dataset. |

|

Another outdoor dataset with 2x 128 JPGs from the GoPro Fusion (both sides, 0.5 s photo time lapse): |

|

And here is a small indoor dataset for test purposes: @fabiencastan |

|

@TR7 Thanks a lot! I see that you have already organized them as a rig of 2 cameras. Have you already tried to reconstruct them and compared the results with and without the rig? |

Yes, I tested a lot, with over 14 datasets from the GoPro Fusion.

Some other problems (more related to the rig) are still unsolved:

|

|

@TR7 Can I add a few of your images to https://github.com/natowi/meshroom-360-datasets under CC-BY-SA-4.0 license? |

|

yes! |

Describe the bug

inaccurate/broken meshing. I guess it's not supposed to be like this

To Reproduce

all other parameters are the defaults

Expected behavior

Meshing should be much better with the default values and so many pictures.

Screenshots

overview camera poses:

Logs

http://hosting141203.a2e6d.netcup.net/Thomas/HDKornmarkt/Logs.zip

Desktop (please complete the following and other pertinent information):
