diff --git a/.gitignore b/.gitignore

index a0f8ba7486..bc67f86f1d 100644

--- a/.gitignore

+++ b/.gitignore

@@ -81,6 +81,7 @@ typings/

__pycache__

build

*.egg-info

+.eggs/

setup.pye

**/__init__.pye

**/.ipynb_checkpoints

diff --git a/README.md b/README.md

index e03b339b80..42e6aa3552 100644

--- a/README.md

+++ b/README.md

@@ -16,7 +16,7 @@

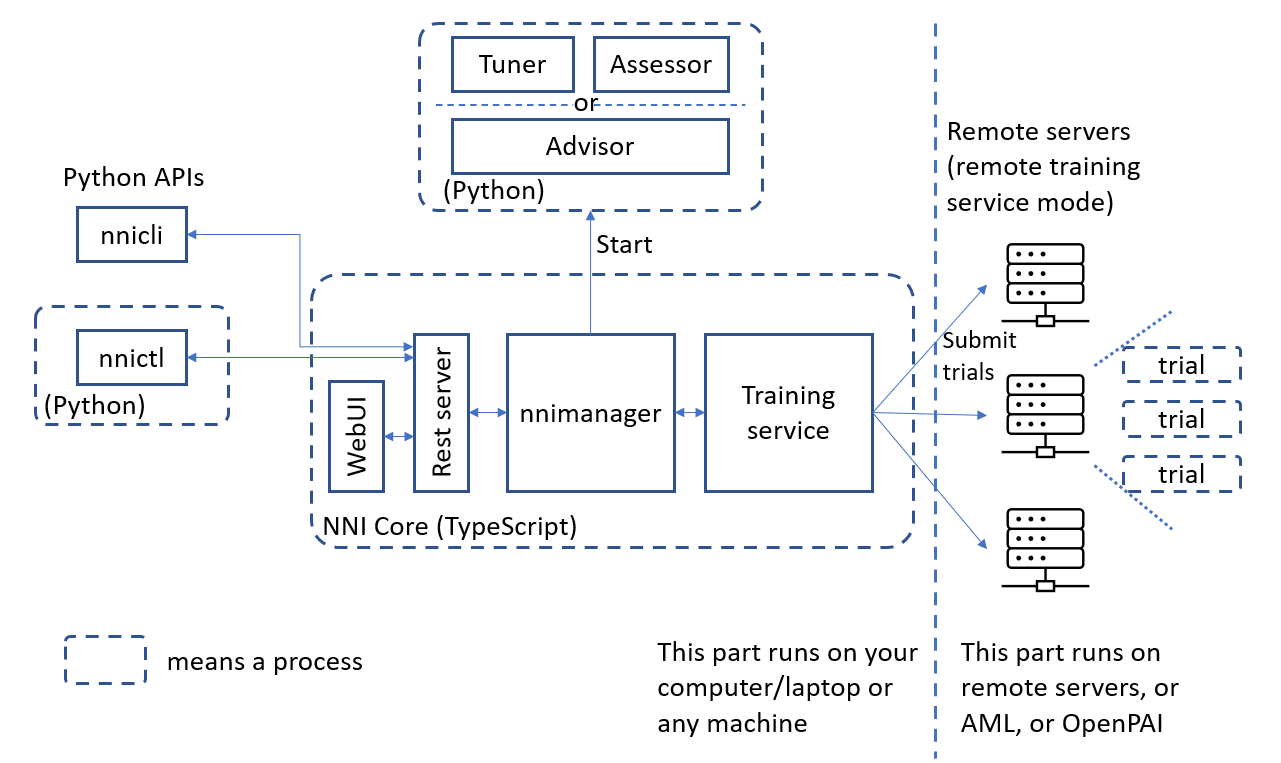

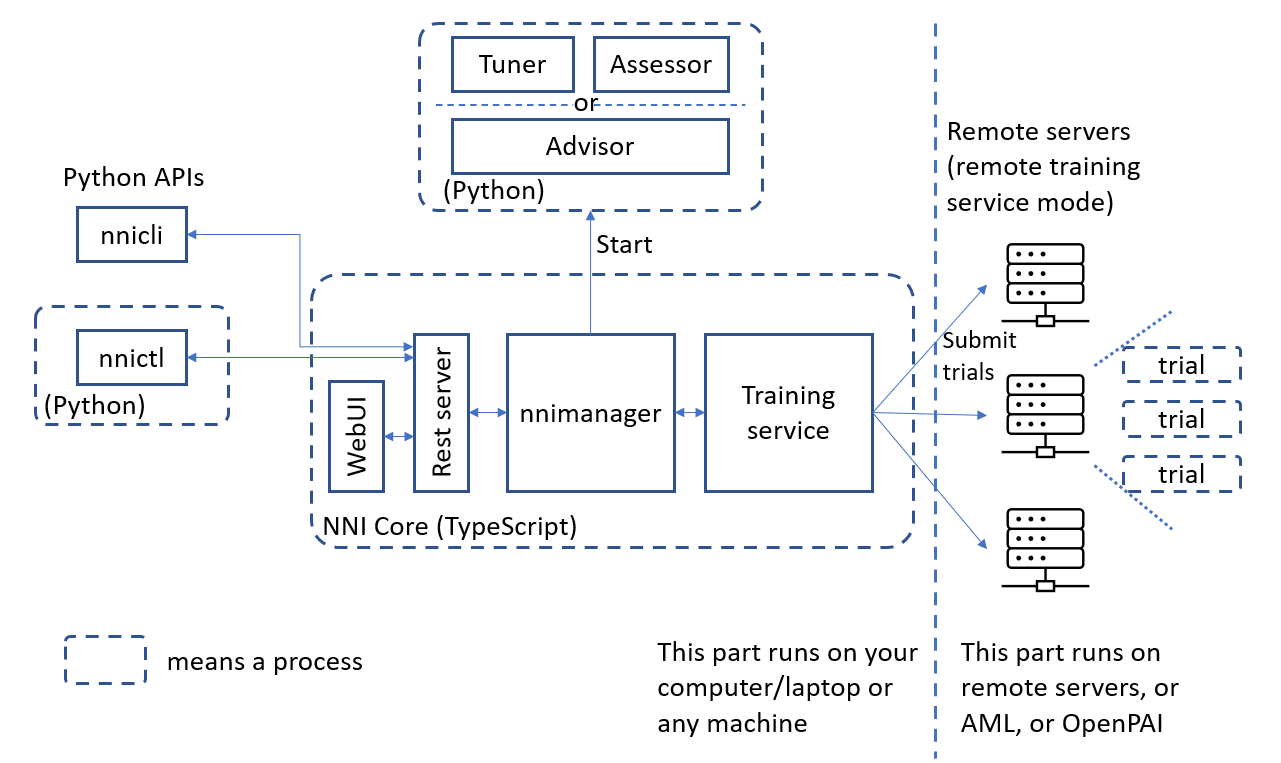

**NNI (Neural Network Intelligence)** is a lightweight but powerful toolkit to help users **automate** Feature Engineering, Neural Architecture Search, Hyperparameter Tuning and Model Compression.

-The tool manages automated machine learning (AutoML) experiments, **dispatches and runs** experiments' trial jobs generated by tuning algorithms to search the best neural architecture and/or hyper-parameters in **different training environments** like Local Machine, Remote Servers, OpenPAI, Kubeflow, FrameworkController on K8S (AKS etc.), DLWorkspace (aka. DLTS), AML (Azure Machine Learning) and other cloud options.

+The tool manages automated machine learning (AutoML) experiments, **dispatches and runs** experiments' trial jobs generated by tuning algorithms to search the best neural architecture and/or hyper-parameters in **different training environments** like Local Machine, Remote Servers, OpenPAI, Kubeflow, FrameworkController on K8S (AKS etc.), DLWorkspace (aka. DLTS), AML (Azure Machine Learning), AdaptDL (aka. ADL) and other cloud options.

## **Who should consider using NNI**

@@ -173,11 +173,13 @@ Within the following table, we summarized the current NNI capabilities, we are g

Remote Servers

AML(Azure Machine Learning)

Kubernetes based services

-

-

diff --git a/docs/en_US/Assessor/BuiltinAssessor.md b/docs/archive_en_US/Assessor/BuiltinAssessor.md

similarity index 100%

rename from docs/en_US/Assessor/BuiltinAssessor.md

rename to docs/archive_en_US/Assessor/BuiltinAssessor.md

diff --git a/docs/en_US/Assessor/CurvefittingAssessor.md b/docs/archive_en_US/Assessor/CurvefittingAssessor.md

similarity index 100%

rename from docs/en_US/Assessor/CurvefittingAssessor.md

rename to docs/archive_en_US/Assessor/CurvefittingAssessor.md

diff --git a/docs/en_US/Assessor/CustomizeAssessor.md b/docs/archive_en_US/Assessor/CustomizeAssessor.md

similarity index 100%

rename from docs/en_US/Assessor/CustomizeAssessor.md

rename to docs/archive_en_US/Assessor/CustomizeAssessor.md

diff --git a/docs/en_US/Assessor/MedianstopAssessor.md b/docs/archive_en_US/Assessor/MedianstopAssessor.md

similarity index 100%

rename from docs/en_US/Assessor/MedianstopAssessor.md

rename to docs/archive_en_US/Assessor/MedianstopAssessor.md

diff --git a/docs/en_US/CommunitySharings/AutoCompletion.md b/docs/archive_en_US/CommunitySharings/AutoCompletion.md

similarity index 100%

rename from docs/en_US/CommunitySharings/AutoCompletion.md

rename to docs/archive_en_US/CommunitySharings/AutoCompletion.md

diff --git a/docs/en_US/CommunitySharings/HpoComparison.md b/docs/archive_en_US/CommunitySharings/HpoComparison.md

similarity index 100%

rename from docs/en_US/CommunitySharings/HpoComparison.md

rename to docs/archive_en_US/CommunitySharings/HpoComparison.md

diff --git a/docs/en_US/CommunitySharings/ModelCompressionComparison.md b/docs/archive_en_US/CommunitySharings/ModelCompressionComparison.md

similarity index 100%

rename from docs/en_US/CommunitySharings/ModelCompressionComparison.md

rename to docs/archive_en_US/CommunitySharings/ModelCompressionComparison.md

diff --git a/docs/en_US/CommunitySharings/NNI_AutoFeatureEng.md b/docs/archive_en_US/CommunitySharings/NNI_AutoFeatureEng.md

similarity index 100%

rename from docs/en_US/CommunitySharings/NNI_AutoFeatureEng.md

rename to docs/archive_en_US/CommunitySharings/NNI_AutoFeatureEng.md

diff --git a/docs/en_US/CommunitySharings/NNI_colab_support.md b/docs/archive_en_US/CommunitySharings/NNI_colab_support.md

similarity index 100%

rename from docs/en_US/CommunitySharings/NNI_colab_support.md

rename to docs/archive_en_US/CommunitySharings/NNI_colab_support.md

diff --git a/docs/en_US/CommunitySharings/NasComparison.md b/docs/archive_en_US/CommunitySharings/NasComparison.md

similarity index 100%

rename from docs/en_US/CommunitySharings/NasComparison.md

rename to docs/archive_en_US/CommunitySharings/NasComparison.md

diff --git a/docs/en_US/CommunitySharings/ParallelizingTpeSearch.md b/docs/archive_en_US/CommunitySharings/ParallelizingTpeSearch.md

similarity index 100%

rename from docs/en_US/CommunitySharings/ParallelizingTpeSearch.md

rename to docs/archive_en_US/CommunitySharings/ParallelizingTpeSearch.md

diff --git a/docs/en_US/CommunitySharings/RecommendersSvd.md b/docs/archive_en_US/CommunitySharings/RecommendersSvd.md

similarity index 100%

rename from docs/en_US/CommunitySharings/RecommendersSvd.md

rename to docs/archive_en_US/CommunitySharings/RecommendersSvd.md

diff --git a/docs/en_US/CommunitySharings/SptagAutoTune.md b/docs/archive_en_US/CommunitySharings/SptagAutoTune.md

similarity index 100%

rename from docs/en_US/CommunitySharings/SptagAutoTune.md

rename to docs/archive_en_US/CommunitySharings/SptagAutoTune.md

diff --git a/docs/en_US/Compression/AutoPruningUsingTuners.md b/docs/archive_en_US/Compression/AutoPruningUsingTuners.md

similarity index 100%

rename from docs/en_US/Compression/AutoPruningUsingTuners.md

rename to docs/archive_en_US/Compression/AutoPruningUsingTuners.md

diff --git a/docs/en_US/Compression/CompressionReference.md b/docs/archive_en_US/Compression/CompressionReference.md

similarity index 100%

rename from docs/en_US/Compression/CompressionReference.md

rename to docs/archive_en_US/Compression/CompressionReference.md

diff --git a/docs/en_US/Compression/CompressionUtils.md b/docs/archive_en_US/Compression/CompressionUtils.md

similarity index 100%

rename from docs/en_US/Compression/CompressionUtils.md

rename to docs/archive_en_US/Compression/CompressionUtils.md

diff --git a/docs/en_US/Compression/CustomizeCompressor.md b/docs/archive_en_US/Compression/CustomizeCompressor.md

similarity index 100%

rename from docs/en_US/Compression/CustomizeCompressor.md

rename to docs/archive_en_US/Compression/CustomizeCompressor.md

diff --git a/docs/en_US/Compression/DependencyAware.md b/docs/archive_en_US/Compression/DependencyAware.md

similarity index 100%

rename from docs/en_US/Compression/DependencyAware.md

rename to docs/archive_en_US/Compression/DependencyAware.md

diff --git a/docs/en_US/Compression/Framework.md b/docs/archive_en_US/Compression/Framework.md

similarity index 100%

rename from docs/en_US/Compression/Framework.md

rename to docs/archive_en_US/Compression/Framework.md

diff --git a/docs/en_US/Compression/ModelSpeedup.md b/docs/archive_en_US/Compression/ModelSpeedup.md

similarity index 100%

rename from docs/en_US/Compression/ModelSpeedup.md

rename to docs/archive_en_US/Compression/ModelSpeedup.md

diff --git a/docs/en_US/Compression/Overview.md b/docs/archive_en_US/Compression/Overview.md

similarity index 100%

rename from docs/en_US/Compression/Overview.md

rename to docs/archive_en_US/Compression/Overview.md

diff --git a/docs/en_US/Compression/Pruner.md b/docs/archive_en_US/Compression/Pruner.md

similarity index 100%

rename from docs/en_US/Compression/Pruner.md

rename to docs/archive_en_US/Compression/Pruner.md

diff --git a/docs/en_US/Compression/Quantizer.md b/docs/archive_en_US/Compression/Quantizer.md

similarity index 100%

rename from docs/en_US/Compression/Quantizer.md

rename to docs/archive_en_US/Compression/Quantizer.md

diff --git a/docs/en_US/Compression/QuickStart.md b/docs/archive_en_US/Compression/QuickStart.md

similarity index 100%

rename from docs/en_US/Compression/QuickStart.md

rename to docs/archive_en_US/Compression/QuickStart.md

diff --git a/docs/en_US/FeatureEngineering/GBDTSelector.md b/docs/archive_en_US/FeatureEngineering/GBDTSelector.md

similarity index 100%

rename from docs/en_US/FeatureEngineering/GBDTSelector.md

rename to docs/archive_en_US/FeatureEngineering/GBDTSelector.md

diff --git a/docs/en_US/FeatureEngineering/GradientFeatureSelector.md b/docs/archive_en_US/FeatureEngineering/GradientFeatureSelector.md

similarity index 100%

rename from docs/en_US/FeatureEngineering/GradientFeatureSelector.md

rename to docs/archive_en_US/FeatureEngineering/GradientFeatureSelector.md

diff --git a/docs/en_US/FeatureEngineering/Overview.md b/docs/archive_en_US/FeatureEngineering/Overview.md

similarity index 100%

rename from docs/en_US/FeatureEngineering/Overview.md

rename to docs/archive_en_US/FeatureEngineering/Overview.md

diff --git a/docs/en_US/NAS/Advanced.md b/docs/archive_en_US/NAS/Advanced.md

similarity index 100%

rename from docs/en_US/NAS/Advanced.md

rename to docs/archive_en_US/NAS/Advanced.md

diff --git a/docs/en_US/NAS/Benchmarks.md b/docs/archive_en_US/NAS/Benchmarks.md

similarity index 100%

rename from docs/en_US/NAS/Benchmarks.md

rename to docs/archive_en_US/NAS/Benchmarks.md

diff --git a/docs/en_US/NAS/CDARTS.md b/docs/archive_en_US/NAS/CDARTS.md

similarity index 100%

rename from docs/en_US/NAS/CDARTS.md

rename to docs/archive_en_US/NAS/CDARTS.md

diff --git a/docs/en_US/NAS/ClassicNas.md b/docs/archive_en_US/NAS/ClassicNas.md

similarity index 100%

rename from docs/en_US/NAS/ClassicNas.md

rename to docs/archive_en_US/NAS/ClassicNas.md

diff --git a/docs/en_US/NAS/Cream.md b/docs/archive_en_US/NAS/Cream.md

similarity index 100%

rename from docs/en_US/NAS/Cream.md

rename to docs/archive_en_US/NAS/Cream.md

diff --git a/docs/en_US/NAS/DARTS.md b/docs/archive_en_US/NAS/DARTS.md

similarity index 100%

rename from docs/en_US/NAS/DARTS.md

rename to docs/archive_en_US/NAS/DARTS.md

diff --git a/docs/en_US/NAS/ENAS.md b/docs/archive_en_US/NAS/ENAS.md

similarity index 100%

rename from docs/en_US/NAS/ENAS.md

rename to docs/archive_en_US/NAS/ENAS.md

diff --git a/docs/en_US/NAS/NasGuide.md b/docs/archive_en_US/NAS/NasGuide.md

similarity index 100%

rename from docs/en_US/NAS/NasGuide.md

rename to docs/archive_en_US/NAS/NasGuide.md

diff --git a/docs/en_US/NAS/NasReference.md b/docs/archive_en_US/NAS/NasReference.md

similarity index 100%

rename from docs/en_US/NAS/NasReference.md

rename to docs/archive_en_US/NAS/NasReference.md

diff --git a/docs/en_US/NAS/Overview.md b/docs/archive_en_US/NAS/Overview.md

similarity index 100%

rename from docs/en_US/NAS/Overview.md

rename to docs/archive_en_US/NAS/Overview.md

diff --git a/docs/en_US/NAS/PDARTS.md b/docs/archive_en_US/NAS/PDARTS.md

similarity index 100%

rename from docs/en_US/NAS/PDARTS.md

rename to docs/archive_en_US/NAS/PDARTS.md

diff --git a/docs/en_US/NAS/Proxylessnas.md b/docs/archive_en_US/NAS/Proxylessnas.md

similarity index 100%

rename from docs/en_US/NAS/Proxylessnas.md

rename to docs/archive_en_US/NAS/Proxylessnas.md

diff --git a/docs/en_US/NAS/SPOS.md b/docs/archive_en_US/NAS/SPOS.md

similarity index 100%

rename from docs/en_US/NAS/SPOS.md

rename to docs/archive_en_US/NAS/SPOS.md

diff --git a/docs/en_US/NAS/SearchSpaceZoo.md b/docs/archive_en_US/NAS/SearchSpaceZoo.md

similarity index 100%

rename from docs/en_US/NAS/SearchSpaceZoo.md

rename to docs/archive_en_US/NAS/SearchSpaceZoo.md

diff --git a/docs/en_US/NAS/TextNAS.md b/docs/archive_en_US/NAS/TextNAS.md

similarity index 100%

rename from docs/en_US/NAS/TextNAS.md

rename to docs/archive_en_US/NAS/TextNAS.md

diff --git a/docs/en_US/NAS/Visualization.md b/docs/archive_en_US/NAS/Visualization.md

similarity index 100%

rename from docs/en_US/NAS/Visualization.md

rename to docs/archive_en_US/NAS/Visualization.md

diff --git a/docs/en_US/NAS/WriteSearchSpace.md b/docs/archive_en_US/NAS/WriteSearchSpace.md

similarity index 100%

rename from docs/en_US/NAS/WriteSearchSpace.md

rename to docs/archive_en_US/NAS/WriteSearchSpace.md

diff --git a/docs/en_US/Overview.md b/docs/archive_en_US/Overview.md

similarity index 100%

rename from docs/en_US/Overview.md

rename to docs/archive_en_US/Overview.md

diff --git a/docs/en_US/Release.md b/docs/archive_en_US/Release.md

similarity index 100%

rename from docs/en_US/Release.md

rename to docs/archive_en_US/Release.md

diff --git a/docs/en_US/ResearchPublications.md b/docs/archive_en_US/ResearchPublications.md

similarity index 100%

rename from docs/en_US/ResearchPublications.md

rename to docs/archive_en_US/ResearchPublications.md

diff --git a/docs/en_US/SupportedFramework_Library.md b/docs/archive_en_US/SupportedFramework_Library.md

similarity index 100%

rename from docs/en_US/SupportedFramework_Library.md

rename to docs/archive_en_US/SupportedFramework_Library.md

diff --git a/docs/en_US/TrainingService/AMLMode.md b/docs/archive_en_US/TrainingService/AMLMode.md

similarity index 100%

rename from docs/en_US/TrainingService/AMLMode.md

rename to docs/archive_en_US/TrainingService/AMLMode.md

diff --git a/docs/en_US/TrainingService/AdaptDLMode.md b/docs/archive_en_US/TrainingService/AdaptDLMode.md

similarity index 100%

rename from docs/en_US/TrainingService/AdaptDLMode.md

rename to docs/archive_en_US/TrainingService/AdaptDLMode.md

diff --git a/docs/en_US/TrainingService/DLTSMode.md b/docs/archive_en_US/TrainingService/DLTSMode.md

similarity index 100%

rename from docs/en_US/TrainingService/DLTSMode.md

rename to docs/archive_en_US/TrainingService/DLTSMode.md

diff --git a/docs/en_US/TrainingService/FrameworkControllerMode.md b/docs/archive_en_US/TrainingService/FrameworkControllerMode.md

similarity index 100%

rename from docs/en_US/TrainingService/FrameworkControllerMode.md

rename to docs/archive_en_US/TrainingService/FrameworkControllerMode.md

diff --git a/docs/en_US/TrainingService/HowToImplementTrainingService.md b/docs/archive_en_US/TrainingService/HowToImplementTrainingService.md

similarity index 100%

rename from docs/en_US/TrainingService/HowToImplementTrainingService.md

rename to docs/archive_en_US/TrainingService/HowToImplementTrainingService.md

diff --git a/docs/en_US/TrainingService/KubeflowMode.md b/docs/archive_en_US/TrainingService/KubeflowMode.md

similarity index 100%

rename from docs/en_US/TrainingService/KubeflowMode.md

rename to docs/archive_en_US/TrainingService/KubeflowMode.md

diff --git a/docs/en_US/TrainingService/LocalMode.md b/docs/archive_en_US/TrainingService/LocalMode.md

similarity index 100%

rename from docs/en_US/TrainingService/LocalMode.md

rename to docs/archive_en_US/TrainingService/LocalMode.md

diff --git a/docs/en_US/TrainingService/Overview.md b/docs/archive_en_US/TrainingService/Overview.md

similarity index 100%

rename from docs/en_US/TrainingService/Overview.md

rename to docs/archive_en_US/TrainingService/Overview.md

diff --git a/docs/en_US/TrainingService/PaiMode.md b/docs/archive_en_US/TrainingService/PaiMode.md

similarity index 100%

rename from docs/en_US/TrainingService/PaiMode.md

rename to docs/archive_en_US/TrainingService/PaiMode.md

diff --git a/docs/en_US/TrainingService/PaiYarnMode.md b/docs/archive_en_US/TrainingService/PaiYarnMode.md

similarity index 100%

rename from docs/en_US/TrainingService/PaiYarnMode.md

rename to docs/archive_en_US/TrainingService/PaiYarnMode.md

diff --git a/docs/en_US/TrainingService/RemoteMachineMode.md b/docs/archive_en_US/TrainingService/RemoteMachineMode.md

similarity index 100%

rename from docs/en_US/TrainingService/RemoteMachineMode.md

rename to docs/archive_en_US/TrainingService/RemoteMachineMode.md

diff --git a/docs/en_US/TrialExample/Cifar10Examples.md b/docs/archive_en_US/TrialExample/Cifar10Examples.md

similarity index 100%

rename from docs/en_US/TrialExample/Cifar10Examples.md

rename to docs/archive_en_US/TrialExample/Cifar10Examples.md

diff --git a/docs/en_US/TrialExample/EfficientNet.md b/docs/archive_en_US/TrialExample/EfficientNet.md

similarity index 67%

rename from docs/en_US/TrialExample/EfficientNet.md

rename to docs/archive_en_US/TrialExample/EfficientNet.md

index e22da7e42e..f71a0f7f08 100644

--- a/docs/en_US/TrialExample/EfficientNet.md

+++ b/docs/archive_en_US/TrialExample/EfficientNet.md

@@ -9,7 +9,7 @@ Use Grid search to find the best combination of alpha, beta and gamma for Effici

[Example code](https://github.com/microsoft/nni/tree/v1.9/examples/trials/efficientnet)

1. Set your working directory here in the example code directory.

-2. Run `git clone https://github.com/ultmaster/EfficientNet-PyTorch` to clone this modified version of [EfficientNet-PyTorch](https://github.com/lukemelas/EfficientNet-PyTorch). The modifications were done to adhere to the original [Tensorflow version](https://github.com/tensorflow/tpu/tree/master/models/official/efficientnet) as close as possible (including EMA, label smoothing and etc.); also added are the part which gets parameters from tuner and reports intermediate/final results. Clone it into `EfficientNet-PyTorch`; the files like `main.py`, `train_imagenet.sh` will appear inside, as specified in the configuration files.

+2. Run `git clone https://github.com/ultmaster/EfficientNet-PyTorch` to clone the [ultmaster modified version](https://github.com/ultmaster/EfficientNet-PyTorch) of the original [EfficientNet-PyTorch](https://github.com/lukemelas/EfficientNet-PyTorch). The modifications follow the original [Tensorflow version](https://github.com/tensorflow/tpu/tree/master/models/official/efficientnet) as closely as possible (including EMA, label smoothing, etc.); they also add the code that gets parameters from the tuner and reports intermediate/final results. Clone it into `EfficientNet-PyTorch`; files such as `main.py` and `train_imagenet.sh` will appear inside, as specified in the configuration files.

3. Run `nnictl create --config config_local.yml` (use `config_pai.yml` for OpenPAI) to find the best EfficientNet-B1. Adjust the training service (PAI/local/remote), batch size in the config files according to the environment.

For training on ImageNet, read `EfficientNet-PyTorch/train_imagenet.sh`. Download ImageNet beforehand and extract it adhering to [PyTorch format](https://pytorch.org/docs/stable/torchvision/datasets.html#imagenet) and then replace `/mnt/data/imagenet` in with the location of the ImageNet storage. This file should also be a good example to follow for mounting ImageNet into the container on OpenPAI.

diff --git a/docs/en_US/TrialExample/GbdtExample.md b/docs/archive_en_US/TrialExample/GbdtExample.md

similarity index 100%

rename from docs/en_US/TrialExample/GbdtExample.md

rename to docs/archive_en_US/TrialExample/GbdtExample.md

diff --git a/docs/en_US/TrialExample/KDExample.md b/docs/archive_en_US/TrialExample/KDExample.md

similarity index 100%

rename from docs/en_US/TrialExample/KDExample.md

rename to docs/archive_en_US/TrialExample/KDExample.md

diff --git a/docs/en_US/TrialExample/MnistExamples.md b/docs/archive_en_US/TrialExample/MnistExamples.md

similarity index 100%

rename from docs/en_US/TrialExample/MnistExamples.md

rename to docs/archive_en_US/TrialExample/MnistExamples.md

diff --git a/docs/en_US/TrialExample/OpEvoExamples.md b/docs/archive_en_US/TrialExample/OpEvoExamples.md

similarity index 100%

rename from docs/en_US/TrialExample/OpEvoExamples.md

rename to docs/archive_en_US/TrialExample/OpEvoExamples.md

diff --git a/docs/en_US/TrialExample/RocksdbExamples.md b/docs/archive_en_US/TrialExample/RocksdbExamples.md

similarity index 100%

rename from docs/en_US/TrialExample/RocksdbExamples.md

rename to docs/archive_en_US/TrialExample/RocksdbExamples.md

diff --git a/docs/en_US/TrialExample/SklearnExamples.md b/docs/archive_en_US/TrialExample/SklearnExamples.md

similarity index 100%

rename from docs/en_US/TrialExample/SklearnExamples.md

rename to docs/archive_en_US/TrialExample/SklearnExamples.md

diff --git a/docs/en_US/TrialExample/SquadEvolutionExamples.md b/docs/archive_en_US/TrialExample/SquadEvolutionExamples.md

similarity index 100%

rename from docs/en_US/TrialExample/SquadEvolutionExamples.md

rename to docs/archive_en_US/TrialExample/SquadEvolutionExamples.md

diff --git a/docs/en_US/TrialExample/Trials.md b/docs/archive_en_US/TrialExample/Trials.md

similarity index 100%

rename from docs/en_US/TrialExample/Trials.md

rename to docs/archive_en_US/TrialExample/Trials.md

diff --git a/docs/en_US/Tuner/BatchTuner.md b/docs/archive_en_US/Tuner/BatchTuner.md

similarity index 100%

rename from docs/en_US/Tuner/BatchTuner.md

rename to docs/archive_en_US/Tuner/BatchTuner.md

diff --git a/docs/en_US/Tuner/BohbAdvisor.md b/docs/archive_en_US/Tuner/BohbAdvisor.md

similarity index 100%

rename from docs/en_US/Tuner/BohbAdvisor.md

rename to docs/archive_en_US/Tuner/BohbAdvisor.md

diff --git a/docs/en_US/Tuner/BuiltinTuner.md b/docs/archive_en_US/Tuner/BuiltinTuner.md

similarity index 100%

rename from docs/en_US/Tuner/BuiltinTuner.md

rename to docs/archive_en_US/Tuner/BuiltinTuner.md

diff --git a/docs/en_US/Tuner/CustomizeAdvisor.md b/docs/archive_en_US/Tuner/CustomizeAdvisor.md

similarity index 100%

rename from docs/en_US/Tuner/CustomizeAdvisor.md

rename to docs/archive_en_US/Tuner/CustomizeAdvisor.md

diff --git a/docs/en_US/Tuner/CustomizeTuner.md b/docs/archive_en_US/Tuner/CustomizeTuner.md

similarity index 100%

rename from docs/en_US/Tuner/CustomizeTuner.md

rename to docs/archive_en_US/Tuner/CustomizeTuner.md

diff --git a/docs/en_US/Tuner/EvolutionTuner.md b/docs/archive_en_US/Tuner/EvolutionTuner.md

similarity index 100%

rename from docs/en_US/Tuner/EvolutionTuner.md

rename to docs/archive_en_US/Tuner/EvolutionTuner.md

diff --git a/docs/en_US/Tuner/GPTuner.md b/docs/archive_en_US/Tuner/GPTuner.md

similarity index 100%

rename from docs/en_US/Tuner/GPTuner.md

rename to docs/archive_en_US/Tuner/GPTuner.md

diff --git a/docs/en_US/Tuner/GridsearchTuner.md b/docs/archive_en_US/Tuner/GridsearchTuner.md

similarity index 100%

rename from docs/en_US/Tuner/GridsearchTuner.md

rename to docs/archive_en_US/Tuner/GridsearchTuner.md

diff --git a/docs/en_US/Tuner/HyperbandAdvisor.md b/docs/archive_en_US/Tuner/HyperbandAdvisor.md

similarity index 100%

rename from docs/en_US/Tuner/HyperbandAdvisor.md

rename to docs/archive_en_US/Tuner/HyperbandAdvisor.md

diff --git a/docs/en_US/Tuner/HyperoptTuner.md b/docs/archive_en_US/Tuner/HyperoptTuner.md

similarity index 100%

rename from docs/en_US/Tuner/HyperoptTuner.md

rename to docs/archive_en_US/Tuner/HyperoptTuner.md

diff --git a/docs/en_US/Tuner/InstallCustomizedTuner.md b/docs/archive_en_US/Tuner/InstallCustomizedTuner.md

similarity index 100%

rename from docs/en_US/Tuner/InstallCustomizedTuner.md

rename to docs/archive_en_US/Tuner/InstallCustomizedTuner.md

diff --git a/docs/en_US/Tuner/MetisTuner.md b/docs/archive_en_US/Tuner/MetisTuner.md

similarity index 100%

rename from docs/en_US/Tuner/MetisTuner.md

rename to docs/archive_en_US/Tuner/MetisTuner.md

diff --git a/docs/en_US/Tuner/NetworkmorphismTuner.md b/docs/archive_en_US/Tuner/NetworkmorphismTuner.md

similarity index 100%

rename from docs/en_US/Tuner/NetworkmorphismTuner.md

rename to docs/archive_en_US/Tuner/NetworkmorphismTuner.md

diff --git a/docs/en_US/Tuner/PBTTuner.md b/docs/archive_en_US/Tuner/PBTTuner.md

similarity index 100%

rename from docs/en_US/Tuner/PBTTuner.md

rename to docs/archive_en_US/Tuner/PBTTuner.md

diff --git a/docs/en_US/Tuner/PPOTuner.md b/docs/archive_en_US/Tuner/PPOTuner.md

similarity index 100%

rename from docs/en_US/Tuner/PPOTuner.md

rename to docs/archive_en_US/Tuner/PPOTuner.md

diff --git a/docs/en_US/Tuner/SmacTuner.md b/docs/archive_en_US/Tuner/SmacTuner.md

similarity index 100%

rename from docs/en_US/Tuner/SmacTuner.md

rename to docs/archive_en_US/Tuner/SmacTuner.md

diff --git a/docs/en_US/Tutorial/AnnotationSpec.md b/docs/archive_en_US/Tutorial/AnnotationSpec.md

similarity index 100%

rename from docs/en_US/Tutorial/AnnotationSpec.md

rename to docs/archive_en_US/Tutorial/AnnotationSpec.md

diff --git a/docs/en_US/Tutorial/Contributing.md b/docs/archive_en_US/Tutorial/Contributing.md

similarity index 100%

rename from docs/en_US/Tutorial/Contributing.md

rename to docs/archive_en_US/Tutorial/Contributing.md

diff --git a/docs/en_US/Tutorial/ExperimentConfig.md b/docs/archive_en_US/Tutorial/ExperimentConfig.md

similarity index 100%

rename from docs/en_US/Tutorial/ExperimentConfig.md

rename to docs/archive_en_US/Tutorial/ExperimentConfig.md

diff --git a/docs/en_US/Tutorial/FAQ.md b/docs/archive_en_US/Tutorial/FAQ.md

similarity index 100%

rename from docs/en_US/Tutorial/FAQ.md

rename to docs/archive_en_US/Tutorial/FAQ.md

diff --git a/docs/en_US/Tutorial/HowToDebug.md b/docs/archive_en_US/Tutorial/HowToDebug.md

similarity index 100%

rename from docs/en_US/Tutorial/HowToDebug.md

rename to docs/archive_en_US/Tutorial/HowToDebug.md

diff --git a/docs/en_US/Tutorial/HowToUseDocker.md b/docs/archive_en_US/Tutorial/HowToUseDocker.md

similarity index 100%

rename from docs/en_US/Tutorial/HowToUseDocker.md

rename to docs/archive_en_US/Tutorial/HowToUseDocker.md

diff --git a/docs/en_US/Tutorial/InstallCustomizedAlgos.md b/docs/archive_en_US/Tutorial/InstallCustomizedAlgos.md

similarity index 100%

rename from docs/en_US/Tutorial/InstallCustomizedAlgos.md

rename to docs/archive_en_US/Tutorial/InstallCustomizedAlgos.md

diff --git a/docs/en_US/Tutorial/InstallationLinux.md b/docs/archive_en_US/Tutorial/InstallationLinux.md

similarity index 100%

rename from docs/en_US/Tutorial/InstallationLinux.md

rename to docs/archive_en_US/Tutorial/InstallationLinux.md

diff --git a/docs/en_US/Tutorial/InstallationWin.md b/docs/archive_en_US/Tutorial/InstallationWin.md

similarity index 100%

rename from docs/en_US/Tutorial/InstallationWin.md

rename to docs/archive_en_US/Tutorial/InstallationWin.md

diff --git a/docs/en_US/Tutorial/Nnictl.md b/docs/archive_en_US/Tutorial/Nnictl.md

similarity index 100%

rename from docs/en_US/Tutorial/Nnictl.md

rename to docs/archive_en_US/Tutorial/Nnictl.md

diff --git a/docs/en_US/Tutorial/QuickStart.md b/docs/archive_en_US/Tutorial/QuickStart.md

similarity index 100%

rename from docs/en_US/Tutorial/QuickStart.md

rename to docs/archive_en_US/Tutorial/QuickStart.md

diff --git a/docs/en_US/Tutorial/SearchSpaceSpec.md b/docs/archive_en_US/Tutorial/SearchSpaceSpec.md

similarity index 100%

rename from docs/en_US/Tutorial/SearchSpaceSpec.md

rename to docs/archive_en_US/Tutorial/SearchSpaceSpec.md

diff --git a/docs/en_US/Tutorial/SetupNniDeveloperEnvironment.md b/docs/archive_en_US/Tutorial/SetupNniDeveloperEnvironment.md

similarity index 100%

rename from docs/en_US/Tutorial/SetupNniDeveloperEnvironment.md

rename to docs/archive_en_US/Tutorial/SetupNniDeveloperEnvironment.md

diff --git a/docs/en_US/Tutorial/WebUI.md b/docs/archive_en_US/Tutorial/WebUI.md

similarity index 100%

rename from docs/en_US/Tutorial/WebUI.md

rename to docs/archive_en_US/Tutorial/WebUI.md

diff --git a/docs/en_US/autotune_ref.md b/docs/archive_en_US/autotune_ref.md

similarity index 100%

rename from docs/en_US/autotune_ref.md

rename to docs/archive_en_US/autotune_ref.md

diff --git a/docs/en_US/nnicli_ref.md b/docs/archive_en_US/nnicli_ref.md

similarity index 100%

rename from docs/en_US/nnicli_ref.md

rename to docs/archive_en_US/nnicli_ref.md

diff --git a/docs/en_US/Assessor/BuiltinAssessor.rst b/docs/en_US/Assessor/BuiltinAssessor.rst

new file mode 100644

index 0000000000..6b85253a73

--- /dev/null

+++ b/docs/en_US/Assessor/BuiltinAssessor.rst

@@ -0,0 +1,101 @@

+.. role:: raw-html(raw)

+ :format: html

+

+

+Built-in Assessors

+==================

+

+NNI provides state-of-the-art early stopping algorithms as built-in assessors and makes them easy to use. Below is a brief overview of NNI's current built-in assessors.

+

+Note: Click the **Assessor's name** to get each Assessor's installation requirements, suggested usage scenario, and a config example. A link to a detailed description of each algorithm is provided at the end of the suggested scenario for each Assessor.

+

+Currently, we support the following Assessors:

+

+.. list-table::

+ :header-rows: 1

+ :widths: auto

+

+ * - Assessor

+ - Brief Introduction of Algorithm

+ * - `Medianstop <#MedianStop>`__

+ - Medianstop is a simple early stopping rule. It stops a pending trial X at step S if the trial’s best objective value by step S is strictly worse than the median value of the running averages of all completed trials’ objectives reported up to step S. `Reference Paper `__

+ * - `Curvefitting <#Curvefitting>`__

+ - Curve Fitting Assessor is an LPA (learning, predicting, assessing) algorithm. It stops a pending trial X at step S if the prediction of the final epoch's performance is worse than the best final performance in the trial history. In this algorithm, we use 12 curves to fit the accuracy curve. `Reference Paper `__

+

+

+Usage of Builtin Assessors

+--------------------------

+

+To use a built-in assessor provided by the NNI SDK, declare **builtinAssessorName** and **classArgs** in the ``config.yml`` file. This section describes, for each assessor, the suggested scenarios, the **classArgs** requirements, and a usage example.

+

+Note: Please follow the provided format when writing your ``config.yml`` file.

+


+

+Median Stop Assessor

+^^^^^^^^^^^^^^^^^^^^

+

+..

+

+ Builtin Assessor Name: **Medianstop**

+

+

+**Suggested scenario**

+

+It is applicable to a wide range of performance curves, so it can be used in various scenarios to speed up the tuning process. `Detailed Description <./MedianstopAssessor.rst>`__

+

+**classArgs requirements:**

+

+

+* **optimize_mode** (*maximize or minimize, optional, default = maximize*\ ) - If 'maximize', the assessor will **stop** trials with smaller expected values. If 'minimize', the assessor will **stop** trials with larger expected values.

+* **start_step** (*int, optional, default = 0*\ ) - The assessor decides whether to stop a trial only after it has received ``start_step`` reported intermediate results.

+

+**Usage example:**

+

+.. code-block:: yaml

+

+ # config.yml

+ assessor:

+ builtinAssessorName: Medianstop

+ classArgs:

+ optimize_mode: maximize

+ start_step: 5

+
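The following is a minimal Python sketch of the median stopping rule summarized in the table above, written for illustration only and not taken from NNI's implementation. The function name ``should_stop`` and its arguments are hypothetical; ``start_step`` and ``optimize_mode`` mirror the **classArgs** described above.

```python
from statistics import median


def should_stop(trial_history, completed_histories, start_step=0, optimize_mode="maximize"):
    """Hypothetical sketch of the median stopping rule.

    trial_history: intermediate results reported so far by the running trial.
    completed_histories: intermediate results of each completed trial.
    """
    step = len(trial_history)
    # Do not judge the trial before it has reported start_step results.
    if step == 0 or step < start_step:
        return False
    maximize = optimize_mode == "maximize"
    # Best objective value of the running trial by step S.
    best = max(trial_history) if maximize else min(trial_history)
    # Running average of each completed trial's results up to step S.
    running_avgs = [sum(h[:step]) / len(h[:step]) for h in completed_histories if h]
    if not running_avgs:
        return False
    med = median(running_avgs)
    # Stop only if the trial is strictly worse than the median.
    return best < med if maximize else best > med
```

For example, with completed accuracy histories ``[[0.5, 0.6, 0.7], [0.4, 0.5, 0.6], [0.6, 0.7, 0.8]]``, a trial that has reported ``[0.3, 0.35, 0.4]`` is compared against the median running average 0.6 and would be stopped.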


+


+

+Curve Fitting Assessor

+^^^^^^^^^^^^^^^^^^^^^^

+

+..

+

+ Builtin Assessor Name: **Curvefitting**

+

+

+**Suggested scenario**

+

+It is applicable to a wide range of performance curves, so it can be used in various scenarios to speed up the tuning process. In addition, it can handle and assess curves with similar performance. `Detailed Description <./CurvefittingAssessor.rst>`__

+

+**Note**\ : according to the original paper, only increasing functions are supported. Therefore, this assessor can only be used with metrics that are maximized. For example, it can be used for accuracy, but not for loss.

+

+**classArgs requirements:**

+

+

+* **epoch_num** (*int, required*\ ) - The total number of epochs. The assessor needs the number of epochs to determine which points to predict.

+* **start_step** (*int, optional, default = 6*\ ) - The assessor decides whether to stop a trial only after it has received ``start_step`` reported intermediate results.

+* **threshold** (*float, optional, default = 0.95*\ ) - The threshold used to decide whether to stop a poorly performing trial early. For example, if threshold = 0.95 and the best performance in the history is 0.9, the assessor stops any trial whose predicted value is lower than 0.95 * 0.9 = 0.855.

+* **gap** (*int, optional, default = 1*\ ) - The interval between assessor judgements. For example, if gap = 2 and start_step = 6, the assessor judges a trial when it has received 6, 8, 10, 12, ... intermediate results.
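The worked examples for ``threshold`` and ``gap`` above reduce to simple arithmetic. The Python sketch below reproduces them; the helper names ``assessment_points`` and ``below_threshold`` are hypothetical and are not part of NNI's API.

```python
def assessment_points(start_step, gap, max_results):
    """Numbers of received intermediate results at which a judgement is made."""
    return list(range(start_step, max_results + 1, gap))


def below_threshold(predicted_final, best_final, threshold=0.95):
    """True if the predicted final value is lower than threshold * best final value."""
    return predicted_final < threshold * best_final


print(assessment_points(6, 2, 12))  # [6, 8, 10, 12]
print(below_threshold(0.85, 0.9))   # True: 0.85 is below 0.95 * 0.9 = 0.855
```

Keeping the schedule and the stopping test separate mirrors how the two **classArgs** act independently: ``gap`` only controls when a judgement happens, while ``threshold`` controls the outcome of each judgement.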

+

+**Usage example:**

+

+.. code-block:: yaml

+

+ # config.yml

+ assessor:

+ builtinAssessorName: Curvefitting

+ classArgs:

+ epoch_num: 20

+ start_step: 6

+ threshold: 0.95

+ gap: 1

diff --git a/docs/en_US/Assessor/CurvefittingAssessor.rst b/docs/en_US/Assessor/CurvefittingAssessor.rst

new file mode 100644

index 0000000000..41c6d2c147

--- /dev/null

+++ b/docs/en_US/Assessor/CurvefittingAssessor.rst

@@ -0,0 +1,101 @@

+Curve Fitting Assessor on NNI

+=============================

+

+Introduction

+------------

+

+The Curve Fitting Assessor is an LPA (learning, predicting, assessing) algorithm. It stops a pending trial X at step S if the prediction of the final epoch's performance is worse than the best final performance in the trial history.

+

+In this algorithm, we use 12 curves to fit the learning curve. The set of parametric curve models is chosen from this `reference paper `__. The shapes of these curves match our prior knowledge about the form of learning curves: they are typically increasing, saturating functions.

+

+

+.. image:: ../../img/curvefitting_learning_curve.PNG

+ :target: ../../img/curvefitting_learning_curve.PNG

+ :alt: learning_curve

+

+

+We combine all learning curve models into a single, more powerful model. This combined model is given by a weighted linear combination:

+

+

+.. image:: ../../img/curvefitting_f_comb.gif

+ :target: ../../img/curvefitting_f_comb.gif

+ :alt: f_comb

+

+

+with the new combined parameter vector

+

+

+.. image:: ../../img/curvefitting_expression_xi.gif

+ :target: ../../img/curvefitting_expression_xi.gif

+ :alt: expression_xi

+

+

+We assume additive Gaussian noise, with the noise parameter initialized to its maximum likelihood estimate.
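A minimal sketch of the weighted linear combination, assuming just two saturating curve forms (a power law and an exponential) instead of the full set of 12, hand-picked weights and parameters rather than fitted ones, and no noise term. The function names and parameterizations are illustrative and may differ from those in ``curvefunctions.py``.

```python
import math


def pow3(t, c, a, alpha):
    """Saturating power-law curve: rises toward the asymptote c."""
    return c - a * t ** (-alpha)


def exp3(t, c, a, b):
    """Saturating exponential curve: rises toward the asymptote c."""
    return c - math.exp(-a * t + b)


def f_comb(t, models):
    """Weighted linear combination of parametric curve models.

    `models` is a list of (weight, curve_fn, params) tuples; the weights
    play the role of the per-curve weights in the combined vector xi.
    """
    return sum(w * fn(t, *params) for w, fn, params in models)


# Illustrative weights and parameters (not fitted to any real trial).
models = [
    (0.6, pow3, (0.95, 0.5, 0.8)),
    (0.4, exp3, (0.93, 0.3, -0.5)),
]
for epoch in (1, 5, 20):
    print(epoch, round(f_comb(epoch, models), 4))
```

Predicting the final performance then amounts to evaluating ``f_comb`` at ``t = epoch_num`` once the weights and parameters have been learned.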

+

+We determine the most probable value of the combined parameter vector by learning from the historical data. We use this value to predict future trial performance and stop inadequate trials to save computing resources.

+

+Concretely, this algorithm goes through three stages of learning, predicting, and assessing.

+

+

+*

+ Step1: Learning. We learn from the history of the current trial and determine :math:`\xi` from a Bayesian perspective. First, we fit each curve using the least-squares method, implemented by ``fit_theta``. After obtaining the parameters, we filter the curves and remove outliers, implemented by ``filter_curve``. Finally, we use MCMC sampling, implemented by ``mcmc_sampling``\ , to adjust the weight of each curve. At this point, all the parameters in :math:`\xi` are determined.

+

+*

+ Step2: Predicting. Using :math:`\xi` and the formula of the combined model, it calculates the expected final accuracy at the target position (i.e., the total number of epochs). This is implemented by ``f_comb``.

+

+*

+ Step3: Assessing. If the fitting result does not converge, the predicted value is ``None``. In this case, we return ``AssessResult.Good`` to ask for more accuracy information and predict again. Otherwise, the ``predict()`` function returns a positive value. If this value is strictly greater than the best final performance in history multiplied by ``THRESHOLD`` (default value = 0.95), we return ``AssessResult.Good``\ ; otherwise, we return ``AssessResult.Bad``.
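The Step3 decision can be expressed in a few lines of Python. ``judge`` is a hypothetical function, and ``AssessResult`` here is a local stand-in enum so the sketch runs without NNI installed; the real assessor returns ``nni.assessor.AssessResult`` values.

```python
from enum import Enum


class AssessResult(Enum):
    """Stand-in for NNI's AssessResult so this sketch runs without NNI."""
    Good = True
    Bad = False


def judge(predicted, best_final, threshold=0.95):
    """Step3 decision rule as described above (illustrative sketch)."""
    # A non-converged fit yields no prediction: keep the trial running
    # so that more intermediate results can be collected.
    if predicted is None:
        return AssessResult.Good
    # Keep the trial only if its predicted final value beats the cutoff.
    if predicted > best_final * threshold:
        return AssessResult.Good
    return AssessResult.Bad


print(judge(None, 0.9))   # AssessResult.Good
print(judge(0.86, 0.9))   # cutoff is 0.855, so AssessResult.Good
print(judge(0.85, 0.9))   # AssessResult.Bad
```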

+

+The figure below shows the result of our algorithm on MNIST trial history data: green points are the data obtained by the assessor, blue points are future data that is still unknown, and the red line is the curve predicted by the Curve Fitting Assessor.

+

+

+.. image:: ../../img/curvefitting_example.PNG

+ :target: ../../img/curvefitting_example.PNG

+ :alt: examples

+

+

+Usage

+-----

+

+To use the Curve Fitting Assessor, add the following spec to your experiment's YAML config file:

+

+.. code-block:: yaml

+

+   assessor:
+     builtinAssessorName: Curvefitting
+     classArgs:
+       # (required) The total number of epochs.
+       # We need to know the number of epochs to determine which point we need to predict.
+       epoch_num: 20
+       # (optional) To save computing resources, we start predicting only after receiving start_step reported intermediate results.
+       # The default value of start_step is 6.
+       start_step: 6
+       # (optional) The threshold used to decide whether to early stop a poorly performing curve.
+       # For example: if threshold = 0.95 and the best performance in the history is 0.9, then we stop any trial whose predicted value is lower than 0.95 * 0.9 = 0.855.
+       # The default value of threshold is 0.95.
+       threshold: 0.95
+       # (optional) The gap interval between Assessor judgements.
+       # For example: if gap = 2 and start_step = 6, then we assess the result when we get 6, 8, 10, 12, ... intermediate results.
+       # The default value of gap is 1.
+       gap: 1

+

+Limitation

+----------

+

+According to the original paper, only increasing functions are supported. Therefore, this assessor can only be used to maximize optimization metrics. For example, it can be used for accuracy, but not for loss.

+

+File Structure

+--------------

+

+The assessor has a lot of different files, functions, and classes. Here we briefly describe a few of them.

+

+

+* ``curvefunctions.py`` includes all the function expressions and default parameters.

+* ``modelfactory.py`` implements learning and predicting, including the corresponding calculations.

+* ``curvefitting_assessor.py`` is the assessor, which receives the trial history and assesses whether to early stop the trial.

+

+TODO

+----

+

+

+* Further improve the accuracy of the prediction and test it on more models.

diff --git a/docs/en_US/Assessor/CustomizeAssessor.rst b/docs/en_US/Assessor/CustomizeAssessor.rst

new file mode 100644

index 0000000000..3926d7a306

--- /dev/null

+++ b/docs/en_US/Assessor/CustomizeAssessor.rst

@@ -0,0 +1,67 @@

+Customize Assessor

+==================

+

+NNI supports building your own assessor to meet your tuning needs.

+

+If you want to implement a customized Assessor, there are three things to do:

+

+

+#. Inherit the base Assessor class

+#. Implement the ``assess_trial`` function

+#. Configure your customized Assessor in experiment YAML config file

+

+**1. Inherit the base Assessor class**

+

+.. code-block:: python

+

+   from nni.assessor import Assessor
+
+   class CustomizedAssessor(Assessor):
+       def __init__(self, arg1):
+           # Values under classArgs in the config file are passed in as keyword arguments.
+           self.arg1 = arg1

+

+**2. Implement the assess_trial function**

+

+.. code-block:: python

+

+   from nni.assessor import Assessor, AssessResult
+
+   class CustomizedAssessor(Assessor):
+       def __init__(self, arg1):
+           self.arg1 = arg1
+
+       def assess_trial(self, trial_job_id, trial_history):
+           """
+           Determines whether a trial should be killed. Must override.
+           trial_job_id: identifier of the trial being assessed.
+           trial_history: a list of intermediate result objects.
+           Returns AssessResult.Good or AssessResult.Bad.
+           """
+           # Implement your assessment logic here.
+           # Placeholder: never stops a trial.
+           return AssessResult.Good

+

+**3. Configure your customized Assessor in experiment YAML config file**

+

+NNI needs to locate and instantiate your customized Assessor class, so you need to specify the location of the class and pass literal values as parameters to its ``__init__`` constructor.

+

+.. code-block:: yaml

+

+   assessor:
+     codeDir: /home/abc/myassessor
+     classFileName: my_customized_assessor.py
+     className: CustomizedAssessor
+     # Any parameters to pass to your Assessor class's __init__ constructor
+     # can be specified in this optional classArgs field, for example
+     classArgs:
+       arg1: value1

+

+Note that in step **2**, ``trial_history`` contains exactly the objects that the trial sends to the assessor through the SDK function ``report_intermediate_result``.
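As a sketch of what step **2** might contain, the class below stops a trial whose recent results have not improved on its earlier best. It is a hypothetical example, not an NNI built-in: it assumes each reported value is a plain number to be maximized, and it defines a local ``AssessResult`` stand-in so it runs without NNI. In a real assessor, subclass ``nni.assessor.Assessor`` and return ``nni.assessor.AssessResult`` instead; the method follows NNI's ``assess_trial(trial_job_id, trial_history)`` signature.

```python
from enum import Enum


class AssessResult(Enum):
    """Stand-in for nni.assessor.AssessResult (lets the sketch run without NNI)."""
    Good = True
    Bad = False


class PlateauAssessor:
    """Hypothetical assessor: stops a trial whose latest `patience`
    reported values do not improve on its earlier best."""

    def __init__(self, patience=3):
        self.patience = patience

    def assess_trial(self, trial_job_id, trial_history):
        # trial_history holds the values reported so far through
        # report_intermediate_result, in reporting order.
        if len(trial_history) <= self.patience:
            return AssessResult.Good
        best_before = max(trial_history[:-self.patience])
        recent_best = max(trial_history[-self.patience:])
        return AssessResult.Good if recent_best > best_before else AssessResult.Bad
```

``patience`` would be passed through ``classArgs`` in the YAML config, just like ``arg1`` above.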

+

+The working directory of your assessor is ``/nni-experiments//log``\ , which can be retrieved with the environment variable ``NNI_LOG_DIRECTORY``.
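A minimal sketch of writing the assessor's own log file into that directory. The helper names and the log file name are hypothetical, and falling back to the system temp directory when ``NNI_LOG_DIRECTORY`` is unset is our own assumption so the snippet also runs outside an experiment.

```python
import os
import tempfile
from pathlib import Path


def assessor_log_dir():
    """Directory for the assessor's own log files.

    Uses NNI_LOG_DIRECTORY when it is set; the temp-dir fallback is an
    assumption so the sketch also runs outside an experiment.
    """
    return Path(os.environ.get("NNI_LOG_DIRECTORY", tempfile.gettempdir()))


def append_log(message, filename="my_assessor.log"):
    """Append one line to a (hypothetical) assessor log file."""
    path = assessor_log_dir() / filename
    with path.open("a", encoding="utf-8") as f:
        f.write(message + "\n")
    return path
```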

+

+For more detailed examples, see:

+

+..

+

+ * :githublink:`medianstop-assessor `

+ * :githublink:`curvefitting-assessor `

+

diff --git a/docs/en_US/Assessor/MedianstopAssessor.rst b/docs/en_US/Assessor/MedianstopAssessor.rst

new file mode 100644

index 0000000000..5a307bf0d3

--- /dev/null

+++ b/docs/en_US/Assessor/MedianstopAssessor.rst

@@ -0,0 +1,7 @@

+Medianstop Assessor on NNI

+==========================

+

+Median Stop

+-----------

+

+Medianstop is a simple early stopping rule mentioned in this `paper `__. It stops a pending trial X after step S if the trial’s best objective value by step S is strictly worse than the median value of the running averages of all completed trials’ objectives reported up to step S.
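The rule can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the built-in Medianstop implementation: it assumes a metric to maximize, 1-based steps, and that each history is a list of reported values; ``median_stop`` is a hypothetical name.

```python
from statistics import median


def running_average(values):
    """Mean of the values reported so far."""
    return sum(values) / len(values)


def median_stop(trial_history, completed_histories, step):
    """Return True if the trial should be stopped at `step` (1-based).

    Assumes a metric to maximize (e.g. accuracy).
    """
    best_so_far = max(trial_history[:step])
    # Running average of each completed trial's objectives up to the same step.
    averages = [running_average(h[:step]) for h in completed_histories if h[:step]]
    if not averages:
        return False  # nothing to compare against yet
    # Stop only if the trial's best is strictly worse than the median.
    return best_so_far < median(averages)
```

For example, if three completed trials have running averages 0.55, 0.65 and 0.45 at step 2, the median is 0.55, so a trial whose best value by step 2 is 0.5 would be stopped.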

diff --git a/docs/en_US/CommunitySharings/AutoCompletion.rst b/docs/en_US/CommunitySharings/AutoCompletion.rst

new file mode 100644

index 0000000000..cb0c76c12f

--- /dev/null

+++ b/docs/en_US/CommunitySharings/AutoCompletion.rst

@@ -0,0 +1,55 @@

+Auto Completion for nnictl Commands

+===================================

+

+NNI's command line tool **nnictl** supports auto-completion, i.e., you can complete an nnictl command by pressing the ``tab`` key.

+

+For example, if the current command is

+

+.. code-block:: bash

+

+ nnictl cre

+

+Pressing the ``tab`` key completes it to

+

+.. code-block:: bash

+

+ nnictl create

+

+Currently, auto-completion is not enabled by default if you install NNI through ``pip``\ , and it only works on Linux with the bash shell. To enable this feature on your computer, follow the steps below:

+

+Step 1. Download ``bash-completion``

+^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

+

+.. code-block:: bash

+

+ cd ~

+ wget https://raw.githubusercontent.com/microsoft/nni/{nni-version}/tools/bash-completion

+

+Here, {nni-version} should be replaced by the version of NNI, e.g., ``master``\ , ``v1.9``. You can also check the latest ``bash-completion`` script :githublink:`here `.

+

+Step 2. Install the script

+^^^^^^^^^^^^^^^^^^^^^^^^^^

+

+If you are using a root account and want to install this script for all users:

+

+.. code-block:: bash

+

+ install -m644 ~/bash-completion /usr/share/bash-completion/completions/nnictl

+

+If you only want to install this script for yourself:

+

+.. code-block:: bash

+

+ mkdir -p ~/.bash_completion.d

+ install -m644 ~/bash-completion ~/.bash_completion.d/nnictl

+ echo '[[ -f ~/.bash_completion.d/nnictl ]] && source ~/.bash_completion.d/nnictl' >> ~/.bash_completion

+

+Step 3. Reopen your terminal

+^^^^^^^^^^^^^^^^^^^^^^^^^^^^

+

+Reopen your terminal and you should be able to use the auto-completion feature. Enjoy!

+

+Step 4. Uninstall

+^^^^^^^^^^^^^^^^^

+

+If you want to uninstall this feature, just revert the changes in the steps above.

diff --git a/docs/en_US/CommunitySharings/HpoComparison.rst b/docs/en_US/CommunitySharings/HpoComparison.rst

new file mode 100644

index 0000000000..75925ab2e9

--- /dev/null

+++ b/docs/en_US/CommunitySharings/HpoComparison.rst

@@ -0,0 +1,385 @@

+Hyper Parameter Optimization Comparison

+=======================================

+

+*Posted by Anonymous Author*

+

+Comparison of Hyperparameter Optimization (HPO) algorithms on several problems.

+

+The following Hyperparameter Optimization algorithms are compared:

+

+

+* `Random Search <../Tuner/BuiltinTuner.rst>`__

+* `Grid Search <../Tuner/BuiltinTuner.rst>`__

+* `Evolution <../Tuner/BuiltinTuner.rst>`__

+* `Anneal <../Tuner/BuiltinTuner.rst>`__

+* `Metis <../Tuner/BuiltinTuner.rst>`__

+* `TPE <../Tuner/BuiltinTuner.rst>`__

+* `SMAC <../Tuner/BuiltinTuner.rst>`__

+* `HyperBand <../Tuner/BuiltinTuner.rst>`__

+* `BOHB <../Tuner/BuiltinTuner.rst>`__

+

+All algorithms run in NNI's local environment.

+

+Machine Environment:

+

+.. code-block:: bash

+

+ OS: Linux Ubuntu 16.04 LTS

+ CPU: Intel(R) Xeon(R) CPU E5-2690 v3 @ 2.60GHz 2600 MHz

+ Memory: 112 GB

+ NNI Version: v0.7

+ NNI Mode(local|pai|remote): local

+ Python version: 3.6

+ Is conda or virtualenv used?: Conda

+ is running in docker?: no

+

+AutoGBDT Example

+----------------

+

+Problem Description

+^^^^^^^^^^^^^^^^^^^

+

+A nonconvex hyperparameter search problem based on the `AutoGBDT <../TrialExample/GbdtExample.rst>`__ example.

+

+Search Space

+^^^^^^^^^^^^

+

+.. code-block:: json

+

+ {

+ "num_leaves": {

+ "_type": "choice",

+ "_value": [10, 12, 14, 16, 18, 20, 22, 24, 28, 32, 48, 64, 96, 128]

+ },

+ "learning_rate": {

+ "_type": "choice",

+ "_value": [0.00001, 0.0001, 0.001, 0.01, 0.05, 0.1, 0.2, 0.5]

+ },

+ "max_depth": {

+ "_type": "choice",

+ "_value": [-1, 2, 3, 4, 5, 6, 8, 10, 12, 14, 16, 18, 20, 22, 24, 28, 32, 48, 64, 96, 128]

+ },

+ "feature_fraction": {

+ "_type": "choice",

+ "_value": [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2]

+ },

+ "bagging_fraction": {

+ "_type": "choice",

+ "_value": [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2]

+ },

+ "bagging_freq": {

+ "_type": "choice",

+ "_value": [1, 2, 4, 8, 10, 12, 14, 16]

+ }

+ }

+

+The total search space contains 1,204,224 configurations. We set the maximum number of trials to 1000 and the time limit to 48 hours.
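The size of 1,204,224 follows from multiplying the number of candidates for each ``choice`` parameter (14 × 8 × 21 × 8 × 8 × 8). The snippet below reproduces that count; it copies only the ``_value`` lists from the search space above.

```python
from math import prod

# Candidate values copied from the AutoGBDT search space above
# (only the "_value" lists matter for counting).
search_space = {
    "num_leaves": [10, 12, 14, 16, 18, 20, 22, 24, 28, 32, 48, 64, 96, 128],
    "learning_rate": [0.00001, 0.0001, 0.001, 0.01, 0.05, 0.1, 0.2, 0.5],
    "max_depth": [-1, 2, 3, 4, 5, 6, 8, 10, 12, 14, 16, 18, 20, 22, 24, 28, 32, 48, 64, 96, 128],
    "feature_fraction": [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2],
    "bagging_fraction": [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2],
    "bagging_freq": [1, 2, 4, 8, 10, 12, 14, 16],
}

# Every parameter is a "choice", so the grid size is the product of the list lengths.
size = prod(len(values) for values in search_space.values())
print(f"{size:,} configurations")  # 1,204,224 configurations
```

With 1000 trials, each algorithm can therefore evaluate less than 0.1% of the grid.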

+

+Results

+^^^^^^^

+

+.. list-table::

+ :header-rows: 1

+ :widths: auto

+

+ * - Algorithm

+ - Best loss

+ - Average of Best 5 Losses

+ - Average of Best 10 Losses

+ * - Random Search

+ - 0.418854

+ - 0.420352

+ - 0.421553

+ * - Random Search

+ - 0.417364

+ - 0.420024

+ - 0.420997

+ * - Random Search

+ - 0.417861

+ - 0.419744

+ - 0.420642

+ * - Grid Search

+ - 0.498166

+ - 0.498166

+ - 0.498166

+ * - Evolution

+ - 0.409887

+ - 0.409887

+ - 0.409887

+ * - Evolution

+ - 0.413620

+ - 0.413875

+ - 0.414067

+ * - Evolution

+ - 0.409887

+ - 0.409887

+ - 0.409887

+ * - Anneal

+ - 0.414877

+ - 0.417289

+ - 0.418281

+ * - Anneal

+ - 0.409887

+ - 0.409887

+ - 0.410118

+ * - Anneal

+ - 0.413683

+ - 0.416949

+ - 0.417537

+ * - Metis

+ - 0.416273

+ - 0.420411

+ - 0.422380

+ * - Metis

+ - 0.420262

+ - 0.423175

+ - 0.424816

+ * - Metis

+ - 0.421027

+ - 0.424172

+ - 0.425714

+ * - TPE

+ - 0.414478

+ - 0.414478

+ - 0.414478

+ * - TPE

+ - 0.415077

+ - 0.417986

+ - 0.418797

+ * - TPE

+ - 0.415077

+ - 0.417009

+ - 0.418053

+ * - SMAC

+ - **0.408386**

+ - **0.408386**

+ - **0.408386**

+ * - SMAC

+ - 0.414012

+ - 0.414012

+ - 0.414012

+ * - SMAC

+ - **0.408386**

+ - **0.408386**

+ - **0.408386**

+ * - BOHB

+ - 0.410464

+ - 0.415319

+ - 0.417755

+ * - BOHB

+ - 0.418995

+ - 0.420268

+ - 0.422604

+ * - BOHB

+ - 0.415149

+ - 0.418072

+ - 0.418932

+ * - HyperBand

+ - 0.414065

+ - 0.415222

+ - 0.417628

+ * - HyperBand

+ - 0.416807

+ - 0.417549

+ - 0.418828

+ * - HyperBand

+ - 0.415550

+ - 0.415977

+ - 0.417186

+ * - GP

+ - 0.414353

+ - 0.418563

+ - 0.420263

+ * - GP

+ - 0.414395

+ - 0.418006

+ - 0.420431

+ * - GP

+ - 0.412943

+ - 0.416566

+ - 0.418443

+

+

+In this example, all the algorithms are used with default parameters. Metis completed only about 300 trials because it runs slowly due to the O(n^3) time complexity of its Gaussian Process.

+

+RocksDB Benchmark 'fillrandom' and 'readrandom'

+-----------------------------------------------

+

+Problem Description

+^^^^^^^^^^^^^^^^^^^

+

+`DB_Bench `__ is the main tool used to benchmark `RocksDB `__\ 's performance. It has many hyperparameters to tune.

+

+The performance of ``DB_Bench`` depends on the machine configuration and installation method. We run ``DB_Bench`` on a Linux machine and install RocksDB as a shared library.

+

+Machine configuration

+^^^^^^^^^^^^^^^^^^^^^

+

+.. code-block:: bash

+

+ RocksDB: version 6.1

+ CPU: 6 * Intel(R) Xeon(R) CPU E5-2690 v4 @ 2.60GHz

+ CPUCache: 35840 KB

+ Keys: 16 bytes each

+ Values: 100 bytes each (50 bytes after compression)

+ Entries: 1000000

+

+Storage performance

+^^^^^^^^^^^^^^^^^^^

+

+**Latency**\ : each IO request takes some time to complete; the average of this time is called the average latency. Several factors affect it, including network connection quality and hard disk IO performance.

+

+**IOPS**\ : **IO operations per second**\ , i.e., the number of *read or write operations* that can be completed in one second.

+

+**IO size**\ : **the size of each IO request**. Depending on the operating system and the application or service that needs disk access, each request reads or writes a certain amount of data at once.

+

+**Throughput (in MB/s) = Average IO size x IOPS**
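A worked example of the formula, using made-up numbers and assuming 1 MB = 1024 KB; ``throughput_mb_per_s`` is a hypothetical helper.

```python
def throughput_mb_per_s(avg_io_size_kb, iops):
    """Throughput (MB/s) = average IO size x IOPS, assuming 1 MB = 1024 KB."""
    return avg_io_size_kb / 1024 * iops


# 256 requests per second of 4 KB each move 1 MB per second.
print(throughput_mb_per_s(4, 256))  # 1.0
```

Because throughput scales linearly with IOPS at a fixed IO size, comparing IOPS is enough when the IO size is the same across trials.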

+

+IOPS reflects online processing capability, so we use IOPS as the metric in this experiment.

+

+Search Space

+^^^^^^^^^^^^

+

+.. code-block:: json

+

+ {

+ "max_background_compactions": {

+ "_type": "quniform",

+ "_value": [1, 256, 1]

+ },

+ "block_size": {

+ "_type": "quniform",

+ "_value": [1, 500000, 1]

+ },

+ "write_buffer_size": {

+ "_type": "quniform",

+ "_value": [1, 130000000, 1]

+ },

+ "max_write_buffer_number": {

+ "_type": "quniform",

+ "_value": [1, 128, 1]

+ },

+ "min_write_buffer_number_to_merge": {

+ "_type": "quniform",

+ "_value": [1, 32, 1]

+ },

+ "level0_file_num_compaction_trigger": {

+ "_type": "quniform",

+ "_value": [1, 256, 1]

+ },

+ "level0_slowdown_writes_trigger": {

+ "_type": "quniform",

+ "_value": [1, 1024, 1]

+ },

+ "level0_stop_writes_trigger": {

+ "_type": "quniform",

+ "_value": [1, 1024, 1]

+ },

+ "cache_size": {

+ "_type": "quniform",

+ "_value": [1, 30000000, 1]

+ },

+ "compaction_readahead_size": {

+ "_type": "quniform",

+ "_value": [1, 30000000, 1]

+ },

+ "new_table_reader_for_compaction_inputs": {

+ "_type": "randint",

+ "_value": [1]

+ }

+ }

+

+The search space is enormous (roughly 10^43 configurations), so we set the maximum number of trials to 100 to limit the computation resources.
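One way to estimate the size is to count the distinct values each ``quniform`` parameter can take (with ``q = 1`` and integer bounds, that is ``high - low + 1``) and multiply them. The sketch below does this; treating ``new_table_reader_for_compaction_inputs`` (``randint`` with a single ``_value`` entry) as contributing one value is our assumption.

```python
from math import log10, prod

# (low, high, q) for each quniform parameter in the search space above.
quniform_ranges = {
    "max_background_compactions": (1, 256, 1),
    "block_size": (1, 500000, 1),
    "write_buffer_size": (1, 130000000, 1),
    "max_write_buffer_number": (1, 128, 1),
    "min_write_buffer_number_to_merge": (1, 32, 1),
    "level0_file_num_compaction_trigger": (1, 256, 1),
    "level0_slowdown_writes_trigger": (1, 1024, 1),
    "level0_stop_writes_trigger": (1, 1024, 1),
    "cache_size": (1, 30000000, 1),
    "compaction_readahead_size": (1, 30000000, 1),
}


def num_values(low, high, q):
    """Distinct values quniform(low, high, q) can produce, assuming
    integer bounds whose difference is a multiple of q."""
    return (high - low) // q + 1


# The single-valued randint parameter multiplies the size by 1.
size = prod(num_values(*r) for r in quniform_ranges.values())
print(f"about 10^{log10(size):.1f}")
```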

+

+Results

+^^^^^^^

+

+fillrandom' Benchmark

+^^^^^^^^^^^^^^^^^^^^^

+

+.. list-table::

+ :header-rows: 1

+ :widths: auto

+

+ * - Model

+ - Best IOPS (Repeat 1)

+ - Best IOPS (Repeat 2)

+ - Best IOPS (Repeat 3)

+ * - Random

+ - 449901

+ - 427620

+ - 477174

+ * - Anneal

+ - 461896

+ - 467150

+ - 437528

+ * - Evolution

+ - 436755

+ - 389956

+ - 389790

+ * - TPE

+ - 378346

+ - 482316

+ - 468989

+ * - SMAC

+ - 491067

+ - 490472

+ - **491136**

+ * - Metis

+ - 444920

+ - 457060

+ - 454438

+

+

+Figure:

+

+

+.. image:: ../../img/hpo_rocksdb_fillrandom.png

+ :target: ../../img/hpo_rocksdb_fillrandom.png

+ :alt:

+

+

+'readrandom' Benchmark

+^^^^^^^^^^^^^^^^^^^^^^

+

+.. list-table::

+ :header-rows: 1

+ :widths: auto

+

+ * - Model

+ - Best IOPS (Repeat 1)

+ - Best IOPS (Repeat 2)

+ - Best IOPS (Repeat 3)

+ * - Random

+ - 2276157

+ - 2285301

+ - 2275142

+ * - Anneal

+ - 2286330

+ - 2282229

+ - 2284012

+ * - Evolution

+ - 2286524

+ - 2283673

+ - 2283558

+ * - TPE

+ - 2287366

+ - 2282865

+ - 2281891

+ * - SMAC

+ - 2270874

+ - 2284904

+ - 2282266

+ * - Metis

+ - **2287696**

+ - 2283496

+ - 2277701

+

+

+Figure:

+

+

+.. image:: ../../img/hpo_rocksdb_readrandom.png

+ :target: ../../img/hpo_rocksdb_readrandom.png

+ :alt:

+

diff --git a/docs/en_US/CommunitySharings/ModelCompressionComparison.rst b/docs/en_US/CommunitySharings/ModelCompressionComparison.rst

new file mode 100644

index 0000000000..12cc009e25

--- /dev/null

+++ b/docs/en_US/CommunitySharings/ModelCompressionComparison.rst

@@ -0,0 +1,133 @@

+Comparison of Filter Pruning Algorithms

+=======================================

+

+To provide an initial insight into the performance of various filter pruning algorithms,

+we conduct extensive experiments with various pruning algorithms on some benchmark models and datasets.

+We present the experiment results in this document.
+In addition, we provide instructions for reproducing these experiments to facilitate further contributions to this effort.

+

+Experiment Setting

+------------------

+

+The experiments are performed with the following pruners/datasets/models:

+

+

+*

+ Models: :githublink:`VGG16, ResNet18, ResNet50 `

+

+*

+ Datasets: CIFAR-10

+

+*

+ Pruners:

+

+

+ * These pruners are included:

+

+ * Pruners with scheduling : ``SimulatedAnnealing Pruner``\ , ``NetAdapt Pruner``\ , ``AutoCompress Pruner``.

+ Given the overall sparsity requirement, these pruners can automatically generate a sparsity distribution among different layers.

+ * One-shot pruners: ``L1Filter Pruner``\ , ``L2Filter Pruner``\ , ``FPGM Pruner``.

+ The sparsity of each layer is set equal to the overall sparsity in this experiment.

+

+ *

+ Only **filter pruning** performances are compared here.

+

+ For the pruners with scheduling, ``L1Filter Pruner`` is used as the base algorithm. That is, after the scheduling algorithm decides the sparsity distribution, ``L1Filter Pruner`` performs the actual pruning.

+

+ *

+ All the pruners listed above are implemented in :githublink:`nni `.
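The ranking criterion behind ``L1Filter Pruner`` (prune the filters with the smallest L1 norms) can be illustrated in plain Python. This is a simplified sketch, not NNI's implementation, which operates on PyTorch weight tensors; here each filter is a flat list of weights, and, as in the one-shot setting above, a single sparsity value is applied to the layer. ``l1_filter_mask`` is a hypothetical name.

```python
def l1_filter_mask(filters, sparsity):
    """Return a 0/1 mask that keeps the filters with the largest L1 norms.

    `filters` is a list of filters, each flattened to a list of weights;
    `sparsity` is the fraction of filters to prune in this layer.
    """
    num_prune = int(len(filters) * sparsity)
    l1_norms = [sum(abs(w) for w in f) for f in filters]
    # Indices of the filters with the smallest L1 norms get pruned.
    order = sorted(range(len(filters)), key=lambda i: l1_norms[i])
    prune_idx = set(order[:num_prune])
    return [0 if i in prune_idx else 1 for i in range(len(filters))]


layer = [[0.1, -0.2], [1.0, 0.5], [-0.05, 0.0], [0.3, -0.4]]
print(l1_filter_mask(layer, sparsity=0.5))  # [0, 1, 0, 1]
```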

+

+Experiment Result

+-----------------

+

+For each dataset/model/pruner combination, we prune the model to different levels by setting a series of target sparsities for the pruner.

+

+Here we plot both the **Number of Weights - Performance** curve and the **FLOPs - Performance** curve.

+As a reference, we also plot the result declared in the paper `AutoCompress: An Automatic DNN Structured Pruning Framework for Ultra-High Compression Rates `__ for models VGG16 and ResNet18 on CIFAR-10.

+

+The experiment results are shown in the following figures:

+

+CIFAR-10, VGG16:

+

+

+.. image:: ../../../examples/model_compress/comparison_of_pruners/img/performance_comparison_vgg16.png

+ :target: ../../../examples/model_compress/comparison_of_pruners/img/performance_comparison_vgg16.png

+ :alt:

+

+

+CIFAR-10, ResNet18:

+

+

+.. image:: ../../../examples/model_compress/comparison_of_pruners/img/performance_comparison_resnet18.png

+ :target: ../../../examples/model_compress/comparison_of_pruners/img/performance_comparison_resnet18.png

+ :alt:

+

+

+CIFAR-10, ResNet50:

+

+

+.. image:: ../../../examples/model_compress/comparison_of_pruners/img/performance_comparison_resnet50.png

+ :target: ../../../examples/model_compress/comparison_of_pruners/img/performance_comparison_resnet50.png

+ :alt:

+

+

+Analysis

+--------

+

+From the experiment result, we get the following conclusions:

+

+

+* Given the constraint on the number of parameters, the pruners with scheduling (``AutoCompress Pruner``, ``SimulatedAnnealing Pruner``) perform better than the others when the constraint is strict. However, they have no such advantage in the FLOPs/performance comparison, since only the constraint on the number of parameters is considered in the optimization process;

+* The basic algorithms ``L1Filter Pruner``, ``L2Filter Pruner`` and ``FPGM Pruner`` perform very similarly in these experiments;

+* ``NetAdapt Pruner`` cannot achieve a very high compression rate, because it prunes only one layer in each pruning iteration. This leads to unacceptable complexity if the sparsity per iteration is much lower than the overall sparsity constraint.

+

+Experiments Reproduction

+------------------------

+

+Implementation Details

+^^^^^^^^^^^^^^^^^^^^^^

+

+

+*

+ The experiment results are all collected with the default configuration of the pruners in nni, which means that when we call a pruner class in nni, we don't change any default class arguments.

+

+*

+ Both FLOPs and the number of parameters are counted with :githublink:`Model FLOPs/Parameters Counter ` after :githublink:`model speed up `.

+ This avoids potential issues with counting them on masked models.

+

+*

+ The experiment code can be found :githublink:`here `.

+

+Experiment Result Rendering

+^^^^^^^^^^^^^^^^^^^^^^^^^^^

+

+

+*

+ If you follow the practice in the :githublink:`example `\ , for every single pruning experiment, the experiment result will be saved in JSON format as follows:

+

+ .. code-block:: json

+

+ {

+ "performance": {"original": 0.9298, "pruned": 0.1, "speedup": 0.1, "finetuned": 0.7746},

+ "params": {"original": 14987722.0, "speedup": 167089.0},

+ "flops": {"original": 314018314.0, "speedup": 38589922.0}

+ }

+

+*

+ The experiment results are saved :githublink:`here `.

+ You can refer to :githublink:`analyze ` to plot new performance comparison figures.
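As an example of analyzing such a file, the snippet below loads the sample JSON shown above and derives the parameter compression ratio, the FLOPs reduction and the accuracy drop after fine-tuning. Which quantities to derive is our choice for illustration; ``summarize`` is a hypothetical helper, not part of the analysis script.

```python
import json

# The sample result from above, as it would be read from a result file.
result = json.loads("""
{
  "performance": {"original": 0.9298, "pruned": 0.1, "speedup": 0.1, "finetuned": 0.7746},
  "params": {"original": 14987722.0, "speedup": 167089.0},
  "flops": {"original": 314018314.0, "speedup": 38589922.0}
}
""")


def summarize(result):
    """Compression ratios and accuracy change for one pruning run."""
    return {
        "param_compression": result["params"]["original"] / result["params"]["speedup"],
        "flops_reduction": result["flops"]["original"] / result["flops"]["speedup"],
        "accuracy_drop": result["performance"]["original"] - result["performance"]["finetuned"],
    }


for name, value in summarize(result).items():
    print(f"{name}: {value:.3f}")
```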

+

+Contribution

+------------

+

+TODO Items

+^^^^^^^^^^

+

+

+* Pruners constrained by FLOPS/latency

+* More pruning algorithms/datasets/models

+

+Issues

+^^^^^^

+

+For algorithm implementation & experiment issues, please `create an issue `__.

diff --git a/docs/en_US/CommunitySharings/NNI_AutoFeatureEng.rst b/docs/en_US/CommunitySharings/NNI_AutoFeatureEng.rst

new file mode 100644

index 0000000000..d01a824517

--- /dev/null

+++ b/docs/en_US/CommunitySharings/NNI_AutoFeatureEng.rst

@@ -0,0 +1,141 @@

+.. role:: raw-html(raw)

+ :format: html

+

+

+NNI review article from Zhihu: :raw-html:`` - By Garvin Li

+========================================================================================================================

+

+This article is by an NNI user on the Zhihu forum. In it, Garvin shares his experience using NNI for automatic feature engineering. We think this article is very useful for users who are interested in using NNI for feature engineering. With the author's permission, we translated the original article into English.

+

+**Original article (source)**\ : `What do you think of NNI, the AutoML platform newly released by Microsoft? By Garvin Li `__

+

+01 Overview of AutoML

+---------------------

+

+In the author's opinion, AutoML is not only about hyperparameter optimization, but

+also a process that can target various stages of the machine learning process,

+including feature engineering, NAS, HPO, etc.

+

+02 Overview of NNI

+------------------

+

+NNI (Neural Network Intelligence) is an open source AutoML toolkit from

+Microsoft, to help users design and tune machine learning models, neural network

+architectures, or a complex system’s parameters in an efficient and automatic

+way.

+

+Link: https://github.com/Microsoft/nni

+

+In general, most Microsoft tools have one prominent characteristic: the

+design is highly reasonable (regardless of the technology innovation degree).

+NNI's AutoFeatureENG basically meets all user requirements of AutoFeatureENG

+with a very reasonable underlying framework design.

+

+03 Details of NNI-AutoFeatureENG

+--------------------------------

+

+..

+

+ This article is based on the GitHub project https://github.com/SpongebBob/tabular_automl_NNI.

+

+

+New users can do AutoFeatureENG with NNI easily and efficiently. To explore the AutoFeatureENG capability, download the following required files, and then install NNI through pip.

+

+

+.. image:: https://pic3.zhimg.com/v2-8886eea730cad25f5ac06ef1897cd7e4_r.jpg

+ :target: https://pic3.zhimg.com/v2-8886eea730cad25f5ac06ef1897cd7e4_r.jpg

+ :alt:

+

+NNI treats AutoFeatureENG as a two-step task: feature generation exploration and feature selection. Feature generation exploration is mainly about feature derivation and high-order feature combination.

+

+04 Feature Exploration

+----------------------

+

+For feature derivation, NNI offers many operations that can automatically generate new features, listed `as follows `__\ :

+

+**count**\ : Count encoding is based on replacing categories with their counts computed on the train set, also named frequency encoding.

+

+**target**\ : Target encoding is based on encoding categorical variable values with the mean of target variable per value.

+

+**embedding**\ : Treat features as sentences and generate vectors using *Word2Vec*.

+

+**crosscount**\ : Count encoding on more than one dimension, similar to CTR (Click Through Rate).

+

+**aggregate**\ : Decide the aggregation functions of the features, including min/max/mean/var.

+

+**nunique**\ : Statistics on the number of unique values of a feature.

+

+**histsta**\ : Statistics of feature buckets, like histogram statistics.
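The first two operations can be sketched in plain Python. These are illustrative implementations of count and target encoding as defined above, not the code used by NNI or the referenced project: they encode a single column in memory, whereas in practice the statistics should be computed on the training set only (and target encoding is often smoothed to limit overfitting). The column and label values are made up.

```python
from collections import Counter, defaultdict


def count_encode(column):
    """Replace each category with how often it appears (frequency encoding)."""
    counts = Counter(column)
    return [counts[v] for v in column]


def target_encode(column, target):
    """Replace each category with the mean target value for that category."""
    sums, totals = defaultdict(float), defaultdict(int)
    for v, y in zip(column, target):
        sums[v] += y
        totals[v] += 1
    return [sums[v] / totals[v] for v in column]


c1 = ["a", "b", "a", "c", "a", "b"]
label = [1, 0, 0, 1, 1, 1]
print(count_encode(c1))  # [3, 2, 3, 1, 3, 2]
print(target_encode(c1, label))
```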

+

+The search space can be defined in a **JSON file**\ , which specifies how specific features intersect, which two columns intersect, and how features are generated from the corresponding columns.

+

+

+.. image:: https://pic1.zhimg.com/v2-3c3eeec6eea9821e067412725e5d2317_r.jpg

+ :target: https://pic1.zhimg.com/v2-3c3eeec6eea9821e067412725e5d2317_r.jpg

+ :alt:

+

+

+The picture shows the procedure for defining the search space. NNI provides count encoding as a first-order operation, as well as cross count encoding and aggregate statistics (min, max, var, mean, median, nunique) as second-order operations.

+

+For example, we can search for frequency encoding (value count) features on columns {"C1", ..., "C26"} in the following way:

+

+

+.. image:: https://github.com/JSong-Jia/Pic/blob/master/images/pic%203.jpg

+ :target: https://github.com/JSong-Jia/Pic/blob/master/images/pic%203.jpg

+ :alt:

+

+

+We can also define a cross frequency encoding (value count on cross dimensions) method on columns {"C1",...,"C26"} x {"C1",...,"C26"} in the following way:

+

+

+.. image:: https://github.com/JSong-Jia/Pic/blob/master/images/pic%204.jpg

+ :target: https://github.com/JSong-Jia/Pic/blob/master/images/pic%204.jpg

+ :alt:

+

+

+The purpose of exploration is to generate new features. You can use the **get_next_parameter** function to receive the feature candidates for one trial.

+

+.. code-block:: python
+
+   import nni
+
+   RECEIVED_PARAMS = nni.get_next_parameter()

+

+

+05 Feature selection

+--------------------

+

+To avoid feature explosion and overfitting, feature selection is necessary. For feature selection, NNI-AutoFeatureENG mainly relies on LightGBM (Light Gradient Boosting Machine), a gradient boosting framework developed by Microsoft.

+

+

+.. image:: https://pic2.zhimg.com/v2-7bf9c6ae1303692101a911def478a172_r.jpg

+ :target: https://pic2.zhimg.com/v2-7bf9c6ae1303692101a911def478a172_r.jpg

+ :alt:

+

+

+If you have used **XGBoost** or **GBDT**\ , you know that tree-based algorithms can easily calculate the importance of each feature to the results. LightGBM can therefore perform feature selection naturally.
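Importance-based selection can be sketched as keeping the top-ranked features. The snippet assumes importance scores have already been obtained from a trained tree model such as LightGBM; the feature names and scores are made up, and ``select_by_importance`` and ``keep_ratio`` are hypothetical.

```python
def select_by_importance(importances, keep_ratio=0.5):
    """Keep the most important features.

    `importances` maps feature name -> importance score, e.g. as reported
    by a trained tree model. Returns the kept names, most important first.
    """
    ranked = sorted(importances, key=importances.get, reverse=True)
    num_keep = max(1, int(len(ranked) * keep_ratio))
    return ranked[:num_keep]


# Made-up scores, for illustration only.
scores = {"C1_count": 120, "C2_count": 5, "C1_C2_crosscount": 80, "I1": 40}
print(select_by_importance(scores))  # ['C1_count', 'C1_C2_crosscount']
```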

+

+The issue is that the selected features might be suitable for *GBDT* (Gradient Boosting Decision Tree), but not for linear algorithms like *LR* (Logistic Regression).

+

+

+.. image:: https://pic4.zhimg.com/v2-d2f919497b0ed937acad0577f7a8df83_r.jpg

+ :target: https://pic4.zhimg.com/v2-d2f919497b0ed937acad0577f7a8df83_r.jpg

+ :alt:

+

+

+06 Summary

+----------

+

+NNI's AutoFeatureEng sets a well-established standard, showing the operation procedure and available modules, and it is highly convenient to use. However, a simple model is probably not enough for good results.

+

+Suggestions to NNI

+------------------

+

+About Exploration: Using a DNN (like xDeepFM) to extract high-order features might be better.

+

+About Selection: There could be more intelligent options, such as automatic selection system based on downstream models.

+

+Conclusion: NNI can offer users design inspiration, and it is a good open source project. I suggest researchers leverage it to accelerate AI research.

+

+Tip: Because the project's scripts are compiled with gcc7, macOS users may encounter gcc (GNU Compiler Collection) problems. The solution is as follows:

+

+.. code-block:: bash
+
+   brew install libomp

diff --git a/docs/en_US/CommunitySharings/NNI_colab_support.rst b/docs/en_US/CommunitySharings/NNI_colab_support.rst

new file mode 100644

index 0000000000..438f66bb26

--- /dev/null

+++ b/docs/en_US/CommunitySharings/NNI_colab_support.rst

@@ -0,0 +1,47 @@

+Use NNI on Google Colab

+=======================

+

+NNI can easily run on the Google Colab platform. However, Colab doesn't expose its public IP and ports, so by default you cannot access NNI's Web UI on Colab. To solve this, you need reverse proxy software such as ``ngrok`` or ``frp``. This tutorial shows you how to use ngrok to access NNI's Web UI on Colab.

+

+How to Open NNI's Web UI on Google Colab

+----------------------------------------

+

+

+#. Install the required packages and software.

+

+.. code-block:: bash

+

+ ! pip install nni # install nni

+ ! wget https://bin.equinox.io/c/4VmDzA7iaHb/ngrok-stable-linux-amd64.zip # download ngrok and unzip it

+ ! unzip ngrok-stable-linux-amd64.zip

+ ! mkdir -p nni_repo

+ ! git clone https://github.com/microsoft/nni.git nni_repo/nni # clone NNI's official repo to get examples

+

+

+#. Register an ngrok account `here `__\ , then connect to your account using your authtoken.

+

+.. code-block:: bash

+

+ ! ./ngrok authtoken YOUR_AUTHTOKEN # replace YOUR_AUTHTOKEN with the authtoken of your ngrok account

+

+

+#. Start an NNI example on a port greater than 1024, then start ngrok on the same port. If you want to use a GPU, make sure ``gpuNum >= 1`` in ``config.yml``. Use ``get_ipython()`` to start ngrok, because the cell will hang if you run ``! ngrok http 5000 &``.

+

+.. code-block:: bash

+

+ ! nnictl create --config nni_repo/nni/examples/trials/mnist-pytorch/config.yml --port 5000 &

+ get_ipython().system_raw('./ngrok http 5000 &')

+

+

+#. Check the public URL.

+

+.. code-block:: bash

+

+ ! curl -s http://localhost:4040/api/tunnels # don't change the port number 4040

+

+After step 4 you will see a URL like http://xxxx.ngrok.io. Open this URL and you will find NNI's Web UI. Have fun :)
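If you would rather not read the raw JSON from step 4, you can extract the URL in a notebook cell. This is a minimal sketch. It assumes two things: ngrok's local API listens on port 4040, as in step 4, and its response holds a ``tunnels`` list whose entries carry a ``public_url`` field. The helper names are hypothetical.

```python
import json
import urllib.request
from typing import List

# Assumption: ngrok's local inspection API, as queried with curl in step 4.
NGROK_API = "http://localhost:4040/api/tunnels"


def extract_public_urls(payload: str) -> List[str]:
    """Return every public URL listed in an /api/tunnels JSON response."""
    data = json.loads(payload)
    return [t["public_url"] for t in data.get("tunnels", []) if "public_url" in t]


def fetch_public_urls(api_url: str = NGROK_API) -> List[str]:
    """Query the running ngrok agent and return its public URLs."""
    with urllib.request.urlopen(api_url) as resp:
        return extract_public_urls(resp.read().decode("utf-8"))
```

In Colab, run ``print(fetch_public_urls())`` after ngrok has started. The parsing is kept separate from the HTTP call so that it can be checked without a live tunnel.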

+

+Access Web UI with frp

+----------------------

+

+frp is another reverse proxy tool with similar functionality. However, frp doesn't provide free public URLs, so you may need a server with a public IP to act as the frp server. See `here `__ to learn more about how to deploy frp.

diff --git a/docs/en_US/CommunitySharings/NasComparison.rst b/docs/en_US/CommunitySharings/NasComparison.rst

new file mode 100644

index 0000000000..d2a9ac1131

--- /dev/null

+++ b/docs/en_US/CommunitySharings/NasComparison.rst

@@ -0,0 +1,165 @@

+Neural Architecture Search Comparison

+=====================================

+

+*Posted by Anonymous Author*

+

+We train and compare NAS (Neural Architecture Search) models, including AutoKeras, DARTS, ENAS and NAO.

+

+Their source code is available at the links below:

+

+

+*

 AutoKeras: `https://github.com/jhfjhfj1/autokeras `__

+

+*

+ DARTS: `https://github.com/quark0/darts `__

+

+*

+ ENAS: `https://github.com/melodyguan/enas `__

+

+*

+ NAO: `https://github.com/renqianluo/NAO `__

+

+Experiment Description

+----------------------

+

+To avoid over-fitting to **CIFAR-10**\ , we also compare the models on five other datasets: Fashion-MNIST, CIFAR-100, OUI-Adience-Age, ImageNet-10-1 (a subset of ImageNet) and ImageNet-10-2 (another subset of ImageNet). Each of ImageNet-10-1 and ImageNet-10-2 is built by sampling a subset of 10 different labels from ImageNet.

+

+.. list-table::

+ :header-rows: 1

+ :widths: auto

+

+ * - Dataset

+ - Training Size

 - Number of Classes

+ - Descriptions

+ * - `Fashion-MNIST `__

+ - 60,000

+ - 10

+ - T-shirt/top, trouser, pullover, dress, coat, sandal, shirt, sneaker, bag and ankle boot.

+ * - `CIFAR-10 `__

+ - 50,000

+ - 10

+ - Airplanes, cars, birds, cats, deer, dogs, frogs, horses, ships and trucks.

+ * - `CIFAR-100 `__

+ - 50,000

+ - 100

 - Similar to CIFAR-10 but with 100 classes and 600 images per class.

+ * - `OUI-Adience-Age `__

+ - 26,580

+ - 8

 - 8 age groups/labels (0-2, 4-6, 8-13, 15-20, 25-32, 38-43, 48-53, 60+).

+ * - `ImageNet-10-1 `__

+ - 9,750

+ - 10

+ - Coffee mug, computer keyboard, dining table, wardrobe, lawn mower, microphone, swing, sewing machine, odometer and gas pump.

+ * - `ImageNet-10-2 `__

+ - 9,750

+ - 10

 - Drum, banjo, whistle, grand piano, violin, organ, acoustic guitar, trombone, flute and sax.

+

+

+We do not change the default fine-tuning technique in their source code. To match each task, we only change the code for the input image shape and the number of outputs.

+

+The search phase for all NAS methods takes **two days**, and so does the retraining phase. Average results are reported over **three repeated runs**. Our evaluation machines have one Nvidia Tesla P100 GPU, 112GB of RAM and one 2.60GHz CPU (Intel E5-2690).

+

+NAO requires too many computing resources, so we only use NAO-WS, which provides the pipeline script.

+

+For AutoKeras, we used version 0.2.18 because it was the latest version when we started the experiment.

+

+NAS Performance

+---------------

+

+.. list-table::

+ :header-rows: 1

+ :widths: auto

+

+ * - NAS

+ - AutoKeras (%)

+ - ENAS (macro) (%)

+ - ENAS (micro) (%)

+ - DARTS (%)

+ - NAO-WS (%)

+ * - Fashion-MNIST

+ - 91.84

+ - 95.44

+ - 95.53

+ - **95.74**

+ - 95.20

+ * - CIFAR-10

+ - 75.78

+ - 95.68

+ - **96.16**

+ - 94.23

+ - 95.64

+ * - CIFAR-100

+ - 43.61

+ - 78.13

+ - 78.84

+ - **79.74**

+ - 75.75

+ * - OUI-Adience-Age

+ - 63.20

+ - **80.34**

+ - 78.55

+ - 76.83

+ - 72.96

+ * - ImageNet-10-1

+ - 61.80

+ - 77.07

+ - 79.80

+ - **80.48**

+ - 77.20

+ * - ImageNet-10-2

+ - 37.20

+ - 58.13

+ - 56.47

+ - 60.53

+ - **61.20**

+

+

+Unfortunately, we could not reproduce all the results reported in the papers.

+

+The best or average results reported in the papers:

+

+.. list-table::

+ :header-rows: 1

+ :widths: auto

+

+ * - NAS

 - AutoKeras (%)

+ - ENAS (macro) (%)

+ - ENAS (micro) (%)

+ - DARTS (%)

+ - NAO-WS (%)

 * - CIFAR-10

 - 88.56 (best)

 - 96.13 (best)

 - 97.11 (best)

 - 97.17 (average)

 - 96.47 (best)
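The gap between this comparison and the published CIFAR-10 numbers can be computed directly. This is a sketch using only the two tables above. Note that the paper figures mix "best" and "average" results (DARTS reports an average), so the gaps are not strictly like-for-like. The helper name is hypothetical.

```python
# CIFAR-10 accuracies (%): (value measured in this comparison, value reported in the paper).
CIFAR10 = {
    "AutoKeras":    (75.78, 88.56),
    "ENAS (macro)": (95.68, 96.13),
    "ENAS (micro)": (96.16, 97.11),
    "DARTS":        (94.23, 97.17),
    "NAO-WS":       (95.64, 96.47),
}


def reproduction_gap(method: str) -> float:
    """Percentage points by which the paper's figure exceeds our measurement."""
    ours, reported = CIFAR10[method]
    return round(reported - ours, 2)
```

For example, ``max(CIFAR10, key=reproduction_gap)`` picks out the method with the largest shortfall.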

+

+

+AutoKeras performs relatively worse across all datasets due to the randomness in its network morphism.

+

+For ENAS, ENAS (macro) shows good results on OUI-Adience-Age and ENAS (micro) shows good results on CIFAR-10.

+

+DARTS performs well on some datasets, but we found high variance on others: the difference among three benchmark runs can be up to 5.37% on OUI-Adience-Age and 4.36% on ImageNet-10-1.
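This section relies on two statistics: the average over three repeated runs, and the difference among runs. Both can be expressed as a short helper. This sketch assumes that "difference among runs" means the maximum minus the minimum per-run accuracy. The function name and any sample numbers are hypothetical and are not measured results.

```python
from statistics import mean
from typing import Sequence, Tuple


def summarize_runs(accuracies: Sequence[float]) -> Tuple[float, float]:
    """Return (average accuracy, max - min spread) over repeated runs of one benchmark."""
    if not accuracies:
        raise ValueError("need at least one run")
    return mean(accuracies), max(accuracies) - min(accuracies)
```

For example, with three hypothetical runs ``summarize_runs([70.0, 75.0, 72.5])`` gives an average of 72.5 and a spread of 5.0. The spread is what reveals the kind of instability described above for DARTS.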

+

+NAO-WS shows good results on ImageNet-10-2, but it can perform very poorly on OUI-Adience-Age.

+

+Reference

+---------

+

+

+#.

+ Jin, Haifeng, Qingquan Song, and Xia Hu. "Efficient neural architecture search with network morphism." *arXiv preprint arXiv:1806.10282* (2018).

+

+#.

 Liu, Hanxiao, Karen Simonyan, and Yiming Yang. "DARTS: Differentiable architecture search." *arXiv preprint arXiv:1806.09055* (2018).

+

+#.

 Pham, Hieu, et al. "Efficient Neural Architecture Search via Parameters Sharing." *International Conference on Machine Learning* (2018): 4092-4101.

+

+#.

 Luo, Renqian, et al. "Neural Architecture Optimization." *Neural Information Processing Systems* (2018): 7827-7838.

diff --git a/docs/en_US/CommunitySharings/ParallelizingTpeSearch.rst b/docs/en_US/CommunitySharings/ParallelizingTpeSearch.rst

new file mode 100644

index 0000000000..3d75962f6c

--- /dev/null

+++ b/docs/en_US/CommunitySharings/ParallelizingTpeSearch.rst

@@ -0,0 +1,183 @@

+.. role:: raw-html(raw)

+ :format: html

+

+

+Parallelizing a Sequential Algorithm TPE

+========================================

+