The DYCI2 library was designed for improvisation and style imitation: it works on sequences of abstract labels (e.g. chord labels, etc.) matching segments of a memory. DYCI2 produces new sequences by recombinations of these memory segments, given a scenario expressed as a sequence of labels, and keeping some properties learned from the memory.

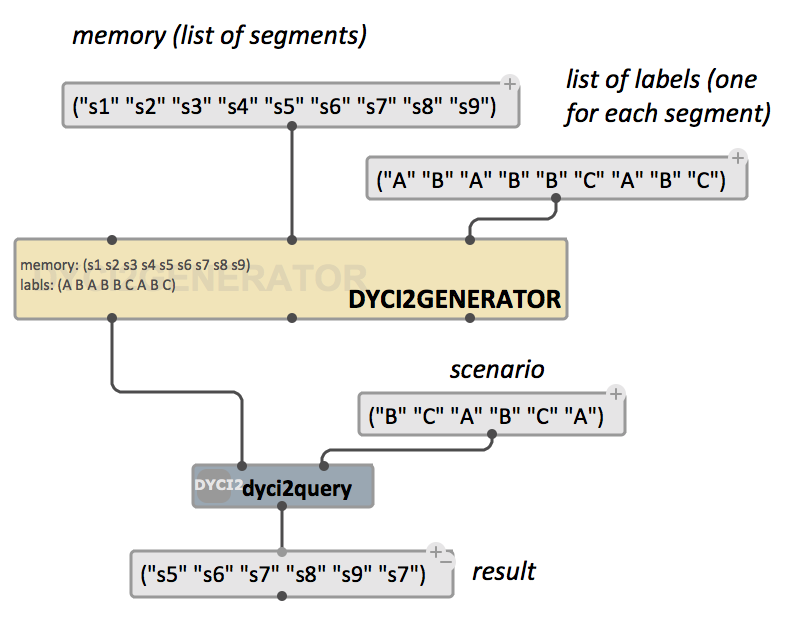

The main object in om-dyci2 is DYCI2GENERATOR. It is initialized with a memory (list of "segments") and a sequence of labels indexing this memory (=> two lists of the same length).

Currently, DYCI2GENERATOR only supports strings as memory segments and labels.

In the simple example below, the memory is a list of 9 segments ("s1", ..., "s9") indexed by an alphabet of 3 labels ("A", "B", or "C"). Once the generator is initialized, a query can be made using the dyci2query function and a new list of labels, called the scenario, which drives the generated sequence.

The patch dyci2generator-basic provided with the library reproduces a similar example.
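To make the memory/labels/scenario relationship concrete outside of OM, here is a minimal Python sketch. It is not the DYCI2 algorithm or its API: `toy_query` is a hypothetical, deliberately naive stand-in that picks, for each scenario label, a memory segment carrying that label, preferring the segment that directly follows the previous one in the memory. The label assignment below is also an assumption made for illustration.

```python
def toy_query(memory, labels, scenario):
    """Naive stand-in for a query (illustration only, not DYCI2)."""
    if len(memory) != len(labels):
        raise ValueError("memory and labels must have the same length")
    output = []
    previous = None  # memory index of the last segment used
    for wanted in scenario:
        candidates = [i for i, lab in enumerate(labels) if lab == wanted]
        if not candidates:
            raise ValueError(f"label {wanted!r} is not in the memory")
        # Prefer continuing the memory where we left off,
        # otherwise fall back to the first matching segment.
        if previous is not None and previous + 1 in candidates:
            chosen = previous + 1
        else:
            chosen = candidates[0]
        output.append(memory[chosen])
        previous = chosen
    return output


memory = ["s1", "s2", "s3", "s4", "s5", "s6", "s7", "s8", "s9"]
labels = ["A", "B", "C", "A", "B", "C", "A", "B", "C"]  # hypothetical labelling
result = toy_query(memory, labels, ["B", "C", "A", "A", "B"])
print(result)
```

The output has one memory segment per scenario label, and every segment carries the label requested at its position. The real generator applies a more elaborate navigation strategy on top of this basic constraint.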

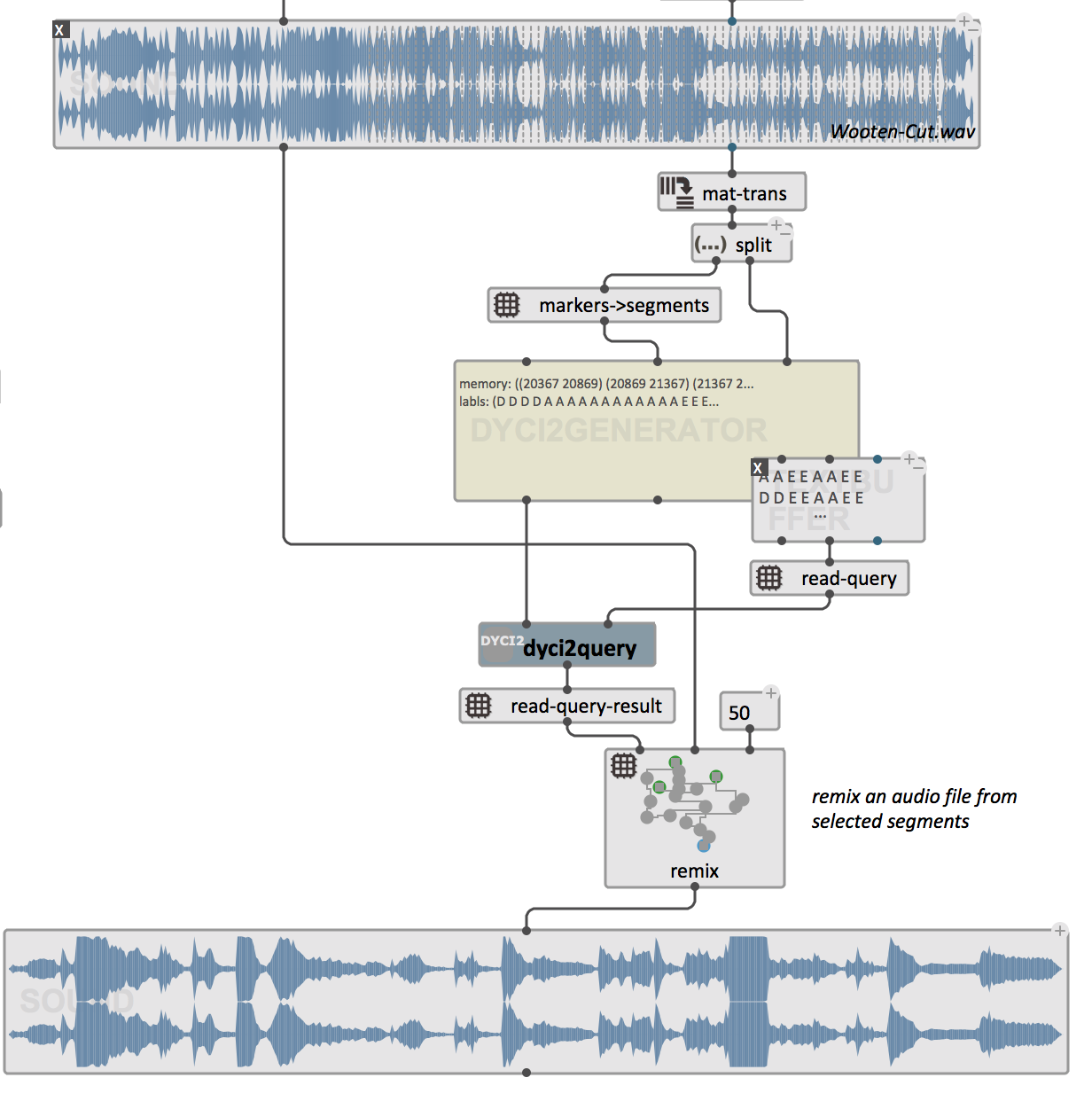

In most cases with real musical sequences, the memory will simply consist of indices or time markers indicating the begin and end times of each segment. These markers can correspond to the markers in an audio file, chords or other events in a MIDI sequence, etc. (see examples below).

Depending on the application at hand, a first trick will be to convert both the list of time markers and the corresponding labels into lists of strings suitable to initialize a DYCI2GENERATOR. A second trick will be to rebuild a musical sequence from the list of memory segments returned by the query.

Two cases are covered in the library examples:

- a segmented and labelled audio file

- a MIDI file including text labels.

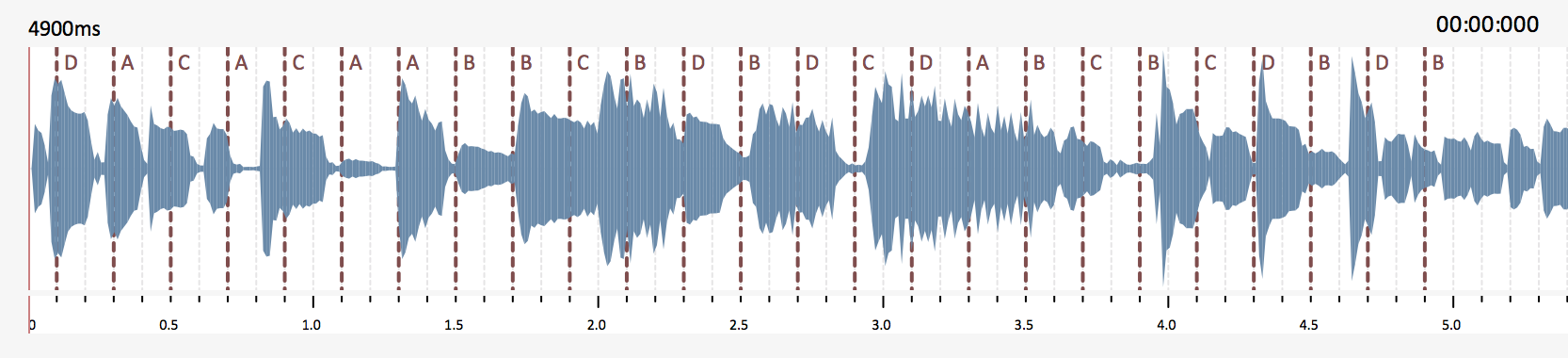

Let's start with an OM audio file (SOUND object) containing labelled markers.

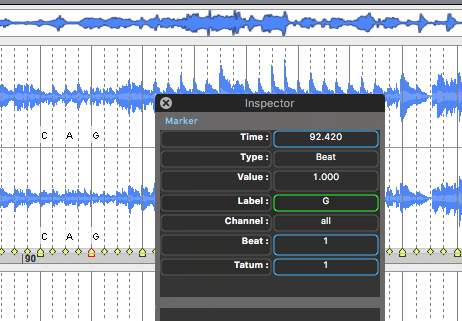

In om7, markers and labels can be set and extracted using the :markers inputs of the SOUND box.

Markers can also be added manually (CMD + click) and labelled (select + L) within the SOUND object editor.

Labelled markers are output as a list of the form ((time "label") (time "label") ...).

From this list, the following need to be generated:

- A list of segments formatted as strings: ("(t1 t2)" "(t2 t3)" ...)

- A list of labels
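The conversion described above can be sketched in Python as follows. This is only an illustration of the data transformation, not the OM patch itself. It assumes integer times (e.g. milliseconds), that each label applies to the segment starting at its marker, and that the end of the file is known; `markers_to_memory` and its `end_time` parameter are hypothetical names.

```python
def markers_to_memory(markers, end_time):
    """Turn [(time, label), ...] into (segment strings, labels).

    markers  -- labelled markers sorted by time
    end_time -- time closing the last segment (assumed to be known)
    """
    times = [time for time, _ in markers] + [end_time]
    # Each segment runs from one marker to the next: "(t1 t2)", "(t2 t3)", ...
    segments = [f"({start} {stop})" for start, stop in zip(times, times[1:])]
    labels = [str(label) for _, label in markers]
    return segments, labels


markers = [(0, "A"), (500, "B"), (1250, "A")]
segments, labels = markers_to_memory(markers, end_time=2000)
print(segments)
print(labels)
```

Both returned lists have the same length, which is what the generator expects for its memory and labels.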

The result of a query is a new list of the form ("(ta ta+1)" ... "(tx tx+1)" ...). From this list, the corresponding segments of the original audio file need to be retrieved and sequenced. This can also be done using standard OM audio tools.

=> See the example patch dyci2generator-audio included in the library.
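As a rough illustration of the reconstruction step, the Python sketch below parses the segment strings returned by a query and lays the segments end to end. It does not touch any audio; it only computes where each source segment would start in the output. The helper names are hypothetical, and integer times are assumed.

```python
def parse_segment(text):
    """Parse a segment string such as "(500 1250)" into (500, 1250)."""
    start, stop = text.strip("() ").split()
    return int(start), int(stop)


def sequence_segments(result):
    """Return (output_onset, source_start, source_stop) for each segment,
    placing the segments one after the other."""
    plan = []
    onset = 0
    for text in result:
        start, stop = parse_segment(text)
        plan.append((onset, start, stop))
        onset += stop - start  # the next segment begins where this one ends
    return plan


plan = sequence_segments(["(500 1250)", "(0 500)", "(500 1250)"])
for onset, start, stop in plan:
    print(f"at {onset}: play source from {start} to {stop}")
```

Each entry of the plan can then be used to cut the matching region out of the source sound and paste it at the computed onset.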

OM provides tools to process files and extract labels and time markers coming from external tools.

IRCAM's AudioSculpt, for instance, provides powerful means to automatically segment an audio file into beats and lets you input labels attached to the beat markers. Markers can then be exported as SDIF files and imported/processed in OM.

Depending on your annotation and storage conventions, different patches will need to be programmed to retrieve the correct format for memory and labels.

MIDI works similarly to the previous audio examples, except that labels would most likely be encoded as MIDI messages (e.g. of type Lyrics). OM also provides tools to process and extract such messages.

Some examples are provided in the library.

The function dyci2query generates a new sequence from a trained DYCI2GENERATOR object and a new list of labels (the scenario).

Note: the labels in the scenario must all be part of the original training labels.
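A quick check of this constraint before sending a query can save some debugging. The Python sketch below is a hypothetical helper, not part of om-dyci2 or DYCI2: it lists the scenario labels that never occur in the training labels.

```python
def unknown_labels(scenario, training_labels):
    """Return the scenario labels absent from the training labels,
    in order of first appearance and without duplicates."""
    known = set(training_labels)
    missing = []
    for label in scenario:
        if label not in known and label not in missing:
            missing.append(label)
    return missing


training_labels = ["A", "B", "C", "A", "B", "C"]
scenario = ["A", "D", "B", "D", "E"]
print(unknown_labels(scenario, training_labels))
```

An empty result means every label of the scenario is available in the memory.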

Depending on how the memory was formatted, adequate operations must be done to reconstitute a musical sequence (e.g. concatenating MIDI chunks, sequencing audio buffers, etc.).

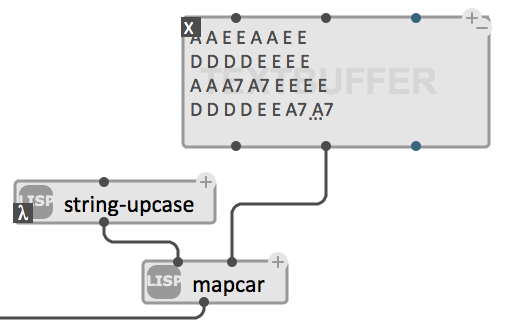

The TEXTBUFFER object is a convenient way to input queries in dyci2query. Use the :read-mode input to get the adequate contents format (e.g. using the "flat-list" option), and convert the result to strings to match the DYCI2GENERATOR labels' format.

=> Avoid spaces in labels, which might be wrongly parsed and processed.
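The reason for this advice can be illustrated with a small Python sketch. It assumes, for illustration only, that the text is split on whitespace to obtain the labels; this is not a description of how TEXTBUFFER is implemented, and `text_to_labels` is a hypothetical helper.

```python
def text_to_labels(text):
    """Split a text on whitespace and return the tokens as strings."""
    return [str(token) for token in text.split()]


clean = text_to_labels("A B C A")
broken = text_to_labels("A C maj B")      # "C maj" was meant as one label
repaired = text_to_labels("A C_maj B")    # same label written without a space
print(clean, broken, repaired)
```

With whitespace splitting, a label containing a space turns into two separate tokens, so the scenario no longer matches the labels of the memory. Writing such labels without a space (for instance with an underscore) avoids the problem.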

A lot of options and parameters in DYCI2 allow you to drive the sequence generation.

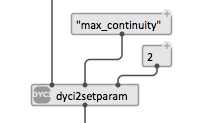

The dyci2setparam command sets parameters of the DYCI2GENERATOR.

Select the parameter (a string corresponding to its name; see the DYCI2 documentation for more info) and set the value.

Two main parameters are currently used:

- avoid_repetitions_mode:

  - 0: avoids using the same memory segment more than once in the output sequence.

  - 1: favors the least used segments for the output sequence.

  - 2: allows repetitions of segments in the output sequence.

- max_continuity: the maximum length of a sequence of consecutive memory segments in the output sequence.
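To see what these two parameters act on, it can help to measure the corresponding properties of a generated sequence. The Python sketch below is an analysis helper written for illustration only, not DYCI2 code. It assumes that memory segments are unique strings, and it counts continuity in number of segments, which may differ from how DYCI2 counts internally.

```python
from collections import Counter


def longest_memory_run(memory, output):
    """Length of the longest stretch of the output that follows
    the original order of the memory."""
    index = {segment: i for i, segment in enumerate(memory)}
    best = run = 0
    previous = None
    for segment in output:
        current = index[segment]
        if previous is not None and current == previous + 1:
            run += 1   # this segment continues the memory
        else:
            run = 1    # a jump in the memory starts a new run
        best = max(best, run)
        previous = current
    return best


def repeated_segments(output):
    """Map each segment used more than once to its number of uses."""
    counts = Counter(output)
    return {segment: n for segment, n in counts.items() if n > 1}


memory = ["s1", "s2", "s3", "s4", "s5", "s6", "s7", "s8", "s9"]
output = ["s2", "s3", "s4", "s1", "s2"]
print(longest_memory_run(memory, output))
print(repeated_segments(output))
```

Lowering max_continuity should shorten the longest run measured this way, while avoid_repetitions_mode influences how many segments show up in the repetition report.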